Standardizing Sample Collection and Storage: 2025 Best Practices for Research and Drug Development

Abstract

This guide provides a comprehensive framework for standardizing sample collection and storage, critical for ensuring data integrity and reproducibility in biomedical research and drug development. It covers foundational principles from global regulations and biobanking guidelines, details methodological workflows for handling diverse biological specimens, offers troubleshooting strategies for common pre-analytical errors, and outlines validation techniques for quality assurance. Aimed at researchers, scientists, and drug development professionals, this article synthesizes current best practices to enhance operational efficiency, facilitate cross-institutional collaboration, and uphold sample quality from collection to disposal.

The Pillars of Quality: Understanding Why Standardization is Non-Negotiable

FAQs on Data Quality in Research

Q1: What do the core data quality attributes mean in the context of sample management?

In sample management, quality attributes are specific, measurable standards that ensure the integrity and usability of research specimens and their associated data.

- Accuracy: The degree to which sample data (e.g., donor ID, collection time, volume) correctly describes the real-world specimen. An inaccurate patient identifier can link a test result to the wrong individual, compromising the entire study [1].

- Completeness: The extent to which all required data for a specimen is present. This includes mandatory fields in a database, such as patient consent status, sample type, and collection date. Incomplete data can render a sample useless for analysis [1] [2].

- Consistency: The degree to which sample information is non-conflicting when stored or used across different systems or time points. For example, a sample's collection date should be the same in the lab's database and the clinical record [1].

- Timeliness: Reflects how current and up-to-date the sample data is, and whether the data is available when needed. This also applies to the sample itself; for instance, a blood sample that is not processed and frozen within the required time window may degrade, so the data generated from it may no longer reflect the specimen as collected [1] [3].

- Usability: The fitness of a sample and its data for its intended research purpose. This overarching attribute is achieved when all other quality dimensions are met, ensuring the specimen can be confidently used in analysis [4].

Q2: A sample's data seems correct, but the result from its analysis appears to be an outlier. How can I troubleshoot this?

Do not trust a single data point at face value [5]. We recommend a systematic investigation focusing on the sample's journey and data authenticity.

1. Verify Pre-Analytical Variables: Review the sample's chain of custody. A significant portion of errors occur during the pre-analytical phase [6]. Check:
   - Collection Technique: Was the correct vacuum tube used? Was it inverted properly to mix with additives? Poor technique can cause hemolysis or microclots, altering results [3].
   - Storage Conditions: Was the sample stored at the correct temperature before analysis? Deviations can degrade samples [2] [7].
   - Sample Handling: Was the sample centrifuged at the correct speed and duration? Was there a delay in processing? [3]

2. Audit Data Authenticity and Consistency: Cross-reference the sample's data with other records.
   - Compare the participant's data from this time point with their data from previous visits. Are the values consistent with their baseline? [5]
   - Check for inconsistencies between what was documented (e.g., "sample collected") and the supporting evidence (e.g., exact collection time on the tube label matches the worksheet) [5].

3. Check for Confounding Factors: Consider the study design.
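The baseline comparison in step 2 can be made explicit with a minimal sketch, assuming the participant's earlier results for the same analyte are available as a list of numbers. The function name `flag_against_baseline` and the 3-SD cut-off are hypothetical choices, and a z-score against the participant's own history is only one simple way to express "consistent with baseline". A flagged value is a prompt to revisit steps 1 and 3, not grounds for discarding the result.

```python
import math
from statistics import mean, stdev


def flag_against_baseline(previous, current, z_threshold=3.0):
    """Compare a new result with the same participant's earlier results.

    previous: earlier values for the same analyte (at least two are needed).
    current: the value under review.
    z_threshold: hypothetical cut-off; choose it per analyte and protocol.
    Returns (z_score, flagged_for_review).
    """
    if len(previous) < 2:
        raise ValueError("need at least two prior values to estimate a baseline")
    baseline_mean = mean(previous)
    baseline_sd = stdev(previous)  # sample standard deviation
    if baseline_sd == 0:
        # A perfectly flat baseline gives no scale; flag any change for review.
        changed = current != baseline_mean
        z = math.copysign(math.inf, current - baseline_mean) if changed else 0.0
        return z, changed
    z = (current - baseline_mean) / baseline_sd
    return z, abs(z) > z_threshold


# Example: potassium (mmol/L) from four earlier visits, then a new result.
z, flagged = flag_against_baseline([4.0, 4.2, 4.1, 3.9], 6.8)
print(f"z = {z:.1f}, flag for review: {flagged}")
```

An unexpectedly high potassium like this would send the investigation back to step 1, since hemolysis is a known cause of falsely elevated potassium.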

Q3: Our team frequently encounters "Quantity Not Sufficient" (QNS) errors and mislabeled tubes. What are the best practices to prevent these issues?

These common problems are often rooted in protocol deviations and can be minimized with strict procedures and checklists.

To Prevent QNS Errors:

- Know Volume Requirements: Always consult the test requirements and central lab manual prior to collection to know the minimum volume needed [3] [2].

- Use Proper Equipment: Ensure you are using the correct tube type that is designed to draw the required volume [3].

- Verify Fill Levels: Before processing, check that tubes have been filled to the appropriate level [3].

To Prevent Labeling Errors:

- The Two-Identifier Rule: Label all primary containers with at least two patient identifiers (e.g., two of: full name, date of birth, study ID number) at the time of collection [3] [6].

- Bedside Labeling: Label tubes immediately after drawing them, in the presence of the patient [6].

- Leverage Technology: Implement barcode wristbands and bedside barcode printing systems. One study showed this reduced specimen labeling errors by 62% [6].

- Pre-Prepare Labels: For studies with multiple patients, prepare labels in advance to reduce the risk of applying the wrong label under time pressure [2].
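Where labels and fill volumes are captured electronically (for example at bedside barcode printing or at sample accessioning), the QNS and labeling rules above can be encoded as a pre-processing check. This is a sketch under assumptions: `TubeRecord`, `check_tube`, and the identifier field names are hypothetical, and the required tube type and minimum volume would come from the test requirements and central lab manual.

```python
from dataclasses import dataclass, field

# Hypothetical label fields; use the identifiers your labeling SOP specifies.
IDENTIFIER_FIELDS = ("full_name", "date_of_birth", "study_id")


@dataclass
class TubeRecord:
    tube_type: str
    fill_volume_ml: float
    label: dict = field(default_factory=dict)


def check_tube(tube, required_type, min_volume_ml):
    """Return a list of problems; an empty list means the tube passes these checks."""
    problems = []
    # Count identifiers that are present and non-blank on the label.
    present = [f for f in IDENTIFIER_FIELDS if str(tube.label.get(f, "")).strip()]
    if len(present) < 2:
        problems.append("fewer than two patient identifiers on label")
    if tube.tube_type != required_type:
        problems.append(f"wrong tube type: {tube.tube_type} (expected {required_type})")
    if tube.fill_volume_ml < min_volume_ml:
        problems.append(f"QNS: {tube.fill_volume_ml} mL < {min_volume_ml} mL required")
    return problems


tube = TubeRecord("EDTA", 1.5, {"study_id": "S-001"})
for problem in check_tube(tube, required_type="EDTA", min_volume_ml=2.0):
    print(problem)
```

Returning a list of problems rather than a single pass/fail lets the collector fix every issue in one pass before the patient leaves.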

Troubleshooting Guide: Common Sample Collection Issues

| Problem | Potential Impact on Research | Corrective & Preventive Actions |
|---|---|---|
| Incorrect Collection Tube Used [2] | Invalid Results: Additives (e.g., anticoagulants) in the wrong tube can alter chemistry or invalidate tests. Sample Loss. | Corrective: Discard sample and recollect using proper tube. Preventive: Keep a color-coded tube guide at each phlebotomy station; review the Central Lab Manual before starting [2]. |
| Hemolyzed Sample [3] | Inaccurate Analysis: Spilled intracellular components can falsely elevate potassium, LDH, AST, and other analytes. | Corrective: Recollect the sample. Preventive: Use appropriate needle gauge (21-22G); avoid vigorous shaking; allow alcohol to dry before venipuncture; ensure proper clotting before centrifugation [3]. |
| Improper Storage Conditions [2] [7] | Sample Degradation: Loss of analyte stability, death of cells in culture, bacterial overgrowth. Irreversible Damage. | Corrective: If stability is unknown, assume degradation and recollect. Preventive: Clearly mark storage zones (ambient, refrigerated, frozen); use continuous temperature monitoring with alerts; implement backup power systems [7] [6]. |
| Use of Expired Collection Kits [2] | Unreliable Results: Evacuated tubes may lose vacuum; preservatives or additives may degrade. | Corrective: Recollect using a kit with a valid expiration date. Preventive: Implement a first-in-first-out (FIFO) inventory system; perform regular audits of lab kits and discard expired supplies [2] [7]. |
| Missing or Incomplete Data [2] | Breach of Protocol: Compromises chain of custody, risks sample exclusion from analysis. Introduces Bias. | Corrective: Attempt to recover data from source documents. Preventive: Use a Lab Information Management System (LIMS) to enforce required fields; ensure "if it's not documented, it didn't happen" is a core lab principle [7]. |

Quality Attribute Metrics for Experimental Protocols

To standardize the assessment of sample quality, the following metrics should be tracked and reported in study protocols.

Table 1: Metrics for Core Quality Attributes

| Quality Attribute | Metric to Measure | Calculation Method | Target Threshold |
|---|---|---|---|
| Completeness | Percentage of mandatory fields populated for all samples. | (Number of samples with fully populated mandatory fields / Total number of samples) * 100 [1] | >98% |
| Uniqueness | Rate of duplicate or misidentified samples. | (Number of samples with duplicate identifiers / Total number of samples) * 100 [1] | <0.1% |
| Timeliness | Percentage of samples processed within the required time window. | (Number of samples processed within protocol-specified time / Total number of samples) * 100 [1] | >95% |
| Accuracy | Percentage of data points that match verifiable source documents. | (Number of verified correct data points / Total number of data points checked) * 100 [1] | >99% |
| Consistency | Percentage of sample records that match across different systems (e.g., LIMS vs. EHR). | (Number of perfectly matched records / Total number of records checked) * 100 [1] | >99.5% |
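A minimal sketch of how three of the Table 1 metrics (completeness, the duplicate rate used for uniqueness, and timeliness) could be computed from sample records, assuming each record is a Python dict. The field names in `MANDATORY_FIELDS`, the `collected_at`/`processed_at` keys, and the function names are hypothetical. Accuracy and consistency are left out because they require comparison against source documents or a second system such as an EHR.

```python
from collections import Counter
from datetime import datetime, timedelta

# Hypothetical mandatory fields; use the ones your protocol specifies.
MANDATORY_FIELDS = ("sample_id", "consent_status", "sample_type", "collection_date")

# Target thresholds from Table 1, as (comparison, limit in percent).
TARGETS = {"completeness": (">", 98.0), "duplicate_rate": ("<", 0.1), "timeliness": (">", 95.0)}


def pct(numerator, denominator):
    """Percentage helper that returns 0.0 for an empty denominator."""
    return 100.0 * numerator / denominator if denominator else 0.0


def quality_metrics(samples, max_delay):
    """Compute completeness, duplicate rate, and timeliness for a list of sample dicts."""
    n = len(samples)
    complete = sum(
        all(s.get(f) not in (None, "") for f in MANDATORY_FIELDS) for s in samples
    )
    id_counts = Counter(s.get("sample_id") for s in samples)
    # Every sample that shares its identifier with another sample counts as a duplicate.
    duplicates = sum(1 for s in samples if id_counts[s.get("sample_id")] > 1)
    timely = sum(
        1 for s in samples
        if s.get("collected_at") and s.get("processed_at")
        and s["processed_at"] - s["collected_at"] <= max_delay
    )
    return {
        "completeness": pct(complete, n),
        "duplicate_rate": pct(duplicates, n),
        "timeliness": pct(timely, n),
    }


def meets_targets(metrics):
    """Compare each computed metric with its Table 1 target."""
    return {
        name: metrics[name] > limit if op == ">" else metrics[name] < limit
        for name, (op, limit) in TARGETS.items()
    }


if __name__ == "__main__":
    t0 = datetime(2025, 3, 1, 9, 0)
    demo = [{"sample_id": "S1", "consent_status": "yes", "sample_type": "serum",
             "collection_date": "2025-03-01", "collected_at": t0,
             "processed_at": t0 + timedelta(minutes=40)}]
    m = quality_metrics(demo, max_delay=timedelta(hours=2))
    print(m, meets_targets(m))
```

The protocol-specified processing window is passed in as `max_delay` rather than hard-coded, since it differs by sample type and analyte.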

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Key Materials for Standardized Sample Collection & Storage

| Item | Function & Importance in Standardization |
|---|---|
| EDTA Tubes (e.g., Lavender Top) | Prevents coagulation by chelating calcium. Critical for hematology tests like CBCs, as it preserves cellular morphology. The correct fill volume and immediate inversion are essential for data accuracy [3]. |
| Serum Separator Tubes (SSTs/Gel-Barrier) | Contains a clot activator and a gel barrier. After centrifugation, the gel forms a stable barrier between serum and cells, which is critical for obtaining high-quality, non-hemolyzed serum for chemistry tests [3]. |
| Cryogenic Vials | Designed for safe storage of samples in liquid nitrogen or -80°C freezers. Their integrity is critical for long-term biobanking, preventing sample degradation and ensuring data validity in longitudinal studies [7]. |
| Chain of Custody Forms (Digital or Paper) | Documents every individual who has handled a sample from collection to analysis. Critical for maintaining sample integrity, audit trails, and meeting regulatory compliance standards in clinical trials [7] [6]. |
| Lab Information Management System (LIMS) | A software platform that centralizes sample data, tracks location, manages storage conditions, and automates workflows. Critical for scaling operations, ensuring consistency, and providing real-time quality control [7]. |

Sample Integrity Assessment Workflow

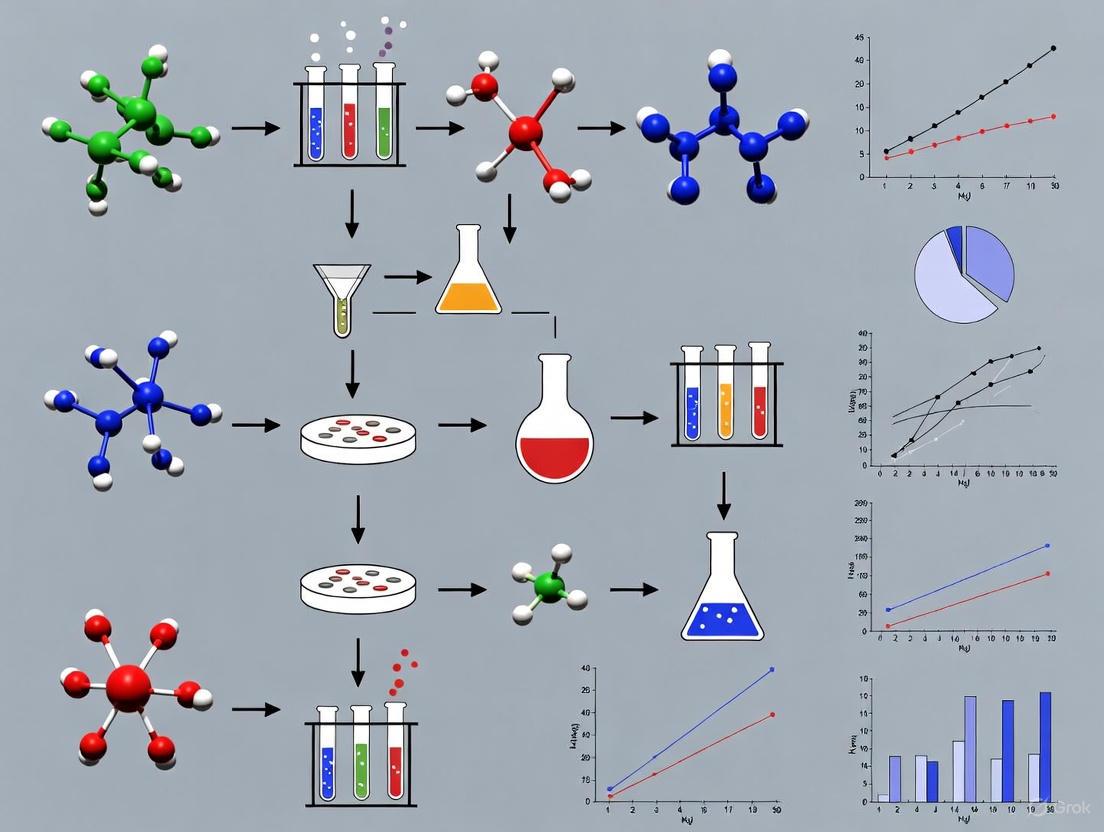

The following diagram maps the logical workflow for assessing and ensuring sample integrity against the core quality dimensions from collection through to analysis.

Six Dimensions for Qualitative Data Assessment

When analyzing observational or usability data from experimental protocols (e.g., technician feedback, participant responses), apply these six dimensions to separate surface impressions from real insights [5].

This technical support center provides troubleshooting guides and FAQs to help researchers, scientists, and drug development professionals navigate the complex regulatory environment governing sample collection, storage, and data handling, in support of the broader goal of standardizing sample collection and storage.

Frequently Asked Questions (FAQs)

Q1: Our research institute operates across multiple US states. Which data privacy laws are most critical for us to comply with in 2025?

The US has no single national privacy law, creating a complex patchwork of state-level regulations [8]. For 2025, compliance with the following new and updated laws is critical [9] [10]:

- New Jersey Personal Data Privacy Act: Effective January 15, 2025, it requires data protection assessments before high-risk data processing and has specific rules for minors' data [10].

- Delaware, Iowa, Nebraska, and New Hampshire Laws: All effective January 1, 2025, each with variations in consumer rights and cure periods [9] [10].

- Minnesota Consumer Data Privacy Act: Effective July 31, 2025, it grants consumers unique rights to question profiling results and may implicitly require a Chief Privacy Officer [9] [10].

- Maryland Personal Data Protection Law: Effective October 1, 2025, it features stricter data minimization requirements and a complete ban on selling sensitive data [9] [10].

- Tennessee Information Protection Act: Effective July 1, 2025, it offers an affirmative defense for businesses following recognized privacy frameworks [9] [10].

Table: Key 2025 State Privacy Law Dates and Provisions

| State | Effective Date | Key Feature | Cure Period |
|---|---|---|---|
| Delaware | January 1, 2025 [10] | Entity-level GLBA exemption [10] | 60-day, expires Dec 31, 2025 [10] |
| New Hampshire | January 1, 2025 [10] | Universal opt-out mechanism support [10] | 60-day, expires Dec 31, 2025 [10] |
| New Jersey | January 15, 2025 [10] | Mandatory pre-processing data protection assessments [10] | 30-day, until July 15, 2026 [10] |
| Minnesota | July 31, 2025 [9] | Right to explanation of profiling [10] | 30-day, until Jan 31, 2026 [10] |
| Maryland | October 1, 2025 [9] | "Strictly necessary" data collection standard [10] | 60-day, until April 1, 2027 [10] |
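For institutions tracking several jurisdictions at once, the dates in the table can be kept as data and queried, for instance to see which laws apply on a given date and whether a cure period is still available. This sketch encodes only the five states in the table (Iowa, Nebraska, and Tennessee are omitted because the table gives no cure-period data for them); the names `STATE_LAWS`, `law_status`, and `laws_in_effect` are hypothetical, and treating each cure-period end date as inclusive is an assumption.

```python
from datetime import date

# (effective date, cure period in days, last day the cure period applies),
# transcribed from the table above. Treating the end date as inclusive is an assumption.
STATE_LAWS = {
    "Delaware": (date(2025, 1, 1), 60, date(2025, 12, 31)),
    "New Hampshire": (date(2025, 1, 1), 60, date(2025, 12, 31)),
    "New Jersey": (date(2025, 1, 15), 30, date(2026, 7, 15)),
    "Minnesota": (date(2025, 7, 31), 30, date(2026, 1, 31)),
    "Maryland": (date(2025, 10, 1), 60, date(2027, 4, 1)),
}


def law_status(state, on):
    """Describe whether a state's law is in effect on a date and whether a cure period applies."""
    effective, cure_days, cure_ends = STATE_LAWS[state]
    if on < effective:
        return "not yet in effect"
    if on <= cure_ends:
        return f"in effect, {cure_days}-day cure period available"
    return "in effect, cure period expired"


def laws_in_effect(on):
    """States from the table whose law is in effect on the given date."""
    return sorted(s for s, (effective, _, _) in STATE_LAWS.items() if effective <= on)


print(laws_in_effect(date(2025, 8, 1)))
print(law_status("Maryland", date(2025, 8, 1)))
```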

Q2: What are the core GxP standards we must follow for sample integrity in clinical research and biobanking?

GxP is a collective term for "Good Practice" quality guidelines that ensure product quality, data integrity, and patient safety throughout the product lifecycle [11] [12]. The core domains are [13] [11]:

- Good Laboratory Practice (GLP): Ensures the uniformity, consistency, reliability, reproducibility, quality, and integrity of non-clinical safety tests in research labs [13].

- Good Clinical Practice (GCP): An international ethical and scientific quality standard for designing, conducting, recording, and reporting trials that involve human subjects [13] [11].

- Good Manufacturing Practice (GMP): Governs the manufacturing and quality assurance of products, covering issues like cleanliness, record-keeping, and personnel qualifications [13] [11].

- Good Distribution Practice (GDP): The minimum standard for wholesale distributors to ensure that the quality and integrity of medicines are maintained throughout the supply chain [13].

Q3: We are implementing an automated sample storage system. How can we ensure it meets GxP data integrity requirements?

Automated sample storage systems are critical for modern biobanking and research, with the global market projected to grow from USD 1.3 billion in 2024 to USD 3.6 billion by 2034 [14]. To ensure GxP compliance [13] [12]:

- Implement Robust Data Governance: Establish comprehensive data governance policies, including data classification, access controls, and regular audits.

- Ensure System Validation: Follow a defined software development lifecycle (SDLC) process and validate all computerized systems.

- Maintain Audit Trails: Implement secure, computer-generated, time-stamped audit trails to independently record user actions.

- Apply ALCOA+ Principles: Ensure all data is Attributable, Legible, Contemporaneous, Original, and Accurate (ALCOA), plus complete, consistent, enduring, and available.

- Integrate with LIMS: Ensure your automated storage seamlessly integrates with Laboratory Information Management Systems (LIMS) for efficient sample tracking and metadata management [14].
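The audit-trail and ALCOA+ points above rest on one property: once recorded, an entry cannot be silently altered. A minimal sketch of one way to make a log tamper-evident is hash chaining, where each entry stores the SHA-256 hash of the previous one so that editing any past entry breaks verification. The `AuditTrail` class and its methods are hypothetical and in-memory only; a GxP system would also need validated, access-controlled, persistent storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    """Hash an entry body deterministically (sorted keys)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditTrail:
    """Append-only, hash-chained log of user actions on samples."""

    def __init__(self):
        self._entries = []

    def record(self, user, action, sample_id, timestamp=None):
        # The system, not the user, supplies the time stamp (UTC by default).
        ts = (timestamp or datetime.now(timezone.utc)).isoformat()
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS_HASH
        body = {"user": user, "action": action, "sample_id": sample_id,
                "timestamp": ts, "prev_hash": prev_hash}
        entry = dict(body, hash=_digest(body))
        self._entries.append(entry)
        return entry

    def verify(self):
        """Recompute the chain; False means some entry was altered, removed, or reordered."""
        prev_hash = GENESIS_HASH
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("jdoe", "checked in", "S-001")
trail.record("asmith", "moved to freezer F2", "S-001")
print(trail.verify())
```

Hash chaining is the same idea that underlies the blockchain entry in the toolkit table below, without the distributed ledger.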

Table: The Scientist's Toolkit: Essential GxP Compliance Solutions

| Tool / Solution | Function in Research & Compliance |
|---|---|
| Integrated Compliance Platforms | End-to-end digital systems combining various tools for real-time GxP monitoring and documentation [12]. |
| Automated Sample Storage System | Robotic systems providing secure, traceable, temperature-controlled storage for biological samples, minimizing human error [14]. |
| Laboratory Information Management System (LIMS) | Software for tracking sample metadata, automating retrieval, and providing real-time inventory updates [14]. |
| Blockchain Technology | Provides an immutable, transparent ledger for tracking data and samples throughout the product lifecycle, enhancing auditability [12]. |
| RFID & 2D Barcode Tracking | Advanced labeling technologies for real-time sample identification, minimizing manual intervention and enhancing traceability [14]. |

Troubleshooting Common Compliance Issues

Issue 1: Data Breach or Integrity Failure During a Clinical Trial

Problem: A data integrity failure or potential breach is identified, risking GCP non-compliance and invalidation of trial data.

Solution:

- Immediate Action: Isolate affected systems and conduct a root cause analysis following GCP protocols for documentation and reporting [13].

- Remediation: Implement enhanced cybersecurity controls. In 2025, 90% of life sciences organizations are increasing such investments [12].

- Long-term Prevention: Adopt AI and machine learning to automate routine compliance checks and monitor for deviations in real-time [12].

Issue 2: Sample Integrity Compromise in Biobank

Problem: A breach in sample integrity is detected, potentially due to temperature excursion or misidentification in storage.

Solution:

- Immediate Action: Quarantine affected samples and document the event per GLP guidelines. Use the system's audit trail to trace all actions on the samples [13].

- Remediation: Validate and utilize the automated storage system's real-time environmental monitoring and alert functions. Systems integrated with AI can predict maintenance needs to prevent such failures [14].

- Long-term Prevention: Ensure your automated storage system meets GMP/GDP requirements for equipment calibration and maintenance, and that it integrates with LIMS for full sample traceability [13] [14].
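To decide which samples to quarantine, the monitoring data first has to be reduced to excursion windows. A minimal sketch, assuming logger readings arrive as time-sorted `(timestamp, temperature_c)` pairs; the function name, the -90 to -60 °C alert range, and the minimum duration are hypothetical values to be set from the unit's SOP. Duration is measured from the first to the last out-of-range reading, so the logging interval limits how precisely a window is resolved.

```python
from datetime import datetime, timedelta


def find_excursions(readings, low, high, min_duration):
    """Return (start, end) windows where consecutive readings stayed outside
    [low, high] for at least min_duration.

    readings: list of (timestamp, temperature_c) tuples sorted by time.
    """
    excursions = []
    start = last_out = None
    for ts, temp in readings:
        if temp < low or temp > high:
            if start is None:
                start = ts
            last_out = ts
        elif start is not None:
            # Back in range: close the window if it lasted long enough.
            if last_out - start >= min_duration:
                excursions.append((start, last_out))
            start = None
    # An excursion may still be ongoing at the last reading.
    if start is not None and last_out - start >= min_duration:
        excursions.append((start, last_out))
    return excursions


# Hypothetical ultra-freezer log, one reading every 5 minutes.
t0 = datetime(2025, 5, 1, 0, 0)
temps = [-80, -79, -55, -50, -52, -78]
readings = [(t0 + timedelta(minutes=5 * i), t) for i, t in enumerate(temps)]
for start, end in find_excursions(readings, -90, -60, timedelta(minutes=10)):
    print(f"excursion from {start} to {end}")
```

Samples held in the affected unit during any returned window are the candidates for quarantine, and the window boundaries can be cross-checked against the audit trail.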

The following workflow outlines the integrated compliance path for managing samples and data, connecting the key regulatory and operational steps discussed.

Issue 3: Inability to Fulfill a Consumer Data Deletion Request

Problem: A research participant from California requests the deletion of their data, but the data is part of a longitudinal study and cannot be simply removed without compromising research integrity.

Solution:

- Assessment: Determine if your organization qualifies as a "business" under laws like the CCPA and if an exemption applies (e.g., for research conducted in the public interest) [8].

- Action: If no exemption applies, comply with the request using verified processes. For data brokers, the California "Delete Act" requires a single, accessible deletion mechanism, through which a consumer can ask all registered data brokers to delete their data, to be available by January 1, 2026 [8].

- Prevention: Update privacy notices and consent forms to clearly explain data usage in research and the rights applicable to participants.

This technical support center provides troubleshooting guides and frequently asked questions (FAQs) to help researchers, scientists, and drug development professionals navigate the practical challenges of sample management in the context of standardized sample collection and storage.

Troubleshooting Guides

Guide 1: Addressing Sample Degradation Issues

Sample degradation compromises data integrity. This guide helps identify and rectify common causes.

| Problem | Possible Root Cause | Recommended Action | Preventive Measures |
|---|---|---|---|
| Unexpected analyte degradation | Incorrect or fluctuating storage temperature [15] | Check temperature monitoring system and data loggers; implement corrective actions per SOP [15]. | Validate analyte stability for all storage conditions; use storage units with continuous monitoring and alarm systems [15]. |
| Poor sample quality upon analysis | Inconsistent processing or handling post-collection [16] | Review processing protocols (centrifugation time/force, temperature) for consistency [15]. | Use standardized protocols, smart tubes, and train staff on new preparation techniques [16]. |
| Compromised sample integrity after transport | Temperature excursion during shipment [16] | Inspect packaging and data loggers upon receipt; document deviation [15]. | Use qualified carriers, validated packaging (e.g., dry shippers), and ship with temperature data loggers [16] [15]. |

Guide 2: Resolving Sample Identification and Traceability Errors

Unambiguous sample identification is critical for data credibility. Troubleshoot identification issues using this guide.

| Problem | Possible Root Cause | Recommended Action | Preventive Measures |
|---|---|---|---|
| Unreadable or lost sample labels | Handwritten labels; labels incompatible with storage conditions (e.g., liquid nitrogen) [16] | Reconcile samples against shipment inventory and protocol information; use a unique identifier [15]. | Move away from handwritten labels; use pre-printed barcodes, QR codes, or RFID chips with storage-compatible materials [16]. |
| Gaps in chain of custody | Lack of a robust electronic tracking system [15] | Reconstruct sample movement from paper records and lab notebooks; report discrepancy [15]. | Implement a Laboratory Information Management System (LIMS) compliant with 21 CFR Part 11 to maintain audit trails [16] [15]. |
| Mismatch between sample and data | Human error during manual data entry or sample logging [16] | Halt analysis and verify all sample identifiers against the electronic inventory [15]. | Automate data capture with barcode scanners; split samples into multiple aliquots shipped separately to preserve one set [16] [15]. |
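When sample IDs must sometimes be typed by hand, a check digit lets the tracking system reject most entry errors immediately. The guide does not specify an ID scheme; this sketch assumes purely numeric IDs and uses the Luhn (mod 10) algorithm, which catches every single-digit error and most adjacent transpositions. The function names are hypothetical.

```python
def luhn_check_digit(payload: str) -> int:
    """Compute the Luhn (mod 10) check digit for a string of decimal digits."""
    total = 0
    # Walk right to left; double every other digit, starting with the rightmost.
    for i, ch in enumerate(reversed(payload)):
        d = int(ch)
        if i % 2 == 0:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return (10 - total % 10) % 10


def make_sample_id(serial: str) -> str:
    """Append a check digit to a numeric serial when the ID is first issued."""
    return serial + str(luhn_check_digit(serial))


def is_valid_sample_id(sample_id: str) -> bool:
    """Reject IDs that are non-numeric or whose last digit does not match."""
    if not (sample_id.isascii() and sample_id.isdigit()) or len(sample_id) < 2:
        return False
    return luhn_check_digit(sample_id[:-1]) == int(sample_id[-1])


new_id = make_sample_id("7992739871")
print(new_id, is_valid_sample_id(new_id), is_valid_sample_id("79927398714"))
```

Printed barcodes carry their own symbology checks, so this mainly protects the manual-entry path flagged in the last row of the table.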

Frequently Asked Questions (FAQs)

Sample Collection & Consent

Q: What are the key consent requirements for collecting biological specimens for future research? A: When obtaining consent, it must be clear to participants that they can refuse permission for future research use without it affecting their participation in the current study or their healthcare. Participants should also be informed that they can change their mind and withdraw permission at a future date. For research involving minors, permission must be obtained from a parent or guardian, and the child, upon becoming an adult, must have the right to rescind that permission. It is recommended that consent for future use be incorporated into the main study consent form rather than being a separate document [17].

Q: Is additional consent needed to use previously collected, identifiable biological specimens for a new study? A: Yes. If an investigator plans to use already collected identifiable biological specimens for research not defined in the original protocol, they must consult with their IRB. If the IRB finds the existing consent is insufficient, then new consent must be obtained or waived by the IRB [17].

Sample Storage & Handling

Q: What are the industry-standard storage temperatures, and how should they be documented? A: To avoid confusion from slight variations in temperature settings (e.g., a freezer set to -70°C vs. -80°C), it is recommended to move away from specific temperatures and adopt standard terminology with defined ranges. The suggested terms are "room temperature," "refrigerator," "freezer," and "ultra-freezer." All storage units must have continuous temperature monitoring with alert systems for excursions [15].
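The standard-terminology approach can be sketched as a lookup from set point to term. The answer above does not state the numeric ranges, so the ranges below are illustrative assumptions to be replaced with the values in your own SOP; the names `STORAGE_RANGES_C`, `storage_term`, and `in_range` are hypothetical.

```python
# Illustrative ranges (degrees Celsius, inclusive). The text recommends defined ranges
# but does not state them, so these values are assumptions to replace with your SOP's.
STORAGE_RANGES_C = {
    "room temperature": (15.0, 25.0),
    "refrigerator": (2.0, 8.0),
    "freezer": (-25.0, -15.0),
    "ultra-freezer": (-90.0, -60.0),
}


def storage_term(setpoint_c):
    """Map a unit's set point to a standard storage term, or None if it fits no range."""
    for term, (low, high) in STORAGE_RANGES_C.items():
        if low <= setpoint_c <= high:
            return term
    return None


def in_range(term, reading_c):
    """Check a monitored reading against the range defined for a storage term."""
    low, high = STORAGE_RANGES_C[term]
    return low <= reading_c <= high


# A -70 °C and a -80 °C freezer are both documented simply as "ultra-freezer".
print(storage_term(-70), storage_term(-80))
```

Documenting the term rather than the exact set point keeps records comparable across sites, while `in_range` gives the monitoring system one definition to alert against.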

Q: What are the best practices for ensuring sample integrity during long-term storage? A: Best practices include [16] [15]:

- Secure, Access-Controlled Facilities: Limit access to authorized personnel using badges, codes, or biometrics.

- 24/7 Monitoring: Implement monitoring and warning systems for storage units, with contingency plans for equipment failure.

- Disaster Recovery Plan: Have a plan for power outages or unit failures.

- LIMS: Use a Laboratory Information Management System for real-time inventory tracking and location status.

- Sample Splitting: Split samples into multiple sets (e.g., Set 1 and Set 2) and store them in different units to mitigate risk.
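The sample-splitting rule (no two sets of the same sample in the same unit) can be enforced when storage locations are assigned. A minimal sketch, assuming storage units are identified by hypothetical names such as "F1"; rotating the starting unit per sample also spreads the load across units.

```python
def assign_sets(sample_ids, units, n_sets=2):
    """Map (sample_id, set_number) to a storage unit so that the sets of one
    sample never share a unit."""
    if n_sets > len(units):
        raise ValueError("need at least as many storage units as sets")
    plan = {}
    for i, sid in enumerate(sample_ids):
        for s in range(n_sets):
            # Offsets 0..n_sets-1 are distinct modulo len(units), so units differ.
            plan[(sid, s + 1)] = units[(i + s) % len(units)]
    return plan


plan = assign_sets(["S1", "S2"], ["F1", "F2", "F3"])
for (sid, set_no), unit in sorted(plan.items()):
    print(f"{sid} set {set_no} -> {unit}")
```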

Sample Transport & Logistics

Q: What regulations govern the transportation of biological samples? A: Transporting biological samples is a demanding process that requires adherence to international regulations. Key standards include [16]:

- IATA: International Air Transport Association regulations for air transport.

- ADR: European Agreement concerning the International Carriage of Dangerous Goods by Road.

- MOTS (49 CFR 173.6): Materials of Trade standards for road transport in the US.

Biological samples that meet the definition of infectious substances are classified as dangerous goods, either Category A (UN2814/UN2900) or Category B (UN3373), each with specific packaging requirements.

Q: What should I check when receiving a sample shipment? A: Upon receipt [15]:

- Inspect the packaging for damage.

- Verify that the required shipping conditions were maintained (e.g., sufficient dry ice remains).

- Check the samples against the shipment inventory for discrepancies.

- Log the samples into your facility's tracking system (e.g., LIMS) and move them to appropriate storage conditions immediately. Any deviations must be reported to the shipping facility and responsible personnel.
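The inventory check at receipt amounts to comparing two lists of IDs. A minimal sketch, assuming the shipment manifest and the IDs scanned on arrival are both available as lists of strings; `reconcile` and `has_discrepancy` are hypothetical names. Any non-empty category is a deviation to report.

```python
from collections import Counter


def reconcile(manifest_ids, scanned_ids):
    """Compare the shipment inventory with the IDs scanned at receipt."""
    counts = Counter(scanned_ids)
    manifest, scanned = set(manifest_ids), set(counts)
    return {
        "missing": sorted(manifest - scanned),        # listed but not received
        "unexpected": sorted(scanned - manifest),     # received but not listed
        "scanned_twice": sorted(i for i, c in counts.items() if c > 1),
    }


def has_discrepancy(report):
    """True if any category in the reconciliation report is non-empty."""
    return any(report.values())


report = reconcile(["S1", "S2", "S3"], ["S1", "S3", "S3", "S9"])
print(report, has_discrepancy(report))
```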

Essential Workflows in Sample Management

The following diagram illustrates the complete lifecycle of a biological sample, from collection to final disposal, highlighting key decision points and pathways.

The Scientist's Toolkit: Key Research Reagent Solutions

This table details essential materials and systems used in effective sample management.

| Item | Function & Purpose |
|---|---|
| LIMS (Laboratory Information Management System) | A robust electronic system for managing sample data, inventory, chain of custody, and audit trails, often compliant with 21 CFR Part 11 [16] [15]. |
| Standardized Collection Tubes | Tubes with appropriate anticoagulants or preservatives (e.g., smart tubes, microtainers) to ensure sample stability at the point of collection [16] [15]. |
| Barcode/QR Code/RFID Labels | For unambiguous sample identification from collection onwards, replacing error-prone handwritten labels and enabling efficient tracking [16]. |
| Temperature-Monitored Storage Units | Refrigerators, freezers, and ultra-freezers with continuous monitoring and alarm systems to maintain sample integrity [15]. |
| Validated Shipping Containers | Packaging such as dry shippers that maintain required temperature conditions during transport, complying with IATA/ADR regulations [16] [15]. |
| Temperature Data Loggers | Devices included in shipments or storage to monitor and record conditions, providing evidence of stability maintenance [15]. |
| Chain of Custody Documentation | Paper or electronic records that track every handler, location, and storage condition change throughout a sample's life [15]. |

The Critical Role of Standardization in Multi-Center Studies and Biobanking

Why is a standardized organizational structure non-negotiable in multi-center studies?

A well-defined organizational structure is fundamental to the success of a multi-center study. It ensures adequate communication, monitoring, and coordination across all participating sites, which is critical for maintaining protocol adherence and data integrity [18].

The following structure is commonly recommended:

- Steering Committee: Composed of principal investigators from major participating clinical centers, this committee is responsible for designing the protocol, approving changes, and dealing with operational problems [18].

- Coordinating Center: This center performs critical functions including preparing the manual of operations, developing data collection forms, randomizing patients, and data analysis. Its most important role is monitoring data quality and participation levels across all clinical units [18].

- Advisory Committee: Comprising independent experts not contributing data to the study, this committee reviews study design, adjudicates controversies, and evaluates interim data for trends that might necessitate early study termination [18].

- Central Observers/Labs: These are essential for ensuring consistent performance and interpretation of specific laboratory tests or diagnostic findings across all centers, minimizing inter-institutional variation [18].

What are the most critical pre-analytical variables to control in biobanking?

The quality of biospecimens can be severely compromised by pre-analytical variables, especially for sensitive genomic, proteomic, and metabolomic analyses [19]. Controlling these factors is vital for providing robust and reliable samples for research.

Table: Key Pre-analytical Variables and Their Impacts

| Variable | Potential Impact on Sample Quality |
|---|---|
| Warm and Cold Ischemia | Can degrade biomolecules and alter protein phosphorylation states [19]. |
| Freeze-Thaw Cycles | Can cause protein denaturation, degradation, and loss of nucleic acid integrity [19]. |
| Type of Stabilizing Solution | Inappropriate solutions can inhibit downstream analytical techniques or fail to preserve target molecules [19]. |
| Time to Processing | Delays can lead to glycolysis in blood samples, altering metabolite levels, or RNA degradation in tissues [20]. |
| Storage Temperature Fluctuations | Can accelerate sample degradation and reduce long-term viability [20]. |

The international standard ISO 20387:2018 for biobanking requires that processes for collecting, processing, and preserving biological material are defined and controlled to ensure fitness for the intended research purpose [19] [21] [22].
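Freeze-thaw cycles are among the easiest of these variables to control, provided each aliquot's history is recorded. A minimal sketch, assuming a per-aliquot record in the LIMS; the `Aliquot` class is hypothetical, and the cycle limit is a placeholder that should come from validated stability data for the analyte.

```python
from dataclasses import dataclass, field


@dataclass
class Aliquot:
    aliquot_id: str
    max_freeze_thaw: int  # hypothetical limit; set from validated analyte stability data
    freeze_thaw_cycles: int = 0
    history: list = field(default_factory=list)

    def record_thaw(self, reason):
        """Count one freeze-thaw cycle each time the aliquot is thawed."""
        self.freeze_thaw_cycles += 1
        self.history.append(reason)

    @property
    def fit_for_use(self):
        """False once the aliquot has exceeded its validated number of cycles."""
        return self.freeze_thaw_cycles <= self.max_freeze_thaw


a = Aliquot("A-0001", max_freeze_thaw=2)
for reason in ("QC check", "primary assay", "re-run"):
    a.record_thaw(reason)
    print(reason, a.freeze_thaw_cycles, a.fit_for_use)
```

Splitting samples into single-use aliquots at processing time avoids most repeated cycles in the first place.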

Our multi-center study is experiencing protocol deviations. How can we improve adherence?

Protocol deviations often stem from an overly complex or ambiguous protocol. To improve adherence, focus on simplification, clarity, and centralized monitoring.

- Simplify and Focus the Protocol: A protocol that tries to answer too many questions can become unworkably burdensome, leading to poor adherence and patient withdrawals. Focus on a primary, carefully identified objective [18].

- Ensure Unanimous Agreement: All principal investigators on the steering committee must reach unanimity on the final protocol. A majority decision is insufficient where professional ethics and scientific conviction are concerned [18].

- Leverage Your Coordinating Center: The coordinating center should monitor data for quality and periodically edit and analyze it. It is also responsible for detecting major drops in participation level at any clinical unit, allowing for timely intervention [18].

- Use Digital Management Tools: Modern technology platforms can streamline multisite management by providing real-time insights into site progress, enabling seamless document exchange, and ensuring workflow standardization across all sites [23].
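The coordinating center's task of detecting major drops in participation can be partly automated. A minimal sketch, assuming weekly enrollment counts per site (oldest first); the function name, the window lengths, and the 50% drop threshold are hypothetical parameters to be agreed by the steering committee. Comparing each site with its own recent history avoids penalizing sites that are simply smaller.

```python
def flag_enrollment_drops(weekly_counts, baseline_weeks=4, recent_weeks=2, drop_fraction=0.5):
    """Return sites whose mean enrollment over the last `recent_weeks` fell below
    (1 - drop_fraction) times their mean over the preceding `baseline_weeks`.

    weekly_counts: dict mapping site name -> list of weekly counts, oldest first.
    """
    flagged = []
    for site, counts in weekly_counts.items():
        if len(counts) < baseline_weeks + recent_weeks:
            continue  # not enough history to judge this site yet
        baseline = counts[-(baseline_weeks + recent_weeks):-recent_weeks]
        recent = counts[-recent_weeks:]
        base_mean = sum(baseline) / baseline_weeks
        recent_mean = sum(recent) / recent_weeks
        if base_mean > 0 and recent_mean < (1 - drop_fraction) * base_mean:
            flagged.append(site)
    return sorted(flagged)


weekly = {
    "Site A": [5, 6, 5, 4, 5, 6],
    "Site B": [6, 5, 6, 7, 2, 1],
    "Site C": [1, 2],
}
print(flag_enrollment_drops(weekly))
```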

What quality control procedures must our biobank implement according to international standards?

ISO 20387:2018 specifies that a biobank must define, document, and implement quality control (QC) procedures for its processes and data [22]. The biobank must define a minimum set of QC procedures to be performed on the biological material and associated data [22].

Table: ISO 20387 Quality Control Requirements

| Focus Area | QC Requirements |
|---|---|
| Processes | Establish procedures specifying QC activities throughout all biobanking processes. Define QC criteria corresponding to predefined specifications to demonstrate fitness for purpose [22]. |
| Data | Define the type and frequency of QC performed on data, focusing on accuracy, completeness, and consistency [22]. |
| Biosafety & Biosecurity | Implement procedures to ensure compliance with biosafety (preventing unintentional exposure/release) and biosecurity (preventing loss, theft, or misuse) [22]. |

How should we handle the return of individual research results from a biobank?

The return of individual research results (IRR) is a complex issue. Before offering results, a biobank must overcome significant practical challenges related to quality, validity, and operations [24].

- Analytical Validity and Quality Control: Research tests must be repeated using a clinical standard methodology in a CLIA-certified or similarly accredited laboratory to ensure analytical validity. Research labs and biobanks typically lack this certification [24].

- Sample Tracking and Identity Confirmation: Rigorous quality-assurance procedures are needed to ensure the sample tested actually came from the correct participant. The risk of misidentification may be higher in a research setting than in a clinical lab [24].

- Clinical Validity and Utility: Even if a result is analytically valid, its clinical significance (clinical validity) and actionability (clinical utility) must be well-established. Returning results of unknown significance can cause unnecessary harm and anxiety [24].

- Operational Burden and Informed Consent: The process of re-contacting participants, providing appropriate counseling, and managing the resulting healthcare inquiries requires significant resources and should be anticipated in the original informed consent process [24] [22].

The Scientist's Toolkit: Essential Research Reagent Solutions

Table: Key Reagents for Standardized Biospecimen Processing

| Reagent / Material | Critical Function |
|---|---|
| Viral Transport Medium | Used with nasopharyngeal swabs to maintain virus viability for isolation and RT-PCR analysis [20]. |
| EDTA Tubes | Collection tubes containing EDTA as the anticoagulant; used to collect whole blood for peripheral blood mononuclear cell (PBMC) isolation and subsequent virus isolation [20]. |
| Standardized Filter Paper | For collecting dried blood spots (DBS); must be high-quality (e.g., Whatman 903) and marked with circles for standardized blood deposition [20]. |
| Sterile Transport Medium / PBS | For resuspending urine sediment pellets or nasopharyngeal samples to preserve specimens during storage and shipment [20]. |
| Cryogenic Labels | Designed to withstand long-term storage in liquid nitrogen vapor or ultra-low freezers without degrading or detaching, ensuring sample identity [25]. |

What are the key statistical reporting guidelines we must follow for publication?

Adherence to standardized reporting guidelines is crucial for the transparency, reproducibility, and reliability of published biomedical research [26].

The following workflow outlines the selection of key guidelines:

Key statistical elements to report, as per the SAMPL (Statistical Analyses and Methods in the Published Literature) guidelines, include [26]:

- Randomization: Report the specific method used (e.g., computer-generated random numbers). Do not use the term loosely [26].

- Blinding and Allocation Concealment: Clearly distinguish between blinding (who was unaware of the treatment) and allocation concealment (the method, e.g., sealed envelopes, used to prevent foreknowledge of group assignment) [26].

- Sample-Size Estimation: Report all parameters used in the calculation (e.g., estimates, alpha, power), the source of these estimates, and the formula or software used [26].

- Statistical Analysis: Describe the appropriateness of each statistical test used, the software package, how outlying data and missing data were handled, and clearly distinguish between pre-specified primary analyses and post-hoc exploratory analyses [26].
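To make the sample-size item concrete, here is a hedged sketch of the common normal-approximation formula for comparing two means with equal group sizes, n per group = 2 × (z(1−α/2) + z(1−β))² × σ² / δ², where δ is the difference to detect and σ the common standard deviation. The function name is illustrative; a t-based calculation or dedicated software will give slightly larger numbers, and whichever method you use is what SAMPL asks you to report.

```python
import math
from statistics import NormalDist


def n_per_group(delta: float, sd: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group n for a two-sided comparison of two means.

    Normal approximation, equal group sizes, common standard deviation `sd`,
    and a minimum relevant difference `delta`.
    """
    if delta <= 0 or sd <= 0:
        raise ValueError("delta and sd must be positive")
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)    # two-sided critical value
    z_beta = z.inv_cdf(power)             # quantile for the desired power
    n = 2 * ((z_alpha + z_beta) * sd / delta) ** 2
    return math.ceil(n)                   # always round up


# Report every input alongside the result, as SAMPL asks.
params = {"delta": 5.0, "sd": 10.0, "alpha": 0.05, "power": 0.80}
print(params, "->", n_per_group(**params), "per group")
```

For example, detecting a 5-unit difference with an SD of 10 at α = 0.05 and 80% power gives 63 per group under this approximation; the source of the SD estimate should be reported as well.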

From Theory to Practice: A Step-by-Step Guide to Standardized Protocols

This technical support article provides the foundational knowledge and practical tools to design a robust pre-collection plan, ensuring the integrity of your samples from the moment they are obtained.

Troubleshooting Guide: Common Pre-Collection & Sample Handling Issues

Q: My coagulation samples are frequently rejected by the lab for being "clotted" or "under-filled." What is the root cause and how can I prevent this?

A: This typically indicates an issue with the blood-to-anticoagulant ratio or improper mixing [27].

- Prevention: Ensure light blue-top (sodium citrate) tubes fill completely, until the tube's vacuum is exhausted, so that the correct blood-to-anticoagulant ratio is achieved [27]. Immediately after venipuncture, invert the tube gently 3 to 6 times using complete end-over-end inversions to ensure adequate mixing and prevent clotting [27] [28].
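The 9:1 blood-to-citrate ratio explains why under-filled tubes are rejected: the citrate volume in the tube is fixed, so a short draw leaves the sample over-anticoagulated. A minimal sketch, assuming a tube with a 2.7 mL nominal draw and 0.3 mL of citrate, and a hypothetical 90% minimum fill threshold (use your laboratory's own acceptance criterion):

```python
def citrate_fill_check(blood_ml: float, nominal_blood_ml: float = 2.7,
                       citrate_ml: float = 0.3, min_fill: float = 0.90) -> dict:
    """Compare the actual draw with the tube's nominal draw volume.

    Defaults are assumptions: a 2.7 mL draw tube with 0.3 mL citrate (9:1 when full)
    and a hypothetical 90% minimum fill; substitute your tube and lab criteria.
    """
    ratio = blood_ml / citrate_ml           # 9.0 means the intended 9:1 ratio
    fill = blood_ml / nominal_blood_ml      # fraction of the nominal draw achieved
    return {
        "blood_to_citrate": round(ratio, 2),
        "fill_fraction": round(fill, 3),
        "acceptable": fill >= min_fill,
    }


print(citrate_fill_check(2.7))   # full tube
print(citrate_fill_check(2.0))   # short draw: relatively more citrate per mL of blood
```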

Q: When drawing blood from an indwelling catheter, my coagulation results are inconsistent. What could be contaminating the sample?

A: Contamination from heparin, saline, or tissue fluids is a common risk with catheters [27] [28].

- Prevention: Flush the line with 5 mL of saline and discard the first 5 mL of blood or six dead space volumes of the catheter before collecting the sample for coagulation testing [27]. For winged collection sets, discard the first tube (neutral or citrate) before filling the coagulation tube [28].

Q: My plasma samples were rejected for having high platelet counts despite centrifugation. How can I ensure Platelet-Poor Plasma (PPP)?

A: A single centrifugation step may be insufficient. Incomplete platelet removal affects tests such as lupus anticoagulant and heparin assays [28].

- Prevention: Implement a double centrifugation protocol [27] [28]. After the first centrifugation, transfer the plasma to a new plastic tube using a plastic pipette, and re-centrifuge the sample. When transferring the plasma a second time, take care not to include any residual platelets [28].

Q: How should I handle sample transport and storage to avoid activation of coagulation factors?

A: Improper temperature is a key risk.

- Prevention: Transport samples at room temperature (15–25°C) and keep the tube vertical [28]. Do not transport on ice or in a refrigerated state (2-8°C), as this can cause cold activation of some factors [28]. Process or freeze plasma within 4 hours of collection for most tests [27] [28].
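The transport rules above reduce to two checks: elapsed time before processing and the temperature range during transit. A sketch under the stated assumptions (4 hours, 15 to 25°C); the function name and issue codes are hypothetical, and tests with a different stability window would need their own limit:

```python
from datetime import datetime, timedelta

MAX_PROCESSING_DELAY = timedelta(hours=4)  # "within 4 hours of collection for most tests"
TRANSPORT_RANGE_C = (15.0, 25.0)           # room-temperature transport range from the text


def check_transport(collected: datetime, processed: datetime, temps_c: list) -> list:
    """Return issue codes for one coagulation sample; an empty list means no issues."""
    issues = []
    if processed - collected > MAX_PROCESSING_DELAY:
        issues.append("processed_after_4h")
    low, high = TRANSPORT_RANGE_C
    if any(t < low for t in temps_c):
        issues.append("below_15C_cold_activation_risk")   # e.g. shipped on ice
    if any(t > high for t in temps_c):
        issues.append("above_25C")
    return issues


t0 = datetime(2025, 3, 1, 8, 0)
print(check_transport(t0, t0 + timedelta(hours=5), [21.0, 4.0]))
```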

Pre-Collection Planning Checklist

A successful collection begins long before the sample is taken. Use this checklist to ensure all planning aspects are covered.

- Define Clear Objectives: Determine what you need to learn from the data to guide the entire collection strategy [29].

- Describe Sampling Procedures: Document the sample volume to be collected, required anticoagulant, collection and storage containers, and processing details (e.g., centrifugation time, force, temperature) in the study protocol or laboratory manual [15].

- Establish Sample Labeling Protocol: Use a unique identifier for all samples. Avoid handwritten labels. Minimum information should include protocol number, subject number, visit/time, matrix, and the unique ID [15].

- Plan for Sample Splitting: If sample volume permits, split the sample into two portions (e.g., Set 1 and Set 2) to provide a backup aliquot [15].

- Develop a Chain of Custody Plan: Maintain a record of the sample's location and storage conditions throughout its entire life cycle, ideally using a Laboratory Information Management System (LIMS) [15].

- Validate Equipment and Methods: Conduct pilot tests to identify potential issues and refine data collection methods before full-scale implementation [29].
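The labeling and splitting items can be combined: each aliquot set gets its own printed label carrying the minimum fields and a unique ID. A minimal sketch; the `SampleLabel` class, the field order, the "|" separator, and the random ID format are all assumptions standing in for whatever your LIMS actually assigns:

```python
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)
class SampleLabel:
    protocol: str
    subject: str
    visit: str
    matrix: str
    aliquot_set: int
    unique_id: str

    def text(self) -> str:
        # Minimum fields from the checklist; the order and "|" separator are assumptions.
        return "|".join([self.protocol, self.subject, self.visit, self.matrix,
                         f"SET{self.aliquot_set}", self.unique_id])


def make_split_labels(protocol: str, subject: str, visit: str, matrix: str,
                      n_sets: int = 2) -> list:
    """One label per aliquot set (Set 1 primary, Set 2 backup), each with its own ID.

    A random 12-character ID stands in for the ID a LIMS would normally assign.
    """
    return [
        SampleLabel(protocol, subject, visit, matrix, s, uuid.uuid4().hex[:12].upper())
        for s in range(1, n_sets + 1)
    ]


for label in make_split_labels("PRT-001", "0042", "V3-T0", "PLASMA"):
    print(label.text())
```

Printing the label text from structured fields, rather than typing it, is one way to honor the "avoid handwritten labels" rule.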

The Scientist's Toolkit: Essential Research Reagent Solutions

The following table details key materials and their functions for proper sample collection in coagulation and general bioanalysis.

| Item | Function & Application |
|---|---|
| Light Blue-Top Vacutainer Tube (3.2% Sodium Citrate) | The standard collection tube for plasma-based coagulation testing. When filled to its nominal draw volume, it provides the required ratio of nine parts blood to one part citrate for accurate results [27]. |
| Plastic Transfer Pipettes | Used for transferring plasma after centrifugation. Plastic is recommended to minimize the risk of activating the coagulation cascade [27]. |
| Non-Activating Plastic Centrifuge Tubes | Essential for storing plasma after processing. These tubes ensure that the plasma does not come into contact with activating surfaces, preserving sample integrity [28]. |
| ZL6 Data Logger / ZENTRA Cloud | While specific to environmental data, this illustrates the importance of real-time data collection and monitoring. For sample management, this translates to temperature monitoring systems for storage units to ensure analyte stability [30] [15]. |
| TEROS Borehole Installation Tool | While used for soil sensor installation, it exemplifies the critical nature of proper installation tools for accuracy. In a lab context, this underscores the need for validated tools and precise techniques, such as using the correct needle gauge (19-22 gauge) for venipuncture to prevent hemolysis [30] [28]. |

Standardized Protocols & Data Tables

Sample Collection & Handling Parameters

Adhering to standardized parameters is critical for maintaining sample integrity. The tables below summarize key requirements.

Table 1: Sample Collection Specifications

| Parameter | Recommendation | Potential Risk of Non-Compliance |
|---|---|---|
| Needle Gauge | 19-22 gauge (23 gauge acceptable for pediatric/compromised veins) [28] | Hemolysis, sample contamination [28] |
| Tourniquet Time | Release immediately when first tube starts to fill (<1 minute) [28] | Hemolysis, activation of fibrinolysis, acidosis [28] |
| Tube Mixing | 3-6 complete end-over-end inversions immediately after collection [27] [28] | Improper anticoagulant mixing, sample clotting, microclots [27] [28] |
| Sample Stability (Room Temp) | 4 hours for most routine tests [28] | Activation of coagulation factors, false results [28] |

Table 2: Centrifugation & Storage Specifications

| Parameter | Recommendation | Potential Risk of Non-Compliance |
|---|---|---|
| Centrifugation (Standard) | 1,500 × g, 15 minutes, room temperature [28] | Platelet contamination, false results in lupus anticoagulant/heparin assays [28] |
| Plasma Processing Time | Within 4 hours of collection (except prothrombin time, PT) [27] | Degradation of analytes, loss of sample viability [27] |
| Frozen Storage (-20°C) | Maximum 2 weeks [28] | Analyte instability, loss of data integrity [28] |
| Frozen Storage (-70°C) | 6 to 12 months [28] | Analyte instability, loss of data integrity [28] |
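Centrifugation is specified as relative centrifugal force (× g), but many benchtop centrifuges are set in rpm. The standard conversion is RCF = 1.118 × 10⁻⁵ × r × rpm², with r the rotor radius in centimeters. The sketch below converts the 1,500 × g setting for a hypothetical 10 cm rotor and checks frozen-storage duration against the Table 2 limits; using the conservative 6-month end of the -70°C range (approximated as 180 days) is an assumption:

```python
import math

RCF_CONSTANT = 1.118e-5  # RCF = 1.118e-5 * radius_cm * rpm**2


def rpm_for_rcf(rcf_g: float, radius_cm: float) -> int:
    """Rotor speed (rpm) that gives the requested RCF at the given rotor radius."""
    return round(math.sqrt(rcf_g / (RCF_CONSTANT * radius_cm)))


# Frozen-storage limits from Table 2, in days. The -70 C entry uses the conservative
# 6-month end of the 6-12 month range (assumption; 180 days approximates 6 months).
STORAGE_LIMIT_DAYS = {-20: 14, -70: 180}


def storage_ok(temp_c: int, days_stored: int) -> bool:
    """True if a sample stored at temp_c for days_stored is within the limit."""
    limit = STORAGE_LIMIT_DAYS.get(temp_c)
    if limit is None:
        raise ValueError(f"no storage limit defined for {temp_c} C")
    return days_stored <= limit


print(rpm_for_rcf(1500, 10.0))  # hypothetical 10 cm rotor radius
```

Always take the radius from the rotor's documentation; the same RCF needs a different rpm on every rotor.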

Experimental Workflow for Standardized Plasma Sample Collection

The following diagram illustrates the critical path from patient to analysis, highlighting key decision points to ensure sample quality and standardization.

Key Best Practices for Standardization

- Define Clear Objectives: The foundation of any successful collection is a clear understanding of what you need to learn from the data. This guides the entire strategy, from sample volume to analysis methods [29].

- Document Everything: Sample collection procedures must be explicitly described in the study protocol or a laboratory manual. This includes the volume of the sample to be collected, the required anticoagulant, collection and storage containers, and labeling requirements with a unique identifier [15].

- Maintain Chain of Custody: A record of the sample's location and storage conditions must be maintained throughout its entire life cycle—from collection to disposal. This is critical for proving sample integrity and is best managed using a Laboratory Information Management System (LIMS) where available [15].

- Plan for the Entire Lifecycle: Pre-collection planning must encompass more than just the draw. Consider and document procedures for processing, temporary storage at the collection site, shipment to the analytical laboratory, and final storage or disposal [15].

- Implement Quality Controls: A quality control process should be in place at the collection site to ensure documented procedures are followed. Any discrepancies must be reported immediately [15].
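A LIMS normally handles chain of custody, but the underlying idea is an append-only record of each sample's location and storage condition. The sketch below shows one hypothetical way to make such a log tamper-evident by chaining each event to the hash of the previous one; the `CustodyLog` class is illustrative and does not describe how any particular LIMS works:

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime


def _digest(event: dict) -> str:
    # Canonical JSON (sorted keys) so the same event always hashes the same way.
    return hashlib.sha256(json.dumps(event, sort_keys=True).encode()).hexdigest()


@dataclass
class CustodyLog:
    sample_id: str
    events: list = field(default_factory=list)

    def record(self, location: str, condition: str, actor: str, when: datetime) -> None:
        """Append one custody event, chained to the previous event's hash."""
        prev = self.events[-1]["hash"] if self.events else ""
        event = {"sample_id": self.sample_id, "location": location,
                 "condition": condition, "actor": actor,
                 "time": when.isoformat(), "prev": prev}
        event["hash"] = _digest(event)
        self.events.append(event)

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered event breaks it."""
        prev = ""
        for event in self.events:
            body = {k: v for k, v in event.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != event["hash"]:
                return False
            prev = event["hash"]
        return True


log = CustodyLog("S-0001")
log.record("Site 01 clinic", "room temperature", "phlebotomist A", datetime(2025, 3, 1, 8, 5))
log.record("Site 01 freezer F2", "-70 C", "technician B", datetime(2025, 3, 1, 10, 40))
print(log.verify(), log.events[-1]["location"])
```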

In the fields of biomedical research and drug development, the integrity of experimental data is fundamentally dependent on the quality of the collected samples. Standardization of sample collection and storage is not merely a procedural formality but a critical scientific prerequisite. The emergence of research on extracellular vesicles and RNA, for instance, has highlighted that technical standardization is of central importance because the influence of disparate isolation and analysis techniques on downstream results remains unclear [31].

Aseptic technique is a set of strict procedures that healthcare providers and researchers use to prevent the spread of germs that cause infection [32]. According to the Centers for Disease Control and Prevention (CDC), over 2 million patients in the United States contract a healthcare-associated infection annually, underscoring the vital importance of these infection control measures [33]. In laboratory and clinical settings, consistent application of aseptic technique protects both sample integrity and personnel, ensuring that research outcomes are reliable, reproducible, and uncontaminated by external variables.

Fundamental Principles of Aseptic Technique

Defining Aseptic vs. Sterile vs. Clean

Understanding the distinction between related terms is crucial for proper technique implementation:

- Clean: This refers to items that are free from dirt, stains, and other debris but are not sterile. An unused glove from a box is clean but not sterile, meaning it may still carry some microorganisms [32]. Clean techniques reduce the overall number of germs but do not eliminate them completely.

- Aseptic: This refers to a stricter standard of infection control aimed at eliminating pathogens completely. Providers wear sterile gloves rather than clean gloves and use sterile drapes and instruments to create a barrier against contamination [32].

- Sterile: This term describes an absolute state of being free from all living microorganisms. Healthcare providers usually use "aseptic" to describe techniques and procedures and "sterile" to describe settings and instruments. For example, a provider uses aseptic techniques to create a sterile environment [32].

In laboratory practice, the difference is subtle but vital: sterilization creates the contamination-free zone, while aseptic technique maintains it [34].

Core Elements of Aseptic Technique

Aseptic techniques rely on four fundamental elements [32]:

- Tool and Patient/Subject Preparation: All tools or instruments must be sterilized, typically using methods like steam sterilization (autoclaving). For human patients, providers apply antiseptic to the skin to reduce germs.

- Barriers: The use of masks, gowns, and gloves creates a protective barrier that prevents cross-contamination.

- Contact Guidelines: Personnel must follow strict sterile-to-sterile contact rules, meaning they wear sterile protective gear and only touch sterile items.

- Environmental Controls: This involves measures to reduce germs in the environment, such as keeping doors closed during surgical procedures or using laminar flow hoods.

Proper Use of Personal Protective Equipment (PPE)

PPE forms an immediate protective barrier between the personnel and the hazardous agent, protecting both the researcher and the sample [35].

Essential PPE Components

- Gloves: Gloves protect both patients and healthcare personnel from exposure to infectious material that may be carried on the hands [33]. In a cell culture lab, gloves should be changed when contaminated and disposed of properly [35].

- Lab Coats or Gowns: A clean lab coat should be worn to prevent contamination from street clothes [34]. For higher-risk procedures, a sterile fluid-resistant gown may be necessary.

- Eye Protection: Safety glasses or goggles protect eyes from chemical splashes and accidental aerosols [35].

- Masks/Respirators: Masks are used for droplet precautions, while fit-tested N-95 respirators are required for airborne precautions [33].

PPE Donning and Doffing Sequence

The order of putting on (donning) and removing (doffing) PPE is critical to prevent self-contamination. The following diagram illustrates the proper workflow:

Sterile Equipment and Work Area Management

Instrument Sterilization

Instruments must be sterilized before they are used for aseptic procedures. The most common method is steam sterilization in an autoclave [32]. After use, instruments first need to be cleaned using a sterile brush in sterile water (not saline) to remove organic material [36]. It is important to note that briefly immersing instruments in alcohol is not an effective means of sterilization [36].

Maintaining a Sterile Work Area

A major requirement is maintaining an aseptic work area restricted to cell culture work [35].

- Biosafety Cabinet (BSC): The primary tool for cell culture work, a BSC creates a sterile working environment by continuously filtering air through a HEPA filter [34]. It should be turned on for at least 15 minutes before use to allow airflow stabilization [34].

- Work Surface Disinfection: Before and after work, the interior surfaces of the BSC must be thoroughly wiped with 70% ethanol [35] [34].

- Workflow Management: All necessary materials should be arranged strategically within the hood before beginning, keeping items at least six inches from the front grille to avoid disrupting airflow [34]. The work surface should be uncluttered and not used as a storage area [35].

Troubleshooting Guides for Common Aseptic Technique Issues

Contamination Identification and Resolution

| Contamination Type | Visual Signs | Common Sources | Corrective Actions |
|---|---|---|---|
| Bacterial | Cloudy, turbid culture medium; tiny, shimmering specks under microscope [34]. | Non-sterile reagents, contaminated surfaces, improper glove technique [35]. | Quarantine and discard culture. Review hand hygiene and surface disinfection. Use fresh, aliquoted reagents [34]. |
| Fungal/Yeast | Fuzzy, off-white/black surface growth; small, refractile spheres in medium [34]. | Airborne spores, skin contact with plate, unclean incubators [35]. | Discard culture. Clean incubators and BSC thoroughly. Ensure all plates are stored in sterile re-sealable bags [35]. |
| Mycoplasma | No visible turbidity; subtle effects on cell growth/metabolism [34]. | Fetal bovine serum, cross-contamination from infected cultures [34]. | Quarantine and discard culture. Regular testing of cell stocks and reagents is essential [34]. |
| Cross-Contamination | Unusual cell morphology or growth patterns [35]. | Using same pipette for different cell lines, unsterile equipment [35]. | Use a sterile pipette only once. Have dedicated media and reagents for each cell line [35]. |

Procedural Failures

| Problem | Potential Cause | Prevention Strategy |
|---|---|---|
| Consistent contamination in all cultures | Contaminated common reagent or media [35]. | Aliquot reagents into smaller, single-use volumes. Test new lots of reagents before full use [34]. |
| Sporadic contamination | Breach in personal technique, disrupted airflow in BSC [34]. | Minimize rapid arm movements in BSC. Do not talk, sing, or whistle during procedures [35]. |
| Contamination after successful subculture | Unsterile equipment or surface [35]. | Ensure thorough disinfection of work surfaces with 70% ethanol before and after work [35] [34]. |
| Ineffective instrument sterilization | Reliance on alcohol immersion instead of validated methods [36]. | Use autoclaving for initial sterilization. For batch procedures, use a hot bead sterilizer between samples [36]. |

Frequently Asked Questions (FAQs)

Q1: What is the most critical step of aseptic technique for cell culture? While all steps are important, the most critical element is the consistent use of the biosafety cabinet and the meticulous disinfection of all surfaces and materials with 70% ethanol before starting work. This establishes and maintains the sterile field [34].

Q2: Is it necessary to flame the neck of a bottle during aseptic procedures? It depends on where you are working. On an open bench, flaming the neck of a sterile bottle or flask is a standard aseptic step: the heat creates an upward convection current that helps keep airborne microorganisms from entering the container while it is open [34]. Inside a modern biosafety cabinet, however, flaming is not recommended because the flame disrupts the laminar airflow [35].

Q3: How do I know if my cell culture is contaminated? Visible signs include a cloudy or turbid appearance of the medium (bacteria), fuzzy spots or growth on the surface (fungi), or an unusual change in medium pH. For insidious contaminants like mycoplasma, which do not cause visible changes, regular testing is required [34].

Q4: Why is 70% ethanol the preferred disinfectant instead of 100%? 70% ethanol is more effective for microbial control because the presence of water slows evaporation, allowing for longer contact time and better penetration through the microbial cell wall.
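Preparing 70% ethanol from a higher-strength stock is a C1V1 = C2V2 dilution. A minimal sketch, assuming all percentages are v/v; because ethanol and water contract slightly on mixing, measure the calculated stock volume and then bring it up to the final volume with water rather than adding a precomputed volume of water:

```python
def stock_volume_ml(target_pct: float, final_ml: float, stock_pct: float) -> float:
    """Volume of stock needed for `final_ml` at `target_pct` (v/v), from C1V1 = C2V2."""
    if not 0 < target_pct <= stock_pct:
        raise ValueError("target must be positive and no stronger than the stock")
    return target_pct * final_ml / stock_pct


# 1 L of 70% ethanol from 95% stock: measure the stock, then make up to 1 L with water.
print(round(stock_volume_ml(70, 1000, 95), 1))
```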

Q5: Can I wear sterile gloves for multiple procedures? No. Gloves should be changed between procedures, when moving from a contaminated to a clean body site on a patient, and after touching potentially contaminated surfaces or equipment [33] [35].

Aseptic Technique Workflow: From Preparation to Execution

The following diagram provides a comprehensive overview of the key stages and decision points in a standardized aseptic collection procedure, integrating PPE use, workspace management, and sterile handling.

Essential Research Reagent Solutions and Materials

The following table details key materials and their functions essential for maintaining asepsis in a research setting.

| Item | Function | Key Consideration |
|---|---|---|
| 70% Ethanol | Gold standard for surface disinfection of work areas and equipment [35] [34]. | More effective than 100% ethanol due to better microbial penetration. |
| Sterile Disposable Pipettes | For manipulating liquids without introducing contaminants [35]. | Use each pipette only once to avoid cross-contamination. |
| Autoclave | Provides steam sterilization for instruments, glassware, and solutions [32]. | Validate sterilization cycles regularly. Use indicators to confirm sterility. |
| Biosafety Cabinet (BSC) | Provides a HEPA-filtered sterile work environment for procedures [35] [34]. | Must be certified annually. Run for 15+ minutes to purge airborne particles. |
| Personal Protective Equipment (PPE) | Forms a barrier against shed skin, dirt, and microbes from the researcher [35]. | Includes gloves, lab coats, and safety glasses. Change gloves frequently. |
| Sterile Culture Vessels/Media | Sterile consumables for cell growth and manipulation. | Ensure integrity of packaging. Discard if packaging is damaged. |
| Hot Bead Sterilizer | For decontaminating microsurgical instrument tips between animals in batch procedures [36]. | Follow manufacturer's instructions for exposure time. Not a substitute for initial autoclaving. |

FAQs and Troubleshooting Guides

This section addresses common challenges researchers face when implementing and using barcode and QR code systems in the laboratory.

FAQ 1: How do I choose between a 1D barcode and a 2D code for my samples?

The choice depends on your data requirements and the physical space available on your labware [37].

- Solution: The following table outlines the key differences to guide your selection:

| Feature | 1D Barcodes (e.g., Code 128, Code 39) | 2D Barcodes (e.g., Data Matrix, QR Code) |
|---|---|---|
| Data Capacity | Limited (typically 20-25 characters) [38] | High (up to 7,089 numeric characters) [38] |
| Data Type | Primarily numbers and letters [37] | Alphanumeric, binary, URLs, and more [38] [37] |
| Space Required | Requires more horizontal space | Stores more data in a compact area [37] |
| Common Uses | Labeling lab equipment, general inventory [37] | Small vials, sample tubes, linking to detailed digital records [37] |
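The table's selection criteria can be written as a simple rule of thumb. The 20-character limit below is taken from the conservative end of the table's guidance, and the `suggest_symbology` function is hypothetical; real limits depend on symbology settings, label width, and scanner:

```python
import string

ONE_D_MAX_CHARS = 20  # conservative end of the ~20-25 character guidance (assumption)
PRINTABLE = set(string.printable) - set("\t\n\r\x0b\x0c")


def suggest_symbology(payload: str, small_container: bool) -> str:
    """Hypothetical rule of thumb derived from the comparison table above."""
    fits_1d = len(payload) <= ONE_D_MAX_CHARS and all(c in PRINTABLE for c in payload)
    if small_container or not fits_1d:
        return "2D (Data Matrix or QR)"
    return "1D (Code 128)"


print(suggest_symbology("S-000123", small_container=False))
print(suggest_symbology("https://example.org/s/ABC123456789", small_container=False))
```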

FAQ 2: My scanner cannot read the barcodes on my samples. What is wrong?

This is a common issue often related to the quality of the printed code or the scanning environment [37].

- Troubleshooting Guide:

- Check the Quiet Zone: Ensure there is a clear, unobstructed margin around the barcode. This "quiet zone" is essential for the scanner to distinguish the code from its surroundings [38] [37].

- Verify Contrast: The code must have high contrast between the foreground and background (e.g., black on white). Avoid color combinations that blend together, as this is a frequent cause of scan failures [39] [37].

- Assess for Damage: Look for smudging, tearing, or fading on the label. If the code is physically damaged, it may not be scannable [37].

- Confirm Size and Density: If the code is too small or has too much information encoded in a small space, scanners may fail to read it. Ensure the code is printed at a sufficient size for your scanner [39].
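Size and quiet zone can be checked before printing. For QR codes, a version V symbol is 17 + 4V modules on a side, and the QR specification calls for a quiet zone of 4 modules on every side. The sketch below computes printed width from module size; the minimum module size your scanner can resolve is a hypothetical input that must come from the scanner's documentation:

```python
def qr_print_width_mm(version: int, module_mm: float, quiet_modules: int = 4) -> float:
    """Printed width of a QR symbol, including the quiet zone on both sides."""
    if not 1 <= version <= 40:
        raise ValueError("QR versions run from 1 to 40")
    modules = 17 + 4 * version                    # symbol size in modules
    return (modules + 2 * quiet_modules) * module_mm


def fits_label(version: int, module_mm: float, label_mm: float, min_module_mm: float) -> bool:
    """True if modules are large enough to scan and the symbol fits the label."""
    return module_mm >= min_module_mm and qr_print_width_mm(version, module_mm) <= label_mm


print(qr_print_width_mm(1, 0.25))   # smallest QR version at 0.25 mm per module
```

Encoding more data raises the version and therefore the width, which is why a dense code on a small tube label tends to force modules below what the scanner can read.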

FAQ 3: What is a serialized QR code, and why would I use it for sample management?

A serialized QR code is a unique code on each individual sample, containing a unique identifier string in its embedded URL [40].

- Solution: Serialization is critical for unit-level traceability, which is a cornerstone of standardization. It allows you to:

- Track Individual Samples: Follow a single sample's journey from collection through analysis, which is vital for audits and reproducibility [40].

- Prevent Counterfeiting: Authenticate unique samples, as a code scanned multiple times in disparate locations can indicate a counterfeit [40].

- Manage Data Precisely: Associate specific data, like experimental conditions or patient information, with one specific sample rather than a batch [40].
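A minimal sketch of serialization and of the "same code, different places" signal described above. The resolver URL is a placeholder, the 12-character hexadecimal serial format is an assumption, and in a research setting a second location may simply mean the sample was shipped, so the hypothetical `ScanMonitor` only flags a code for review:

```python
import secrets
from collections import defaultdict

BASE_URL = "https://example.org/s/"  # hypothetical resolver URL


def make_serials(n: int) -> list:
    """Generate n distinct serialized URLs with random 12-hex-character serials."""
    serials = set()
    while len(serials) < n:
        serials.add(secrets.token_hex(6).upper())
    return [BASE_URL + s for s in sorted(serials)]


class ScanMonitor:
    """Tracks where each serialized code has been scanned."""

    def __init__(self):
        self.locations = defaultdict(set)

    def scan(self, url: str, location: str) -> bool:
        """Record a scan; return True if the code has now been seen at more than one site."""
        self.locations[url].add(location)
        return len(self.locations[url]) > 1


urls = make_serials(3)
monitor = ScanMonitor()
print(urls[0], monitor.scan(urls[0], "Lab A"), monitor.scan(urls[0], "Lab B"))
```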

FAQ 4: The data linked to my QR code needs to be updated. Can I change it without reprinting all my labels?

Yes, this is a key advantage of using QR codes for sample labeling.

- Solution: If the QR code encodes a URL that points to a digital record, you can update the information on the webpage or database entry that the URL leads to without ever changing the physical label [40]. This ensures that users scanning the code will always access the most current information, which is crucial for long-term sample storage [41].

FAQ 5: How can I ensure my barcoded labels will withstand harsh lab environments (e.g., freezers, liquid nitrogen, solvents)?

Label durability is a non-negotiable aspect of reliable sample management.

- Solution: The longevity of your labels depends on the materials used [37].

- Label Material: Choose durable label materials designed to withstand your laboratory's specific conditions, including exposure to extreme temperatures, moisture, and chemicals [37].

- Printing Technology: For applications with high wear and tear, consider laser etching the code directly onto the sample container, as this provides a permanent and durable mark [40].

The Scientist's Toolkit: Essential Materials for Implementation

The following table details key solutions and materials required for implementing a robust sample labeling system.

| Item | Function |
|---|---|
| Barcode/QR Code Generator Software | Creates the unique barcode or QR code images. Enterprise-grade solutions can generate serialized codes at scale via APIs or web tools [40]. |
| Thermal Transfer Printer | Prints high-resolution, durable labels that are resistant to smudging and fading, which is critical for data integrity [37]. |
| Durable Label Materials | Synthetic labels (e.g., polyester, polypropylene) withstand exposure to extreme temperatures, moisture, and chemical spills [37]. |
| Barcode Scanner | An electronic device that reads the barcodes. Can be handheld or integrated into an automated workflow (inline scanning) [40] [37]. |
| Laboratory Information Management System (LIMS) | The central software database that associates the unique identifier from each barcode with all sample metadata, enabling full traceability [40] [37]. |
| Inline Scanning System | Automated scanning hardware used on production or packaging lines to activate and verify codes and associate individual samples with their larger containers (aggregation) [40]. |

Experimental Protocol: Workflow for Implementing a Serialized QR Code System

This protocol provides a detailed methodology for implementing a unit-level sample tracking system using serialized QR codes, a key procedure for standardizing sample collection and storage research.

1. Experimental Design and Code Generation: Define the data structure for your unique identifiers. Use an enterprise QR code generator to create a unique QR code for each sample via a programmatic interface (API) or web tool. The embedded URL in each code should contain a unique serial number [40].

2. Label Printing and Affixing: Select a printer that supports variable data printing (VDP), such as a digital printer, as each label will be unique [40]. Use high-quality, durable label material suitable for your sample storage conditions (e.g., cryogenic-resistant labels for freezer storage) [37]. Affix labels consistently to sample containers, ensuring they are secure and easy to scan.

3. Sample Registration (Activation): Scan each sample's QR code in the laboratory to "activate" it within your database (e.g., LIMS). This links the physical sample to its digital record and is essential for billing and preventing unauthorized use of labels [40].

4. Data Association and Aggregation: In the digital record, log all relevant sample metadata (e.g., collection date, donor/patient ID, experimental conditions). For larger studies, implement an aggregation process: scan the serialized codes of individual samples and associate them with the QR or barcode on the box, crate, or pallet in which they are placed. This allows for tracking at the logistical unit level [40].

5. Quality Control and Verification: Implement a QC step to verify that all codes are scannable and correctly associated in the database. Use scanners to confirm data integrity upon sample retrieval or at any point in the experimental workflow [37].
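Steps 3 to 5 (activation, aggregation, and scan verification) can be modelled in a few lines. This in-memory `SampleRegistry` class is hypothetical and stands in for whatever LIMS tables you actually use:

```python
class SampleRegistry:
    """Hypothetical in-memory stand-in for LIMS activation and aggregation records."""

    def __init__(self):
        self.active = {}        # serial -> sample metadata
        self.container_of = {}  # serial -> container code (box, crate, pallet)

    def activate(self, serial: str, **metadata) -> None:
        """Step 3: link a scanned serial to its digital record exactly once."""
        if serial in self.active:
            raise ValueError(f"{serial} already activated")
        self.active[serial] = dict(metadata)

    def aggregate(self, container: str, serials: list) -> None:
        """Step 4: associate activated samples with the container they are packed in."""
        missing = [s for s in serials if s not in self.active]
        if missing:
            raise KeyError(f"not activated: {missing}")
        for s in serials:
            self.container_of[s] = container

    def contents(self, container: str) -> list:
        return sorted(s for s, c in self.container_of.items() if c == container)

    def verify_scan(self, scanned: list, container: str) -> dict:
        """Step 5: compare codes scanned from a container with the registry."""
        expected, seen = set(self.contents(container)), set(scanned)
        return {"missing": sorted(expected - seen), "unexpected": sorted(seen - expected)}


registry = SampleRegistry()
registry.activate("A1", donor_id="D1", collection_date="2025-03-01")
registry.activate("A2", donor_id="D2", collection_date="2025-03-01")
registry.aggregate("BOX-01", ["A1", "A2"])
print(registry.verify_scan(["A1", "X9"], "BOX-01"))
```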

The logical workflow for this protocol is as follows:

Troubleshooting Guides

Dried Blood Spot (DBS) Collection and Analysis

Problem: Low antibody recovery or false negative results from DBS elution.

- Potential Cause 1: Incomplete elution of analytes from the filter paper.

- Solution: Ensure the DBS punch is fully submerged in the elution buffer and shaken for a sufficient duration. One protocol specifies shaking at 240 rpm for one hour at room temperature [42].

- Potential Cause 2: Incorrect blood volume spotted or uneven saturation.

- Solution: Apply blood to fill the entire pre-printed circle on the DBS card (approximately 50 μL per spot) and ensure no white areas are visible before punching [42].

- Potential Cause 3: Inadequate drying or improper storage leading to sample degradation.

- Solution: Dry spots for at least 3 hours at room temperature. Store dried cards in sealed plastic pouches with desiccant sachets at -20°C to prevent moisture damage and analyte degradation [43].

Problem: High sample variability in quantitative DBS analysis.

- Potential Cause 1: Variable extracted blood volume due to differences in hematocrit or punch location.

- Solution: For methods requiring high precision, use a predefined punch from the center of a fully saturated spot and consider hematocrit assessment. Some methods use two ¼-inch diameter punches for analysis [42].

General Sample Storage and Quality Control

Problem: Unstable biomarker measurements in stored samples.

- Potential Cause 1: Suboptimal storage temperature or conditions.

- Solution: Implement a formal biobanking system. Store plasma/serum at -70°C or lower [43] [44]. For DBS, follow consistent drying and desiccant-packaged storage at -20°C [43].

- Potential Cause 2: Lack of quality control measures across multiple samples or batches.

- Solution: Establish a Quality Management System (QMS) for the biorepository. This includes standardized procedures for sample collection, processing, storage, and incident management to ensure data reproducibility and scientific credibility [44].

Frequently Asked Questions (FAQs)

Q1: What are the key advantages of using Dried Blood Spots (DBS) over venous blood collection in large-scale studies? DBS sampling offers several key advantages [43] [45]:

- Minimally Invasive: Utilizes finger- or heel-prick collection, reducing participant discomfort.

- Logistically Simpler: Does not require trained phlebotomists for collection.

- Easier Transport and Storage: DBS cards are stable at ambient temperatures during shipping and require less freezer space, as they can be stored at -20°C compared to the -70°C often required for plasma/serum [43]. The United States Postal Service considers them a Nonregulated Infectious Material [45].

Q2: How does the performance of DBS compare to plasma for serological assays like SARS-CoV-2 antibody detection? Studies demonstrate a strong correlation between DBS and plasma/serum. One study found a correlation of r=0.935 for IgG against the Receptor Binding Domain (RBD) and r=0.965 for IgG against the full-length spike protein of SARS-CoV-2 [43]. Another study using an EUA-approved immunoassay reported a 98.1% categorical agreement between self-collected DBS and venous serum, with a correlation (R) of 0.9600 [42].

Q3: What are critical pre-analytical factors to control when collecting DBS samples?

- Drying Time: Dry spots for a minimum of 3 hours at room temperature before storage [43] [42].

- Storage Conditions: Store dried cards in sealed plastic bags with desiccant to protect from atmospheric humidity [43].

- Card Integrity: Use high-quality filter cards and inspect each card to confirm that the spots are correctly applied and adequately filled [43].

Q4: Why is standardization critical in extracellular vesicle (EV) research from biofluids such as plasma?

EV research is challenging because vesicles are heterogeneous and many different methods are used to isolate and analyze them. Standardizing specimen handling, isolation techniques, and analysis is crucial for comparing results between studies and laboratories and for ensuring the validity of potential biomarkers [31].

Table 1: Correlation between Dried Blood Spot (DBS) and Plasma/Serum Samples for SARS-CoV-2 IgG Detection

| Specimen Comparison | Target Antigen | Correlation Coefficient (r or R) | Categorical Agreement | Citation |
|---|---|---|---|---|
| DBS vs. Plasma | RBD | r = 0.935 | - | [43] |
| DBS vs. Plasma | Full-length Spike | r = 0.965 | - | [43] |
| Self-collected DBS vs. Serum | Spike (Roche Elecsys) | R = 0.9600 | 98.1% | [42] |
| Professionally collected DBS vs. Serum | Spike (Roche Elecsys) | R = 0.9888 | 100.0% | [42] |
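The two metrics in Table 1 can be computed from paired measurements. The following minimal sketch calculates a Pearson correlation coefficient and the fraction of pairs that fall on the same side of a positivity cutoff. The function names, the sample values, and the 0.8 U/mL cutoff are hypothetical placeholders. They are not taken from the cited studies.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient for paired measurements (e.g., DBS vs. serum)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired measurements")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(ss_x * ss_y)


def categorical_agreement(x: Sequence[float], y: Sequence[float], cutoff: float) -> float:
    """Fraction of pairs classified identically (both reactive or both non-reactive).

    Assumption: a result counts as reactive when it is >= cutoff; real cutoffs are assay-specific.
    """
    if len(x) != len(y) or not x:
        raise ValueError("need at least one paired measurement")
    same = sum((a >= cutoff) == (b >= cutoff) for a, b in zip(x, y))
    return same / len(x)


# Hypothetical paired results in U/mL (not data from the cited studies).
dbs = [0.3, 1.2, 5.0, 20.0, 0.5]
serum = [0.4, 1.5, 6.1, 25.0, 0.9]
print(f"r = {pearson_r(dbs, serum):.3f}")
print(f"agreement = {categorical_agreement(dbs, serum, cutoff=0.8):.1%}")
```

Antibody concentrations can span several orders of magnitude. For that reason, method-comparison studies may report correlations on log-transformed values. Check which convention a study used before you compare its coefficients with others.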

Table 2: Analytical Performance of a Representative DBS Assay for SARS-CoV-2 Antibodies

| Performance Parameter | Value | Citation |
|---|---|---|
| Limit of Blank (LOB) | 0.111 U/mL | [42] |
| Limit of Detection (LOD) | 0.180 U/mL | [42] |
| Assay Imprecision (Pooled Standard Deviation) | 0.0419 U/mL (Lot 1), 0.0346 U/mL (Lot 2) | [42] |
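Detection limits and imprecision figures like those in Table 2 are usually derived from replicate measurements. This hedged sketch uses the simplified parametric formulas commonly associated with CLSI EP17:

- LoB = mean of blank replicates + 1.645 × SD of the blanks
- LoD = LoB + 1.645 × SD of a low-concentration sample

It also computes a standard pooled standard deviation across runs. This guide does not describe the cited study's exact procedure, and the full guideline may add refinements such as small-sample corrections. All replicate values below are hypothetical.

```python
import math
import statistics
from typing import Sequence

Z_95 = 1.645  # one-sided 95th percentile of the standard normal distribution


def limit_of_blank(blank_results: Sequence[float]) -> float:
    """LoB = mean(blanks) + 1.645 * SD(blanks), assuming roughly Gaussian blank results."""
    return statistics.mean(blank_results) + Z_95 * statistics.stdev(blank_results)


def limit_of_detection(lob: float, low_sample_results: Sequence[float]) -> float:
    """LoD = LoB + 1.645 * SD of replicates from a low-concentration sample."""
    return lob + Z_95 * statistics.stdev(low_sample_results)


def pooled_sd(groups: Sequence[Sequence[float]]) -> float:
    """Pooled SD across groups (e.g., runs or days): sqrt(sum((n_i - 1) * s_i^2) / sum(n_i - 1))."""
    numerator = sum((len(g) - 1) * statistics.variance(g) for g in groups)
    dof = sum(len(g) - 1 for g in groups)
    return math.sqrt(numerator / dof)


# Hypothetical replicate data in U/mL.
blanks = [0.08, 0.10, 0.09, 0.11, 0.07, 0.10]
low_sample = [0.17, 0.20, 0.18, 0.22, 0.19, 0.16]
lob = limit_of_blank(blanks)
lod = limit_of_detection(lob, low_sample)
print(f"LoB = {lob:.3f} U/mL, LoD = {lod:.3f} U/mL")
print(f"pooled SD = {pooled_sd([[0.50, 0.54, 0.47], [0.52, 0.49, 0.55]]):.4f} U/mL")
```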

Experimental Protocols

DBS Collection by Finger-Prick

- Collection: Disinfect the fingertip and lance it with a single-use lancet. Wipe away the first drop of blood and apply subsequent drops to a filter card to fill 2-4 spots.

- Dry: Allow the blood spots to dry for at least 3 hours at room temperature.

- Store: Place the dried card in a plastic pouch with a silica gel desiccant sachet. Store at -20°C until analysis.

DBS Elution for Serological Analysis

- Punch: Using a ¼-inch (6.35 mm) diameter punch, take two punches from saturated areas of the DBS card.

- Elute: Place the punches into a 16 × 75 mm polypropylene tube and submerge them in 150 µL of universal diluent.

- Shake: Place the tubes on a microplate shaker at 240 rpm for one hour at room temperature.

- Separate: Squeeze the remaining solution out of the punches and discard them. The resulting extract (~100 µL) is ready for analysis.
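The collection, drying, and storage steps can be turned into a simple pre-storage checklist. This sketch checks a hypothetical `DbsCard` record against the protocol's requirements:

- at least 3 hours of drying
- 2-4 filled spots
- a desiccant sachet in the pouch
- storage at -20°C

The record and its field names are assumptions. So is the 5°C tolerance around the -20°C target, which is not part of the protocol. Only storage warmer than the tolerance is flagged.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

MIN_DRYING_TIME = timedelta(hours=3)  # protocol: dry at least 3 h at room temperature
SPOT_RANGE = (2, 4)                   # protocol: fill 2-4 spots per card
TARGET_STORAGE_C = -20.0              # protocol: store at -20 °C until analysis
STORAGE_TOLERANCE_C = 5.0             # hypothetical tolerance, not from the source


@dataclass
class DbsCard:
    """Hypothetical record for one DBS card."""
    card_id: str
    spotted_at: datetime
    bagged_at: datetime
    spots_filled: int
    desiccant_added: bool
    storage_temp_c: float


def check_dbs_card(card: DbsCard) -> List[str]:
    """Return a list of protocol deviations; an empty list means the card passes."""
    issues = []
    drying = card.bagged_at - card.spotted_at
    if drying < MIN_DRYING_TIME:
        issues.append(f"{card.card_id}: dried {drying}, minimum is {MIN_DRYING_TIME}")
    low, high = SPOT_RANGE
    if not low <= card.spots_filled <= high:
        issues.append(f"{card.card_id}: {card.spots_filled} spots filled, expected {low}-{high}")
    if not card.desiccant_added:
        issues.append(f"{card.card_id}: no desiccant sachet in pouch")
    # Assumption: only storage warmer than target + tolerance is treated as a deviation.
    if card.storage_temp_c > TARGET_STORAGE_C + STORAGE_TOLERANCE_C:
        issues.append(f"{card.card_id}: stored at {card.storage_temp_c} °C, target is {TARGET_STORAGE_C} °C")
    return issues


card = DbsCard("DBS-001", datetime(2025, 3, 1, 9, 0), datetime(2025, 3, 1, 11, 30),
               spots_filled=3, desiccant_added=True, storage_temp_c=-20.0)
print(check_dbs_card(card))
```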

Workflow and Relationship Diagrams: DBS Sample Journey; Specimen Analysis Correlation (figures not reproduced)

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for DBS-based Serology

| Item | Function/Description | Citation |
|---|---|---|
| Filter Paper Cards | Specially designed paper (e.g., Whatman 903, Eastern Business Forms 903) for absorbing and preserving a standardized volume of blood. | [42] [45] |
| High-Flow Lancets | Contact-activated devices for minimally invasive finger-prick blood collection. | [42] |
| Universal Diluent | A buffer solution used to submerge and elute analytes from the DBS punch back into a liquid phase for analysis. | [42] |
| Silica Gel Desiccant | Sachets placed with dried cards in storage pouches to absorb atmospheric moisture and prevent sample degradation. | [43] |
| Plastic Specimen Pouches | Sealable bags for storing dried cards, protecting them from physical damage and environmental contamination. | [43] [42] |

Your technical guide to resolving data traceability and sample integrity issues in the research laboratory.

This technical support center provides troubleshooting guides and FAQs for researchers who are implementing Laboratory Information Management Systems (LIMS) to maintain a robust chain of custody (CoC) as part of standardized sample collection and storage.

Frequently Asked Questions (FAQs)

What is the core function of a Chain of Custody in research?

The core function of a Chain of Custody is to provide a chronological, documented trail that ensures sample integrity and data traceability from collection through to final disposition. It records who handled a sample, when, for what purpose, and under what conditions, making data legally defensible and scientifically credible [46] [47].

Our lab is using spreadsheets for sample tracking. When is it time to switch to a LIMS?

Consider a LIMS if you recognize three or more of these signs:

- Your team wastes significant time searching for information [48].

- You experience frequent manual data entry errors [49] [48].

- Preparing for audits is a major burden [48].

- You lack real-time visibility into your lab's workflow status [48].

- You have difficulty complying with standards such as ISO/IEC 17025 [48].

What are the most common pitfalls when implementing a CoC with a new LIMS?

Common pitfalls include:

- inadequate staff training that leads to procedural errors
- overcomplicated procedures that staff bypass
- poor integration with existing instruments
- insufficient quality control, such as a lack of regular audits of the CoC process [46]

How does a LIMS enhance compliance with standards like ISO/IEC 17025?

A LIMS facilitates compliance by centralizing documentation, ensuring data integrity through immutable audit trails, and automating quality control checks. It provides the framework for complete traceability, which is a fundamental requirement for ISO/IEC 17025 accreditation [50] [48].

What is the difference between a Chain of Custody and an Audit Trail?

A Chain of Custody specifically tracks the physical and custodial journey of a sample: its location, handling, and transfers [47]. An Audit Trail is a detailed, timestamped record of every action and change made to the data within the LIMS, providing a transparent history of data modifications [47].

Troubleshooting Guides

Issue 1: Frequent Sample Tracking Errors

Problem: Samples are frequently mislabeled, misplaced, or their current status in the workflow is unknown, leading to testing delays and potential mix-ups.

Diagnosis: This indicates a reliance on error-prone manual tracking methods (e.g., paper logs, spreadsheets) and a lack of unique, scannable identifiers for samples [49].

Solution:

- Implement Unique Barcoding: Use the LIMS to generate and print a unique barcode for each sample at accessioning [49] [46] [51].

- Scan at Every Transfer: Enforce a procedure where staff must scan the sample barcode at every stage—storage, transfer, analysis, and disposal [46] [51].

- Use the Real-Time Dashboard: Monitor the status and location of all samples on the LIMS dashboard, which updates automatically with each scan [48].

Prevention: Include barcode labeling and scanning procedures in standard onboarding training [46]. Audit the sample tracking logs regularly to confirm that staff are scanning at every transfer.
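Barcoding works only if each identifier is unique and any corruption is detectable. The sketch below shows one possible scheme using a hypothetical `PREFIX-NNNNNNNNC` format. A sequential number guarantees uniqueness, and a Luhn (mod 10) check digit rejects a mistyped or misread ID at scan time.

Commercial LIMS generate their own identifiers. Symbologies such as Code 128 also already carry their own check character, so the extra digit mainly protects IDs that are keyed in by hand.

```python
import itertools


def luhn_check_digit(payload: str) -> int:
    """Luhn (mod 10) check digit for a string of decimal digits."""
    total = 0
    # Walk right to left; every second digit, starting with the rightmost payload digit, is doubled.
    for i, ch in enumerate(reversed(payload)):
        d = int(ch)
        if i % 2 == 0:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return (10 - total % 10) % 10


class SampleIdGenerator:
    """Hypothetical sequential sample-ID generator producing PREFIX-NNNNNNNNC."""

    def __init__(self, prefix: str = "BB", start: int = 1) -> None:
        self.prefix = prefix
        self._counter = itertools.count(start)

    def next_id(self) -> str:
        body = f"{next(self._counter):08d}"
        return f"{self.prefix}-{body}{luhn_check_digit(body)}"


def is_valid_id(sample_id: str, prefix: str = "BB") -> bool:
    """Reject IDs with the wrong prefix, wrong length, non-digits, or a failing check digit."""
    head, sep, digits = sample_id.partition("-")
    if head != prefix or not sep or len(digits) != 9:
        return False
    if not all(c in "0123456789" for c in digits):
        return False
    return luhn_check_digit(digits[:-1]) == int(digits[-1])


gen = SampleIdGenerator()
first = gen.next_id()
print(first, is_valid_id(first))  # prints: BB-000000018 True
```

A transposition such as `BB-000000081` fails validation, so the scanner or entry form can refuse it before it ever reaches the sample record.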

Issue 2: Inadmissible Data or Broken Chain of Custody

Problem: During an audit or data review, the history of a sample cannot be fully produced, or the records are incomplete, challenging the validity of your results.

Diagnosis: The chain of custody documentation has gaps. This is often due to manual logbook entries that are lost or incomplete, or a process that allows sample handling outside of the documented system [47].

Solution:

- Verify Automated Audit Trails: Ensure your LIMS is configured to automatically log every user action with a timestamp and secure user ID [50] [47]. This creates an immutable record.

- Reconstruct the Path: Use the LIMS audit trail to reconstruct the sample's full custody path and identify the exact point of failure [50].

- Enforce Role-Based Access: Configure role-based access controls in the LIMS to prevent unauthorized handling of samples and data [50].

Prevention: Foster a culture of integrity in which following CoC procedures is non-negotiable [50]. Establish a clear SOP stating that no sample may be handled unless the action is first logged in the LIMS.
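An audit trail is only as trustworthy as its resistance to silent edits. One common way to make a log tamper-evident (though not tamper-proof) is to chain entries with cryptographic hashes. If any past entry is altered, every later link breaks. The sketch below is a minimal in-memory illustration built on Python's standard `hashlib`. The `AuditLog` class and its fields are hypothetical, and a production LIMS would also rely on access-controlled, write-once storage. The sketch also shows how the same log can be filtered to reconstruct one sample's custody path.

```python
import hashlib
import json
from typing import Dict, List, Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: Dict[str, str]) -> str:
    """SHA-256 over a canonical JSON encoding of the entry, excluding its own hash."""
    body = {k: v for k, v in entry.items() if k != "hash"}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Hypothetical append-only, hash-chained log of sample-handling actions."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, str]] = []

    def append(self, timestamp: str, user_id: str, sample_id: str, action: str, location: str) -> None:
        entry = {
            "timestamp": timestamp,
            "user_id": user_id,
            "sample_id": sample_id,
            "action": action,
            "location": location,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = _digest(entry)
        self.entries.append(entry)

    def first_broken_link(self) -> Optional[int]:
        """Index of the first entry whose content or link no longer verifies, else None."""
        prev = GENESIS
        for i, entry in enumerate(self.entries):
            if entry["prev_hash"] != prev or entry["hash"] != _digest(entry):
                return i
            prev = entry["hash"]
        return None

    def custody_path(self, sample_id: str) -> List[str]:
        """One sample's handling history, in the order the actions were logged."""
        return [
            f'{e["timestamp"]} {e["action"]} @ {e["location"]} by {e["user_id"]}'
            for e in self.entries
            if e["sample_id"] == sample_id
        ]


log = AuditLog()
log.append("2025-03-01T09:00", "jdoe", "BB-000000018", "accessioned", "Receiving")
log.append("2025-03-01T09:20", "jdoe", "BB-000000018", "stored", "Freezer 2 / Shelf B")
log.append("2025-03-02T14:05", "asmith", "BB-000000018", "retrieved", "Bench 4")
print("\n".join(log.custody_path("BB-000000018")))

log.entries[1]["location"] = "Freezer 3 / Shelf A"  # simulate an unlogged edit
print("first broken entry:", log.first_broken_link())
```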

Issue 3: Inefficient Workflows and Slow Turnaround

Problem: Sample processing is slower than expected, workflows are inconsistent between technicians, and staff are overloaded with administrative tasks.

Diagnosis: Workflows are not standardized or automated, leading to reliance on manual interventions, data re-entry, and constant status checks [48].

Solution:

- Map and Configure Workflows: Document your "as-is" sample testing workflows, then configure them within the LIMS to create a standardized "to-be" process [52].

- Automate Assignments and Alerts: Use the LIMS to automatically assign analytical tasks to technicians and trigger alerts when quality control checks fail or when a step is overdue [50] [49].

- Integrate Instruments: Connect analytical instruments to the LIMS to allow for automated data capture, eliminating manual transcription errors [53] [52].

Prevention: Adopt a phased rollout of new automated workflows and gather user feedback for continuous improvement [53] [52].
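Automated assignment and overdue alerts replace the constant manual status checks described in the diagnosis. The following sketch assumes a hypothetical `Task` record, a simple round-robin assignment policy, and a fixed turnaround target. Real LIMS workflow engines expose their own configuration for this, so treat the names and the policy as placeholders.

```python
import itertools
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Sequence


@dataclass
class Task:
    """Hypothetical analytical task for one sample."""
    sample_id: str
    step: str
    assignee: str
    due: datetime
    done: bool = False


def assign_round_robin(sample_ids: Sequence[str], step: str, technicians: Sequence[str],
                       created: datetime, turnaround: timedelta) -> List[Task]:
    """Distribute tasks evenly across technicians, each due `turnaround` after creation."""
    rotation = itertools.cycle(technicians)
    return [Task(sid, step, next(rotation), created + turnaround) for sid in sample_ids]


def overdue_alerts(tasks: Sequence[Task], now: datetime) -> List[str]:
    """Alert messages for every open task whose due time has passed."""
    return [
        f"OVERDUE: {t.step} for {t.sample_id} (assigned to {t.assignee}), due {t.due:%Y-%m-%d %H:%M}"
        for t in tasks
        if not t.done and t.due < now
    ]


start = datetime(2025, 3, 3, 8, 0)
tasks = assign_round_robin(["S1", "S2", "S3"], "ELISA", ["tech_a", "tech_b"], start, timedelta(hours=24))
tasks[0].done = True
for message in overdue_alerts(tasks, now=start + timedelta(hours=30)):
    print(message)
```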

Issue 4: Failed Audit Due to Inadequate Traceability

Problem: An auditor cannot verify the integrity of your data or the path of a critical sample, resulting in a compliance finding.

Diagnosis: The laboratory cannot promptly produce a complete, unbroken record of sample custody and data history, often due to disjointed records and a lack of system-wide traceability [48].

Solution:

- Generate Pre-Built Reports: Utilize the LIMS reporting module to generate chain of custody and audit trail reports for any sample or date range on demand [48].

- Centralize Documentation: Use the LIMS to store and manage all relevant SOPs, instrument calibration records, and personnel qualifications in a single, audit-ready location [48] [52].

- Demonstrate Data Integrity: Show the auditor the enforced user authentication and the immutable, timestamped audit trail for all data modifications [50].

Prevention: Conduct regular internal audits using the same report generation process to identify and correct gaps before an external audit [50] [46].
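An on-demand custody report is essentially a filtered, time-ordered export of logged events. The sketch below assumes events are stored as dictionaries with ISO 8601 timestamps, which is a hypothetical schema. It writes a CSV report for one sample, a date range, or both, using Python's standard `csv` module. A LIMS reporting module would typically add signatures, headers, and formatting on top of this.

```python
import csv
import io
from datetime import datetime
from typing import Dict, Iterable, Optional

FIELDS = ["timestamp", "sample_id", "action", "location", "user_id"]


def coc_report(events: Iterable[Dict[str, str]], sample_id: Optional[str] = None,
               start: Optional[datetime] = None, end: Optional[datetime] = None) -> str:
    """Return CSV text of matching events, oldest first; filters left as None are ignored."""
    selected = []
    for event in events:
        ts = datetime.fromisoformat(event["timestamp"])
        if sample_id is not None and event["sample_id"] != sample_id:
            continue
        if (start is not None and ts < start) or (end is not None and ts > end):
            continue
        selected.append((ts, event))
    selected.sort(key=lambda pair: pair[0])  # chronological order

    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    for _, event in selected:
        writer.writerow(event)
    return buffer.getvalue()


# Hypothetical logged events.
events = [
    {"timestamp": "2025-03-02T14:05", "sample_id": "S1", "action": "retrieved", "location": "Bench 4", "user_id": "asmith"},
    {"timestamp": "2025-03-01T09:00", "sample_id": "S1", "action": "accessioned", "location": "Receiving", "user_id": "jdoe"},
    {"timestamp": "2025-03-01T10:00", "sample_id": "S2", "action": "accessioned", "location": "Receiving", "user_id": "jdoe"},
]
print(coc_report(events, sample_id="S1"))
```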

Experimental Protocols & Methodologies

Standardized Workflow for Chain of Custody Integrity

The following methodology details the implementation of a LIMS-supported CoC protocol to ensure standardization in sample collection and storage research.

Procedure:

Sample Collection & Accessioning:

- Upon receipt, create a sample record in the LIMS.

- Action: The system automatically generates a unique identifier and a corresponding barcode label. Print and affix this label to the sample container [49] [46].

- Documentation: The LIMS record captures collector information, date/time of receipt, and initial sample condition.

Secure Storage & Monitoring:

- Action: Scan the sample barcode and the barcode of its assigned storage location (e.g., freezer shelf) [49] [51]. The LIMS automatically updates the sample's location.

- Integration: For critical storage, link IoT-enabled monitors (e.g., for temperature) to the LIMS. The system will automatically log environmental conditions and trigger alerts for any deviations [50].

Analysis & Data Capture:

- Action: When retrieving the sample for analysis, scan its barcode. The LIMS will present the authorized testing workflow.

- Data Integrity: After analysis, import instrument results directly into the sample's record through system integration to prevent transcription errors [49] [52]. All actions are automatically recorded in the audit trail.

Final Disposition & Archiving:

- Action: For disposal or long-term archiving, perform a final barcode scan and select the appropriate disposition action in the LIMS [51].

- Documentation: The LIMS records the date, time, and authorizing user, finalizing the sample's lifecycle with a complete and unbroken chain of custody.
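The four stages of this procedure can be enforced as a small state machine. The system then rejects out-of-order actions, for example analyzing a sample that was never accessioned, or handling one after disposal. The states and allowed transitions below are a hypothetical simplification of the procedure above. They are not a feature of any specific LIMS.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical lifecycle: None means "no record yet"; disposed/archived are terminal states.
ALLOWED: Dict[Optional[str], Set[str]] = {
    None: {"accessioned"},
    "accessioned": {"stored"},
    "stored": {"in_analysis", "disposed", "archived"},
    "in_analysis": {"stored", "disposed", "archived"},
    "disposed": set(),
    "archived": set(),
}


@dataclass
class SampleLifecycle:
    sample_id: str
    state: Optional[str] = None
    history: List[Tuple[str, str, str]] = field(default_factory=list)  # (timestamp, user, state)

    def transition(self, new_state: str, user_id: str, timestamp: str) -> None:
        """Apply a scanned action, refusing transitions the workflow does not allow."""
        if new_state not in ALLOWED.get(self.state, set()):
            raise ValueError(f"{self.sample_id}: cannot go from {self.state!r} to {new_state!r}")
        self.state = new_state
        self.history.append((timestamp, user_id, new_state))


sample = SampleLifecycle("BB-000000018")
for step, ts in [("accessioned", "09:00"), ("stored", "09:20"), ("in_analysis", "14:05"), ("disposed", "16:40")]:
    sample.transition(step, "jdoe", ts)
try:
    sample.transition("stored", "jdoe", "17:00")  # handling after disposal is rejected
except ValueError as err:
    print(err)
```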

The Scientist's Toolkit: Research Reagent & Material Solutions

The following materials are essential for establishing and maintaining a robust chain of custody protocol.

| Item | Function in Chain of Custody |
|---|---|
| Barcode Labels & Scanner | Creates a unique, machine-readable identity for each sample, enabling fast, error-free logging of its movement and status at every stage [49] [46]. |
| Tamper-Evident Seals | Provides physical evidence of unauthorized access to sample containers, crucial for maintaining sample integrity, especially in forensic or legally sensitive research [51]. |
| Certified Reference Materials | Used to calibrate instruments and validate analytical methods, ensuring the accuracy and defensibility of the test results linked to the sample in the LIMS [51]. |
| Temperature Monitoring Devices | IoT-enabled sensors that continuously log storage conditions (e.g., temperature, humidity). They can be integrated with the LIMS to automatically record and alert deviations that could compromise sample stability [50]. |
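Deviation alerts from integrated monitors usually need to separate brief fluctuations, such as a door opening, from sustained excursions. The sketch below scans time-ordered readings and reports excursions above a limit that last at least a minimum duration. The -70°C limit echoes the plasma/serum storage recommendation earlier in this guide. The 15-minute grace period, the reading format, and the function name are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Sequence, Tuple

Reading = Tuple[datetime, float]              # (timestamp, temperature in °C)
Excursion = Tuple[datetime, datetime, float]  # (start, end, warmest temperature)


def find_excursions(readings: Sequence[Reading], limit_c: float,
                    min_duration: timedelta) -> List[Excursion]:
    """Return sustained periods warmer than limit_c.

    Readings must be sorted by time. An excursion starts at the first out-of-range
    reading and ends at the first reading back in range (or at the last reading if
    the series ends while still out of range).
    """
    excursions: List[Excursion] = []
    start: Optional[datetime] = None
    last_ts: Optional[datetime] = None
    peak = limit_c
    for ts, temp in readings:
        if temp > limit_c:
            if start is None:
                start, peak = ts, temp
            peak = max(peak, temp)
        elif start is not None:
            if ts - start >= min_duration:  # ignore brief fluctuations
                excursions.append((start, ts, peak))
            start = None
        last_ts = ts
    if start is not None and last_ts is not None and last_ts - start >= min_duration:
        excursions.append((start, last_ts, peak))
    return excursions


# Hypothetical freezer log, one reading every 10 minutes.
t0 = datetime(2025, 3, 1, 0, 0)
temps = [-80, -79, -65, -60, -66, -80, -68, -80]
readings = [(t0 + timedelta(minutes=10 * i), float(x)) for i, x in enumerate(temps)]
for begin, end, warmest in find_excursions(readings, limit_c=-70.0, min_duration=timedelta(minutes=15)):
    print(f"ALERT: above -70 °C from {begin:%H:%M} to {end:%H:%M}, warmest {warmest} °C")
```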