Optimizing YOLOv5n for Egg Detection: A Parameter Tuning Guide for Biomedical Research

Abstract

This article provides a comprehensive guide for researchers and scientists on optimizing YOLOv5n parameters for accurate and efficient egg detection in biomedical applications, such as parasite diagnostics. It covers foundational concepts of YOLOv5n and its optimization challenges, details a step-by-step methodology for hyperparameter evolution using genetic algorithms, addresses common troubleshooting scenarios like overfitting and poor convergence, and presents a framework for validating model performance against state-of-the-art methods. The content is tailored to empower professionals in drug development and clinical research to deploy robust, automated detection systems, thereby enhancing diagnostic throughput and reliability.

Understanding YOLOv5n and the Egg Detection Challenge

YOLOv5n Architecture and Core Components

YOLOv5n is the nano-sized variant of the YOLOv5 (You Only Look Once) family of models, which are single-stage object detectors known for their exceptional speed and accuracy [1] [2]. Its architecture is strategically designed to be lightweight, making it suitable for deployments with limited computational resources.

The model is structured into three key parts [1]:

- Backbone: A Convolutional Neural Network (CNN) that aggregates and forms image features at different granularities. This is the initial feature extractor.

- Neck: A series of layers that mix and combine the image features from the backbone before passing them forward to the head. YOLOv5n uses a Feature Pyramid Network (FPN) and a Path Aggregation Network (PAN) structure to enhance feature propagation [3].

- Head: The final section consumes features from the neck and performs the core steps of box and class prediction, outputting the detected objects' bounding boxes, confidence scores, and class labels [1].

A key characteristic of YOLOv5n is its use of a .yaml configuration file to define the model. This file specifies critical scaling parameters—depth_multiple for adjusting the number of modules and width_multiple for adjusting the number of channels in convolutional layers—which are the primary differentiators between the nano (n), small (s), medium (m), large (l), and extra-large (x) variants [1].
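As a rough illustration of how the two multipliers act, the sketch below scales a module repeat count and a channel count for each variant. The multiplier values are the ones recalled from the Ultralytics model .yaml files and should be checked against your copy of the repository. The helper names (scale_depth, scale_width) are hypothetical, and the rounding rules are an assumption modeled on the model parser, not a copy of it.

```python
import math

# (depth_multiple, width_multiple) per variant; assumed values, verify
# against the models/*.yaml files in your copy of the repository.
VARIANTS = {
    "n": (0.33, 0.25),
    "s": (0.33, 0.50),
    "m": (0.67, 0.75),
    "l": (1.00, 1.00),
    "x": (1.33, 1.25),
}


def scale_depth(n_modules, depth_multiple):
    """Scale a module repeat count; a count of 1 is left unchanged."""
    if n_modules <= 1:
        return n_modules
    return max(round(n_modules * depth_multiple), 1)


def scale_width(channels, width_multiple, divisor=8):
    """Scale a channel count and round up to a multiple of `divisor`."""
    return math.ceil(channels * width_multiple / divisor) * divisor


for name, (depth, width) in VARIANTS.items():
    # A block repeated 9 times with 1024 output channels in the base definition.
    print(name, scale_depth(9, depth), scale_width(1024, width))
```

Under these assumptions the nano variant keeps 3 of 9 repeats and 256 of 1024 channels, which is where most of its parameter savings come from.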

Diagram 1: YOLOv5n Core Architecture

Relevance for Biomedical Image Analysis

The lightweight nature of YOLOv5n makes it highly relevant for biomedical image analysis, where computational power may be limited, yet rapid and accurate detection is crucial. Its efficacy has been demonstrated in various biomedical applications, proving particularly valuable for detecting small objects in complex images.

In parasitology, YOLOv5n serves as an excellent baseline model for the automated detection of parasite eggs in microscope images [3]. This application is critical for diagnosing intestinal parasitic infections in resource-limited settings. Furthermore, research has shown that modifications to the standard YOLOv5n architecture can yield even better performance. One study replaced the standard FPN in the neck with an Asymptotic Feature Pyramid Network (AFPN) and modified the C3 module in the backbone to a C2f module [3]. This enriched gradient flow and improved spatial context integration, resulting in a lightweight model called YAC-Net that outperformed the baseline YOLOv5n in precision, recall, and mAP@0.5, while also reducing the number of parameters [3].

Beyond parasitology, optimized YOLOv5 architectures have been successfully applied to other medical domains. For instance, a modified YOLOv5m model was enhanced with a Squeeze-and-Excitation (SE) block to improve channel-wise feature relationships, leading to superior detection of kidney stones in CT scans [4]. Another study utilized YOLOv5 for detecting semiconductor components in reel package X-ray imagery, showcasing its capability for small-object detection in an industrial context that shares similarities with biomedical analysis [5].

Table 1: Performance of YOLOv5n and Modified Architectures in Biomedical Detection

| Model / Study | Application | Key Metric | Result | Model Size / Parameters |
|---|---|---|---|---|
| YOLOv5n (Baseline) [3] | Parasite Egg Detection | mAP@0.5 | Baseline | ~1.9M parameters |
| YAC-Net (YOLOv5n modified) [3] | Parasite Egg Detection | mAP@0.5 | 0.9913 (2.7% improvement) | 1,924,302 parameters |
| Improved YOLOv5 [5] | Semiconductor X-ray | mAP | 0.622 (vs. 0.349 for original) | Not Specified |
| Optimized YOLOv5m [4] | Kidney Stone CT Scans | Inference Speed | 8.2 ms per image | ~41 MB |

Experimental Protocols for Parameter Optimization

For a thesis focused on parameter optimization of YOLOv5n for egg detection, a rigorous and reproducible experimental protocol is essential. The following methodology outlines the key steps.

Dataset Preparation and Annotation

The foundation of any effective model is a high-quality dataset. Images should be annotated in the YOLO format, where each image has a corresponding text file containing one bounding box annotation per line in the format: <object-class-ID> <X center> <Y center> <Box width> <Box height> [6]. These coordinates are normalized to the image dimensions, ranging from 0 to 1. The dataset should be split into training, validation, and test sets, typically following an 80/10/10 ratio. A dataset configuration file (e.g., egg_data.yaml) must be created to specify the paths to these splits and the number and names of classes [6].
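The normalization described above can be sketched as a small conversion from pixel-space corner coordinates to one YOLO label line. The function name to_yolo_line and the six-decimal formatting are illustrative choices, not part of any specific annotation tool.

```python
def to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space box to a normalized YOLO annotation line."""
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# A 200x200 pixel egg box in a 1000x800 image, class 0.
print(to_yolo_line(0, 100, 200, 300, 400, 1000, 800))
# prints: 0 0.200000 0.375000 0.200000 0.250000
```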

Model Training and Optimization Techniques

Training is initiated using the train.py script. For parameter optimization, several key approaches are recommended [6] [3]:

- Transfer Learning: It is highly recommended to start from pre-trained weights (e.g., yolov5n.pt) rather than training from scratch, especially with datasets of limited size. This leverages features learned from a large dataset like COCO [6].

- Hyperparameter Tuning: The hyperparameters for training, including learning rate, momentum, and loss components, are defined in a hyp.scratch.yaml or hyp.finetune.yaml file [6]. Using genetic evolution or grid search to optimize these parameters can lead to significant performance gains.

- Architectural Modifications: Based on the success of models like YAC-Net, researchers can experiment with specific modifications to the YOLOv5n architecture, such as replacing the FPN with an AFPN for better multi-scale feature fusion or integrating attention modules like the SE block to recalibrate channel-wise feature responses [3] [4].

- Data Augmentation: YOLOv5's built-in data loader performs online augmentations, including scaling, color space adjustments, and mosaic augmentation (which combines four images into one). These techniques help the model generalize better and are particularly effective for addressing the "small object problem" [1] [6].
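To make the idea of genetic hyperparameter evolution concrete, here is a minimal mutate-and-keep-the-best sketch. It is not the YOLOv5 evolution routine. The function names, the bounds, and the toy fitness function (a stand-in for "train the model, then read validation mAP") are all assumptions made for illustration.

```python
import random


def mutate(hyp, bounds, rng, sigma=0.2, prob=0.8):
    """Return a mutated copy of `hyp`, with every value clipped to `bounds`."""
    child = {}
    for name, value in hyp.items():
        low, high = bounds[name]
        if rng.random() < prob:
            value *= 1.0 + rng.gauss(0.0, sigma)  # multiplicative Gaussian noise
        child[name] = min(max(value, low), high)
    return child


def evolve(hyp, bounds, fitness, generations=20, seed=0):
    """Keep the best candidate seen so far and mutate it each generation."""
    rng = random.Random(seed)
    best, best_fit = dict(hyp), fitness(hyp)
    for _ in range(generations):
        candidate = mutate(best, bounds, rng)
        fit = fitness(candidate)
        if fit > best_fit:
            best, best_fit = candidate, fit
    return best, best_fit


def toy_fitness(hyp):
    # Hypothetical stand-in for a full training run that returns mAP.
    return -((hyp["lr0"] - 0.005) ** 2) - (hyp["momentum"] - 0.9) ** 2


# Illustrative starting point and search bounds (assumed values).
start = {"lr0": 0.01, "momentum": 0.937}
bounds = {"lr0": (1e-5, 0.1), "momentum": (0.6, 0.98)}

best, best_fit = evolve(start, bounds, toy_fitness)
print(best, best_fit)
```

In a real study each fitness evaluation is a full training run, so the number of generations is usually limited by the compute budget.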

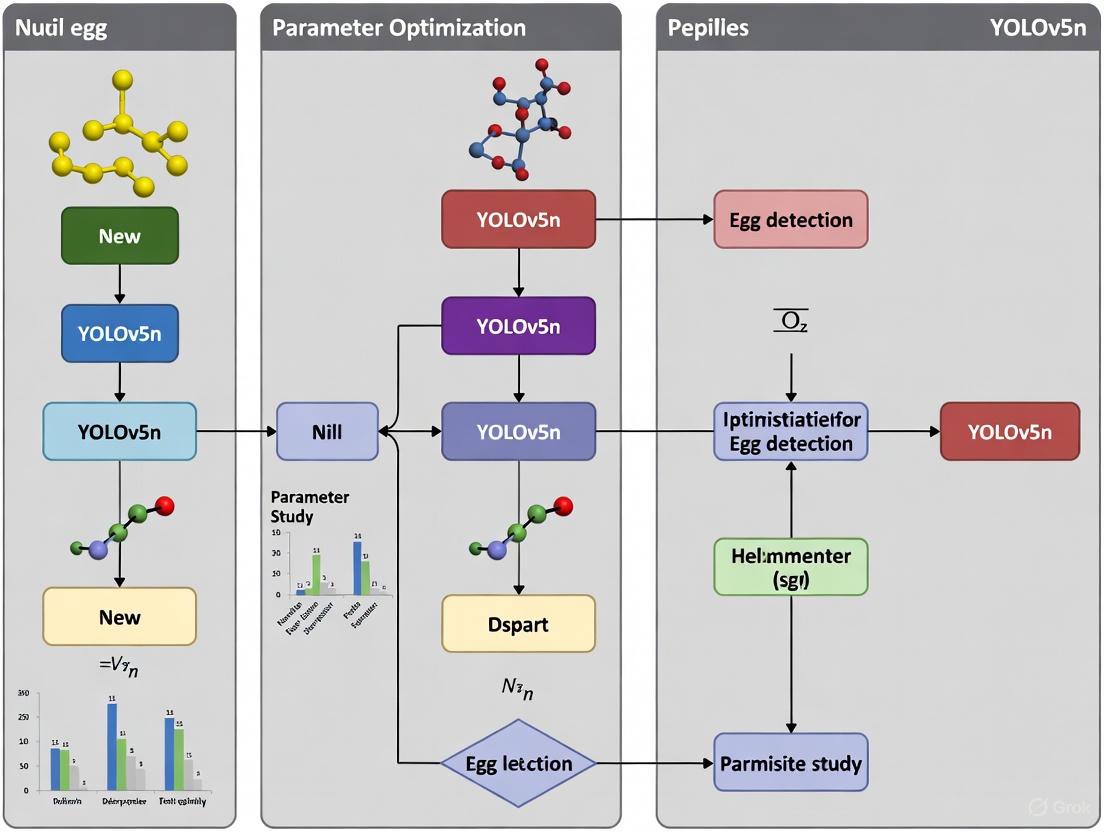

Diagram 2: Parameter Optimization Workflow

Performance Evaluation

Model performance should be evaluated on the held-out test set using standard object detection metrics [6]:

- Precision: Measures how many of the detected eggs are correct.

- Recall: Measures how many of the actual eggs in the images were detected.

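- Mean Average Precision (mAP): The primary metric for object detection. Average precision (AP) summarizes the precision-recall curve for a single class, and mAP is the mean of AP over all classes. mAP@0.5 counts a detection as correct at an IoU threshold of 0.5, while mAP@0.5:0.95 averages the result over IoU thresholds from 0.5 to 0.95 in steps of 0.05. Both are commonly reported [6].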
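The sketch below shows how IoU, precision, and recall relate for a single image. It uses a simple greedy one-to-one matching and assumes the predictions are already sorted by confidence. This is a simplification for illustration, not the full mAP computation used by the YOLOv5 validation script.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, truths, iou_thr=0.5):
    """Greedily match each prediction to its best unmatched ground-truth box."""
    matched = set()
    true_pos = 0
    for pred in preds:
        best_j, best_iou = -1, iou_thr
        for j, truth in enumerate(truths):
            if j in matched:
                continue
            value = iou(pred, truth)
            if value >= best_iou:
                best_j, best_iou = j, value
        if best_j >= 0:
            matched.add(best_j)
            true_pos += 1
    precision = true_pos / len(preds) if preds else 0.0
    recall = true_pos / len(truths) if truths else 0.0
    return precision, recall


truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(1, 1, 10, 10), (50, 50, 60, 60)]  # one good box, one false alarm
print(precision_recall(preds, truths))  # prints: (0.5, 0.5)
```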

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools and Materials for YOLOv5n-based Egg Detection Research

| Item / Solution | Function / Purpose | Example / Specification |
|---|---|---|
| Microscopy & Imaging System | Acquires high-quality digital images of samples for model input. | Microscope with X-Y stage and high-definition camera [3]. |
| Annotation Platform | Enables manual labeling of images to create the ground-truth dataset. | Roboflow [6] or other platforms supporting YOLO format export. |
| Computing Environment | Provides the hardware and software necessary for model training and experimentation. | Python 3.8+, PyTorch 1.8+, CUDA-compatible GPU (e.g., NVIDIA V100) [7] [8]. |
| YOLOv5 Codebase | The core implementation of the model used for training, validation, and export. | Ultralytics YOLOv5 GitHub repository [2]. |
| Experiment Tracking Tool | Logs training metrics, hyperparameters, and results for comparison and reproducibility. | TensorBoard, Weights & Biases, or Comet ML [9]. |

Troubleshooting Guides and FAQs

Q: How can I improve the performance of my YOLOv5n model on small parasite eggs? A: Several strategies can be employed:

- Architectural Modification: Replace the standard FPN in the model's neck with an Asymptotic Feature Pyramid Network (AFPN). This structure better integrates spatial contextual information from non-adjacent levels, which is beneficial for detecting small objects [3].

- Data Augmentation: Leverage YOLOv5's built-in mosaic augmentation, which combines four images into one. This is specifically designed to help the model learn to recognize smaller objects and improve generalization [1] [6].

- Anchor Box Tuning: Allow the model to auto-learn anchor boxes based on the distribution of bounding box sizes in your specific custom dataset. This ensures the preset anchor dimensions are well-suited for the morphology of parasite eggs [1].
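One way to judge whether a set of anchors suits the egg sizes in a dataset is a "best possible recall" style check: a label counts as covered if at least one anchor is within a fixed width and height ratio of it. The sketch below is modeled on that idea. The function name, the threshold of 4.0, and the example anchors and boxes are assumptions for illustration rather than values taken from the cited studies.

```python
def best_possible_recall(anchors, boxes, thr=4.0):
    """Fraction of (w, h) boxes that some anchor matches within a ratio of `thr`."""
    covered = 0
    for box_w, box_h in boxes:
        best = 0.0
        for anchor_w, anchor_h in anchors:
            ratio_w = box_w / anchor_w
            ratio_h = box_h / anchor_h
            # The worse of the two sides decides how well this anchor fits.
            fit = min(min(ratio_w, 1 / ratio_w), min(ratio_h, 1 / ratio_h))
            best = max(best, fit)
        if best > 1.0 / thr:
            covered += 1
    return covered / len(boxes)


anchors = [(10, 13), (16, 30), (33, 23)]   # example anchors in pixels
boxes = [(12, 14), (30, 25), (2, 2)]       # label sizes; the last is very small
print(best_possible_recall(anchors, boxes))
```

A value well below 1.0 suggests the anchors should be recomputed for the dataset. Here the 2x2 pixel box is not covered by any anchor.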

Q: My model training is slow. How can I speed it up? A: To accelerate the training process:

- Increase Batch Size: Use the largest batch size that your GPU memory allows. You can use the --batch-size -1 argument to enable YOLOv5's AutoBatch feature, which automatically finds the optimal batch size [8] [2].

- Utilize Multiple GPUs: If available, configure your training script to use multiple GPUs. This distributes the workload and can significantly reduce training time [9].

- Cache Images: Use the --cache argument during training (e.g., --cache ram or --cache disk) to store pre-loaded images in memory or on disk, which minimizes data loading overhead [6].
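The single-GPU options above can be combined into one training invocation. The sketch below only assembles the argument list and does not launch anything. The helper name build_train_command is hypothetical, and egg_data.yaml is the example dataset file name used earlier in this guide.

```python
def build_train_command(data_yaml, weights="yolov5n.pt", epochs=100,
                        batch_size=-1, cache="ram"):
    """Assemble an argument list for YOLOv5's train.py (nothing is executed)."""
    if cache not in (None, "ram", "disk"):
        raise ValueError("cache must be None, 'ram' or 'disk'")
    cmd = [
        "python", "train.py",
        "--data", data_yaml,
        "--weights", weights,
        "--epochs", str(epochs),
        "--batch-size", str(batch_size),  # -1 requests AutoBatch
    ]
    if cache is not None:
        cmd += ["--cache", cache]
    return cmd


print(" ".join(build_train_command("egg_data.yaml")))
```

The resulting list can be passed to subprocess.run from the repository root once the dataset file exists.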

Q: Which parameters should I monitor during training to assess model convergence and performance? A: While loss is a primary indicator, you should also continuously monitor the following key metrics [9] [6]:

- Precision and Recall: To understand the model's accuracy and its ability to find all relevant objects.

- mAP (mean Average Precision): Specifically, mAP@0.5 and mAP@0.5:0.95 are the most important metrics for evaluating overall object detection performance. These metrics can be visualized in the training logs and through integrated tools like TensorBoard or Weights & Biases [9].

Q: I am getting poor results even after many epochs. What could be wrong? A: This common issue can often be traced to the dataset or initial setup:

- Verify Dataset and Labels: The quality and format of your data are paramount. Ensure your annotations are accurate and adhere to the YOLO format. Incorrect or low-quality labels will prevent the model from learning effectively [9].

- Leverage Pretrained Weights: If you are training "from scratch" (with randomly initialized weights), try initializing your model with pretrained weights (e.g., yolov5n.pt) instead. This practice, known as transfer learning, often leads to faster convergence and better performance, especially with smaller datasets [9] [6].

- Check Class Distribution: An imbalance in your dataset, where one class has many more instances than others, can cause the model to become biased. Analyze your dataset to ensure a relatively balanced distribution of classes [9].
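A quick way to check the class distribution is to count the class IDs across the YOLO label files. The sketch below works on label file contents passed in as strings so that it stays self-contained. In practice the strings would be read from the .txt files in the labels directory. The function name class_counts is illustrative.

```python
from collections import Counter


def class_counts(label_texts):
    """Count class IDs over the contents of several YOLO label files."""
    counts = Counter()
    for text in label_texts:
        for line in text.splitlines():
            parts = line.split()
            if len(parts) == 5:  # class x_center y_center width height
                counts[int(parts[0])] += 1
    return counts


labels = [
    "0 0.5 0.5 0.1 0.1\n0 0.2 0.3 0.1 0.1\n",
    "1 0.4 0.4 0.2 0.2\n",
    "",  # an image with no eggs has an empty label file
]
print(class_counts(labels))
```

Lines that do not have exactly five fields are skipped, which also makes the count a light sanity check on label formatting.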

The critical role of automated egg detection in parasite diagnostics and public health

FAQs and Troubleshooting Guide

This section addresses common challenges researchers face when optimizing YOLOv5n models for detecting parasite eggs in microscopic images.

Q1: My YOLOv5n model has high precision but low recall, missing many parasite eggs in complex backgrounds. How can I improve detection sensitivity?

- Problem Analysis: High precision with low recall means the detections the model makes are mostly correct, but many eggs are missed. This is often caused by an imbalance between abundant background regions and relatively few parasite egg instances in the training data. It can also stem from anchor box sizes that are not well suited to the dimensions of the target eggs.

- Recommended Solutions:

  - Data Augmentation: Implement mosaic augmentation and random affine transformations. These techniques, native to YOLOv5, improve the model's robustness to object scale and translation, helping it generalize better to eggs in varied contexts [10].

  - Anchor Box Optimization: Use the AutoAnchor function in YOLOv5 to automatically recalculate anchor boxes based on your custom dataset's ground truth boxes. This ensures the preset anchor sizes better match the actual size distribution of parasite eggs in your images [10].

  - Neck Architecture Modification: Consider replacing the standard PANet in the neck with a weighted Bi-directional Feature Pyramid Network (BiFPN). This enhancement improves the fusion of features from different scales, which is crucial for detecting small objects like parasite eggs [11].

Q2: How can I prevent overfitting when my annotated dataset of parasite egg images is limited?

- Problem Analysis: Overfitting occurs when a model learns the training data too well, including its noise and irrelevant details, and fails to perform on new, unseen data. This is a significant risk with small datasets.

- Recommended Solutions:

  - Advanced Data Augmentation: Leverage YOLOv5's built-in augmentations, including copy-paste augmentation, MixUp, and HSV (Hue, Saturation, Value) color space adjustments. These methods artificially expand your dataset and encourage the model to learn more invariant features [10].

  - Transfer Learning: Start with a model pre-trained on a large, diverse dataset like COCO. This provides a strong foundational understanding of general features (edges, textures) before fine-tuning on your specific parasite egg dataset [12].

  - Regularization Techniques: Employ the Exponential Moving Average (EMA) during training. EMA stabilizes the training process by averaging model parameters over past steps, which reduces generalization error and helps prevent overfitting [10].

Q3: What is the most effective method for creating a high-quality dataset for parasite egg detection?

- Problem Analysis: The accuracy of any deep learning model is fundamentally limited by the quality, quantity, and consistency of its training data. This is especially critical in medical diagnostics.

- Recommended Solutions:

  - Standardized Annotation: Use a dedicated annotation tool like Roboflow to ensure consistent and accurate bounding boxes are drawn around parasite eggs. This creates a unified ground truth for model training [12].

  - Multi-Source Data Collection: To increase dataset diversity and size, combine images from multiple sources, such as public hospitals, research institutions, and standardized repositories like the IEEE Dataport [12].

  - Robust Data Splitting: Split your annotated dataset into training (70%), validation (20%), and testing (10%) sets. This standard practice allows for unbiased evaluation of the model's final performance [12].
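The 70/20/10 split described above can be sketched as a seeded shuffle followed by slicing, so that the same split can be reproduced later. The function name split_dataset and the file naming pattern are illustrative assumptions.

```python
import random


def split_dataset(items, ratios=(0.7, 0.2, 0.1), seed=42):
    """Shuffle reproducibly, then slice into train, validation, and test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * ratios[0])
    n_val = round(len(items) * ratios[1])
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # whatever remains goes to the test set
    return train, val, test


image_names = [f"slide_{i:03d}.jpg" for i in range(100)]  # hypothetical names
train, val, test = split_dataset(image_names)
print(len(train), len(val), len(test))  # prints: 70 20 10
```

If several images come from the same slide or patient, splitting by slide or patient rather than by image avoids leaking near-duplicates into the test set.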

Q4: How can I optimize YOLOv5n for deployment in resource-limited field settings?

- Problem Analysis: Deploying models in field clinics or on portable devices requires a balance between speed, model size, and accuracy.

- Recommended Solutions:

  - Model Selection: YOLOv5n is the nano-version of YOLOv5, specifically designed to be fast and lightweight, making it an excellent starting point for edge devices [12].

  - Simplified Neck Structure: Fuse the GSConv module with the neck network. This design reduces computational complexity and model parameters while maintaining good accuracy, which is ideal for environments with limited GPU power [11].

  - Mixed Precision: Train your model using mixed precision (FP16) to reduce memory usage and increase computational speed on supported hardware. For faster inference in the field, the trained model can likewise be run or exported in half precision where the target device supports it [10].

Experimental Protocols and Data

This section provides detailed methodologies and quantitative results from key studies in automated egg detection.

Detailed Protocol: Intestinal Parasite Egg Detection using YOLOv5

The following workflow is adapted from a study that achieved a mean Average Precision (mAP) of approximately 97% [12].

- Dataset Collection & Annotation:

  - Collect microscopic images of stool samples at 10x magnification. The dataset should include various parasite eggs (e.g., Hookworm, Hymenolepis nana, Taenia, Ascaris lumbricoides).

  - Annotate all parasite eggs in the images using a tool like Roboflow, drawing bounding boxes to define the ground truth [12].

- Data Pre-processing & Augmentation:

  - Resize all images to a uniform resolution of 416x416 pixels. YOLOv5 expects input dimensions that are multiples of its maximum stride (32), which 416 satisfies.

  - Apply augmentation techniques to increase dataset diversity and prevent overfitting. These include vertical flipping and rotational augmentation [12].

- Model Configuration & Training:

  - Split the dataset into 70% training, 20% validation, and 10% testing.

  - Configure the YOLOv5n model and its key training hyperparameters.

  - Train the model, validating on the held-out validation set.

- Model Evaluation:

  - Evaluate the final model on the unseen test set. Key metrics include precision, recall, and mean Average Precision at an IoU threshold of 0.5 (mAP@0.5).

Diagram 1: YOLOv5n Experimental Workflow

Detailed Protocol: Video-based Detection for Unwashed Chicken Eggs

This protocol outlines an approach for detecting defects on moving, unwashed eggs, achieving a final accuracy of 96.4% [11].

- System Design:

  - Design a conveyor system with rollers that cause eggs to undergo compound rotation and translation, ensuring the camera captures the entire egg surface.

  - Set up a ring LED light source and an industrial camera (e.g., 1920x1080 resolution, 120 fps) with its imaging plane parallel to the horizontal movement plane of the eggs.

- Model Enhancement:

  - Modify the YOLOv5 backbone by integrating the Coordinate Attention (CA) mechanism to improve feature representation.

  - Fuse BiFPN and GSConv with the neck network to enhance multi-scale feature fusion and reduce computational cost [11].

- Object Tracking & Temporal Integration:

  - Use the ByteTrack algorithm to assign a consistent ID to each egg as it moves across video frames.

  - To make a final classification decision for each egg, aggregate the detection results from multiple consecutive frames (e.g., 5 frames) associated with its ID [11].
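The temporal integration step can be sketched as grouping per-frame detections by track ID and taking a vote. The cited study does not specify its aggregation rule in this guide, so the majority vote, the minimum of 5 frames, and the label names below are assumptions for illustration. The track IDs are assumed to come from a tracker such as ByteTrack.

```python
from collections import Counter, defaultdict


def classify_tracks(detections, min_frames=5):
    """Majority-vote a label per track ID from (frame, track_id, label) tuples."""
    labels_by_track = defaultdict(list)
    for _frame, track_id, label in detections:
        labels_by_track[track_id].append(label)
    decisions = {}
    for track_id, labels in labels_by_track.items():
        if len(labels) >= min_frames:  # skip eggs seen in too few frames
            decisions[track_id] = Counter(labels).most_common(1)[0][0]
    return decisions


detections = [
    (0, 1, "cracked"), (1, 1, "cracked"), (2, 1, "intact"),
    (3, 1, "cracked"), (4, 1, "cracked"),
    (3, 2, "intact"), (4, 2, "intact"),  # track 2 has only two frames so far
]
print(classify_tracks(detections))  # prints: {1: 'cracked'}
```

Voting over several frames makes the final decision less sensitive to a single frame in which a crack is hidden or a reflection is mistaken for one.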

The table below summarizes quantitative results from various automated egg detection studies.

Table 1: Performance Comparison of Automated Egg Detection Methods

| Detection Target | Methodology | Key Metrics | Performance | Citation |
|---|---|---|---|---|
| Intestinal Parasite Eggs | YOLOv5 | Mean Average Precision (mAP) | ~97% | [12] |
| Intestinal Parasite Eggs | YOLOv5 | Detection Time | 8.5 ms/sample | [12] |
| Unwashed Chicken Eggs (Cracks) | Improved YOLOv5 + ByteTrack | Accuracy | 96.4% | [11] |
| Unwashed Chicken Eggs (Cracks) | Improved YOLOv5 + ByteTrack | Precision / Recall / mAP@0.5 | +2.2% / +4.4% / +4.1% (vs. baseline) | [11] |
| Chicken Egg Damage | GoogLeNet (CNN) | Classification Accuracy | 98.73% | [13] |
| Defective Eggs (Cracked, Bloody, Dirty) | CNN (DenseNet201) + BiLSTM | Classification Accuracy | 99.17% | [14] |
| General Egg Grading & Defects | RTMDet + Random Forest | Classification Accuracy / Weight R² | 94.8% / 96.0% | [15] |

The Scientist's Toolkit: Research Reagent Solutions

This table lists essential materials and software tools for developing automated egg detection systems.

Table 2: Essential Research Tools and Reagents for Egg Detection Experiments

| Item Name | Function / Application | Specification Example |
|---|---|---|
| Roboflow | A web-based tool for annotating images, creating datasets, and applying augmentations for computer vision projects. | Used for drawing bounding boxes around parasite eggs to create training data [12]. |
| Kubic FLOTAC Microscope (KFM) | A portable digital microscope for autonomously analyzing fecal specimens for parasite eggs in both field and laboratory settings [16]. | Provides standardized image acquisition for building consistent datasets. |
| Ultralytics YOLOv5 | A state-of-the-art, open-source object detection model architecture known for its speed and accuracy. The 'n' (nano) version is optimized for edge devices. | Serves as the core detection algorithm; can be modified with attention modules like CA [11]. |
| Industrial Camera | High-resolution, high-frame-rate image acquisition for capturing clear images of moving objects. | e.g., Dahua A3200CU000 camera (1920x1080, 120 fps) for video-based egg tracking [11]. |
| ByteTrack | A simple and effective multi-object tracker that keeps track of object identities across video frames. | Used to associate detection results of the same egg across multiple video frames for robust classification [11]. |
| Coordinate Attention (CA) Module | A lightweight attention mechanism that enhances CNN features by capturing long-range dependencies along both spatial dimensions. | Integrated into the YOLOv5 backbone to improve feature representation for small objects [11]. |

This technical support center provides troubleshooting guides and FAQs to help researchers address specific challenges in microscopic egg detection, framed within the context of parameter optimization for YOLOv5n in egg detection research.

Troubleshooting Guides & FAQs

FAQ: How can I improve detection when eggs are clustered or overlapping?

Clustering and occlusion prevent the model from distinguishing individual egg instances.

- Solution 1: Architectural Modification with AFPN: Replace the standard Feature Pyramid Network (FPN) in YOLOv5n with an Asymptotic Feature Pyramid Network (AFPN). AFPN fully fuses spatial contextual information across different levels through its hierarchical and asymptotic aggregation structure. This adaptive fusion helps the model select beneficial features and ignore redundant information, improving the detection of closely packed objects and reducing computational complexity [17].

- Solution 2: Optimize Pre-processing with Image Enhancement: For low-light or low-contrast images that exacerbate clustering issues, apply pre-processing techniques. Using color spaces like Log RGB, which offers a higher dynamic range (32-bit) and superior illumination invariance compared to standard sRGB, can help reveal subtle details and improve the separation of adjacent eggs [18] [19].

FAQ: Why does my model perform poorly with a complex or noisy background?

Complex backgrounds introduce features that the model may confuse with the target eggs, leading to false positives and reduced precision.

- Solution 1: Integrate an Attention Mechanism: Add a Convolutional Block Attention Module (CBAM) to the YOLOv5n architecture. CBAM forces the model to pay more attention to key informational features in the image while suppressing less useful background features. This enhances the model's perception of target information [20].

- Solution 2: Employ Advanced Feature Extraction: Modify the backbone network by replacing the C3 module with a C2f module. The C2f module enriches gradient flow information, improving the backbone's feature extraction capability and allowing it to better discriminate between target eggs and background noise [17].

- Solution 3: Improve Optical Sectioning (Microscopy Technique): Ensure your imaging setup provides optimal optical sectioning. Confocal microscopy, for instance, uses a pinhole to suppress out-of-focus light, significantly reducing background signal. Using an array-based detector can further enhance background removal by discriminating between in-focus and out-of-focus light [21].

FAQ: How can I address insufficient or uneven illumination in my images?

Poor lighting leads to low contrast, color distortion, and a low signal-to-noise ratio, severely impacting detection accuracy [18].

- Solution 1: Troubleshoot the Microscope: Confirm the light source intensity is set correctly and that the substage condenser is properly positioned and centered. Ensure the field diaphragm is opened appropriately and that the correct size field stop is being used. For high-magnification oil objectives, verify that the oil contact between the condenser and slide is not broken [22].

- Solution 2: Utilize a Dedicated Low-Light Model: Consider implementing a specialized network like NLE-YOLO, which is designed for low-light conditions. It incorporates modules like C2fLEFEM to suppress high-frequency noise and enhance key information, and an Attentional Receptive Field Block (AMRFB) to broaden the receptive field and improve feature extraction in suboptimal lighting [18].

Experimental Protocols for YOLOv5n Optimization

Protocol 1: Enhancing Feature Fusion with AFPN

This protocol outlines the steps to replace the YOLOv5n's neck with an Asymptotic Feature Pyramid Network (AFPN) to better handle multi-scale objects like egg clusters [17].

Workflow Diagram: AFPN Integration for Egg Detection

Methodology:

- Baseline Establishment: Train and evaluate the standard YOLOv5n model on your egg dataset to establish a performance baseline (Precision, Recall, mAP@0.5).

- Neck Replacement: Modify the model architecture by replacing the existing FPN/PANet in the neck with the AFPN structure.

- Model Retraining: Retrain the modified model (YAC-Net architecture) on the same dataset, ensuring all other hyperparameters (e.g., learning rate, batch size) remain consistent with the baseline training for a fair comparison.

- Performance Evaluation: Compare the precision, recall, F1 score, and mAP@0.5 of the improved model against the baseline. The expected outcome is an improvement in all metrics, particularly recall and mAP, with a potential reduction in the total number of model parameters [17].

Protocol 2: Optimizing Color Space for Improved Contrast

This protocol evaluates the impact of different color space conversions as a pre-processing step to enhance image contrast and dynamic range, aiding in the separation of eggs from complex backgrounds [19].

Methodology:

- Dataset Sourcing: Utilize a dataset of raw microscope images (e.g., in DNG format) to preserve maximum spectral information.

- Color Space Conversion: Convert the raw images into several color spaces for experimentation. Key candidates should include:

  - sRGB: The standard, compressed 8-bit format (baseline).

  - Linear RGB: A 16-bit format that preserves data fidelity.

  - Log RGB: A 32-bit format that offers an enhanced dynamic range and illumination invariance [19].

- Model Training & Evaluation: Train separate, identical YOLOv5n models on datasets composed of images from each color space. Evaluate and compare the mean Average Precision (mAP) and mean Average Recall (mAR) across all models.

Table: Quantitative Comparison of Color Space Performance on Object Detection Models

| Color Space | Bit Depth | Key Characteristics | Reported mAP Improvement | Reported mAR Improvement |
|---|---|---|---|---|
| sRGB | 8-bit | Standard, compressed format | Baseline | Baseline |
| Linear RGB | 16-bit | Preserves data fidelity | Significant improvement over sRGB | Significant improvement over sRGB |
| Log RGB | 32-bit | Enhanced dynamic range, illumination invariance | 27.16% higher than sRGB [19] | 34.44% higher than sRGB [19] |

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential Materials and Computational Tools for Egg Detection Research

| Item Name | Function / Application |
|---|---|
| YOLOv5n Model | The baseline, lightweight one-stage object detection model upon which improvements are built [17]. |
| Asymptotic Feature Pyramid Network (AFPN) | A neck module that improves multi-scale object detection by adaptively fusing spatial context from different feature levels, ideal for clustered objects [17]. |
| C2f Module | A replacement for the C3 module in the backbone; enriches gradient flow to improve feature extraction and reduce information loss [17]. |
| Convolutional Block Attention Module (CBAM) | An attention mechanism that enhances model focus on key target regions (eggs) by sequentially applying channel and spatial attention, suppressing irrelevant backgrounds [20]. |
| Log RGB Color Space | A 32-bit color representation used in image pre-processing to enhance dynamic range and improve detection of subtle image details under varying illumination [19]. |
| Microscope Slide Sealant (e.g., Petroleum Jelly) | Used to seal the edges of coverslips on aqueous mounts, preventing specimen drift caused by evaporation and facilitating stable observation and image capture [22]. |
| Optical-Grade Lens Tissue & Cleaning Solvent | Essential for cleaning microscope slide, coverslip, and objective front lens surfaces to remove dust, fibers, and oil that can cause artifacts and obscure the specimen [22]. |

Frequently Asked Questions

FAQ 1: What is the fundamental difference between a convex and a non-convex loss function?

A convex loss function has a bowl-shaped curve where the line segment between any two points on the graph lies above or on the graph itself [23] [24]. This property guarantees that every local minimum is also a global minimum, making optimization more straightforward. In contrast, a non-convex loss function has a wavy landscape with multiple peaks and valleys. The line segment between two points may lie below the graph, creating numerous local minima where optimization algorithms can become trapped [23] [24] [25].

FAQ 2: Why is the local minima problem particularly relevant for optimizing deep learning models like YOLOv5n in egg detection?

The loss functions of deep learning models, including YOLOv5n used for egg detection, are inherently non-convex due to the complex, multi-layer architecture and high number of parameters [24]. This complexity creates a rugged loss landscape with many local minima. During optimization, algorithms may converge to a local minimum—a point that is optimal only in a small region—instead of the global minimum that represents the best possible model performance. This can result in a suboptimal model that fails to achieve the highest possible detection accuracy for tasks like identifying cracked eggs or parasites [26] [16].

FAQ 3: What practical strategies can help YOLOv5n escape local minima during training?

Several optimization techniques can mitigate the risk of getting stuck in local minima:

- Stochastic Gradient Descent (SGD): Instead of using the entire dataset to compute the gradient, SGD uses a single random sample at a time. This introduces noise into the parameter updates, which can help bounce the model out of shallow local minima [25].

- Mini-batch Gradient Descent: This is a hybrid approach that uses small, randomly selected subsets (mini-batches) of the data. It combines the stability of batch gradient descent with the noise-induced escape capability of SGD [25].

- Random Restart: Training the model multiple times with different random initializations of parameters increases the probability that one of the runs will converge towards a better, and potentially global, minimum [25].
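The random restart idea can be illustrated on a toy one-dimensional non-convex function that has one local and one global minimum. The function, learning rate, and number of restarts below are arbitrary choices for illustration. A real YOLOv5n loss landscape is far higher-dimensional, and each "restart" there is a full training run with a different seed.

```python
import random


def f(x):
    """Toy non-convex loss with a local minimum (x > 0) and a global one (x < 0)."""
    return x**4 - 3 * x**2 + x


def grad(x):
    """Derivative of f."""
    return 4 * x**3 - 6 * x + 1


def descend(x, lr=0.01, steps=500):
    """Plain gradient descent from a given starting point."""
    for _ in range(steps):
        x -= lr * grad(x)
    return x


def random_restart(n_restarts=10, seed=0):
    """Run gradient descent from several random starts and keep the best end point."""
    rng = random.Random(seed)
    finals = [descend(rng.uniform(-2.0, 2.0)) for _ in range(n_restarts)]
    return min(finals, key=f), finals


stuck = descend(1.5)    # starts in the basin of the local minimum
better = descend(-1.5)  # starts in the basin of the global minimum
best, finals = random_restart()
print(f(stuck), f(better), f(best))
```

A single run started at 1.5 settles in the shallower valley, while the restart procedure returns the lowest end point among all of its runs.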

FAQ 4: How can I assess if my YOLOv5n model has converged to a local minimum?

A strong indicator is when your training loss stops improving (converges) but the model's performance on a validation set (e.g., measured by mAP - mean Average Precision) remains unsatisfactorily low. This suggests the model has found a suboptimal solution. Furthermore, if small changes in the training setup—such as a different random seed or learning rate—lead to significantly different final performance, it often signifies that the model is navigating a non-convex landscape with multiple local minima.

Troubleshooting Guide: Overcoming Local Minima in Egg Detection Research

Problem: The YOLOv5n model for detecting cracked or parasite eggs has plateaued at a suboptimal performance level, likely stuck in a local minimum.

Symptoms:

- Training loss does not decrease over several epochs.

- Validation metrics like precision, recall, and mAP remain low and unstable.

- Model performance varies drastically with different parameter initializations.

Solution: Implement a combined strategy using advanced optimizers and tailored training protocols.

Step-by-Step Resolution:

Switch to a Robust Optimizer: Replace standard SGD with an optimizer that has built-in mechanisms to handle non-convex landscapes. Adam (Adaptive Moment Estimation) is often a good choice as it uses adaptive learning rates for each parameter, which can help navigate flat regions and saddle points commonly found near local minima [27].

Apply a Learning Rate Schedule: Instead of a fixed learning rate, use a schedule that gradually reduces the learning rate during training. Starting with a higher rate can help escape local minima early on, while carefully lowering it later allows for fine-tuned convergence. Cyclical learning rates can also be explored.
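As a minimal sketch of such a schedule, the functions below compute a linear and a cosine decay from lr0 down to lr0 * lrf. The parameter names mirror the lr0 and lrf hyperparameters discussed in this guide; the exact scheduler used by a given YOLOv5 release may differ, so treat this as an illustration rather than the library's implementation.

```python
import math

def linear_lr(epoch, epochs, lr0=0.01, lrf=0.01):
    """Linear decay from lr0 at epoch 0 to lr0 * lrf at the final epoch."""
    return lr0 * ((1 - epoch / epochs) * (1.0 - lrf) + lrf)

def cosine_lr(epoch, epochs, lr0=0.01, lrf=0.01):
    """Cosine decay from lr0 at epoch 0 to lr0 * lrf at the final epoch."""
    return lr0 * (((1 - math.cos(epoch * math.pi / epochs)) / 2) * (lrf - 1) + 1)

# Print the learning rate at the start, middle, and end of a 300-epoch run.
for e in (0, 150, 300):
    print(e, round(linear_lr(e, 300), 6), round(cosine_lr(e, 300), 6))
```

Both schedules share the same endpoints; the cosine variant stays near the initial rate longer early on and flattens out near the end.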

Utilize Multi-Domain Training (if applicable): If your egg data comes from different sources (e.g., different farms, lighting conditions, or camera setups), employ a multi-domain training strategy. As shown in cracked egg detection research, using methods like Maximum Mean Discrepancy (MMD) can help the model learn domain-invariant features, which improves generalization and robustness on unseen data, effectively leading to convergence at a better minimum [26].
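To make the MMD idea concrete, here is a small sketch of a biased squared-MMD estimate with an RBF kernel. It is not the method from the cited study; the kernel choice, the gamma value, and the two tiny "domains" of 2-D feature vectors are assumptions made purely for illustration.

```python
import math

def rbf_kernel(a, b, gamma=1.0):
    """Gaussian (RBF) kernel between two feature vectors."""
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-gamma * sq_dist)

def mmd_squared(xs, ys, gamma=1.0):
    """Biased estimate of squared MMD between two samples of feature vectors."""
    def mean_kernel(ps, qs):
        total = sum(rbf_kernel(p, q, gamma) for p in ps for q in qs)
        return total / (len(ps) * len(qs))
    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2 * mean_kernel(xs, ys)

# Hypothetical feature vectors from two acquisition setups.
domain_a = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.2)]
domain_b = [(1.0, 1.1), (1.2, 0.9), (0.9, 1.0)]

print(mmd_squared(domain_a, domain_a))  # identical samples give zero
print(mmd_squared(domain_a, domain_b))  # shifted samples give a positive value
```

In a multi-domain training setup, a term of this kind is typically added to the detection loss so that features from different domains are pulled toward the same distribution.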

Experimental Data & Performance

Table 1: Impact of Multi-Domain Training on YOLO Model Robustness for Cracked Egg Detection

This table summarizes experimental results from a study that tackled domain shift in cracked egg detection. A multi-domain training strategy based on Maximum Mean Discrepancy (listed as NSFE-MMD in the table) improved model performance on previously unseen egg data [26].

| Model Variant | Training Data | mAP on Unseen Domain 4 | mAP on Unseen Domain 5 | Notes |

|---|---|---|---|---|

| YOLOv5n | Single Domain (Domain 2) | 78.6% | Not Reported | Baseline performance on a single data source [26]. |

| YOLOv5n | All Domains (1,2,3) | 81.9% | Not Reported | Simple combination of all training data [26]. |

| YOLOv5n | Multi-Domain w/ NSFE-MMD | 86.6% | 87.9% | Proposed method; enhanced extraction of domain-invariant features [26]. |

| YOLOv8 | Single Domain (Domain 2) | 84.4% | Not Reported | Baseline for a newer model architecture [26]. |

| YOLOv8 | Multi-Domain w/ NSFE-MMD | 88.8% | Not Reported | Demonstrates the method's effectiveness on different architectures [26]. |

Table 2: Comparison of Gradient Descent Variants for Non-Convex Optimization

This table outlines the core characteristics of different gradient descent algorithms, which are crucial for training models on non-convex loss functions [25].

| Algorithm | Mechanism | Advantages | Disadvantages | Suitability for Non-Convex Problems |

|---|---|---|---|---|

| Batch Gradient Descent (BGD) | Uses the entire training set to compute each update. | Stable convergence, smooth path to minimum. | Computationally slow per step; can get stuck in local minima. | Low; lacks mechanisms to escape local minima [25]. |

| Stochastic Gradient Descent (SGD) | Uses a single, randomly selected sample per update. | Fast updates; inherent noise helps escape local minima. | Noisy convergence path; can overshoot. | High; the stochastic noise is beneficial for escaping local minima [25]. |

| Mini-Batch Gradient Descent | Uses a small, random subset (mini-batch) of data per update. | Balances speed (SGD) and stability (BGD). | Introduces some noise, but less than SGD. | High; a good practical compromise and widely used [25]. |

The Scientist's Toolkit

Table 3: Essential Research Reagents and Computational Materials for Egg Detection Experiments

| Item | Function in Research | Example from Literature |

|---|---|---|

| Multi-Domain Egg Dataset | Provides varied data to train robust models that generalize to unseen environments. | Eggs from different origins (Wuhan, Qingdao) and acquisition systems (static light box, dynamic production line) [26]. |

| YOLOv5n Model | A lightweight, one-stage object detection model ideal for real-time applications and deployment on resource-constrained hardware. | Used as the base detector for cracked eggs and parasite eggs [26] [16]. |

| Maximum Mean Discrepancy (MMD) | A statistical measure used to compare distributions. Helps align feature distributions from different domains during training. | Applied in a multi-domain training strategy to improve YOLO model performance on unknown egg domains [26]. |

| Jetson AGX Orin / Embedded System | A powerful embedded computer for deploying and running trained models in real-time industrial settings. | Used to deploy an enhanced YOLOv8s model for real-time egg quality monitoring in a cage environment [28]. |

Hyperparameter FAQs & Troubleshooting

This guide addresses common questions and issues researchers encounter when optimizing YOLOv5n for specialized detection tasks, such as in egg detection research.

FAQ 1: My model performance drops significantly when I resume training from a checkpoint. What hyperparameters should I adjust?

A sudden drop in performance, where metrics like mAP fall from 0.5 to nearly 0.1 within a few epochs, is often caused by an improperly configured learning rate for the continuation of training [29].

- Primary Cause: The learning rate at the start of the new training session is likely too high, causing the model to overshoot the optimized weights from the previous training (best.pt) [29].

- Solution: When continuing training, reduce the initial learning rate (lr0). A good strategy is to restart from the last saved checkpoint and use a lower lr0 value, such as one-tenth of its previous value, to allow for finer weight adjustments [30] [29]. Note that the --resume argument is intended to continue an interrupted run with its original settings, so applying a different lr0 generally means starting a new run from the checkpoint weights (--weights) with a modified hyperparameter file (--hyp).
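The one-tenth rule can be written down as a small helper. This is only a sketch: the dictionary stands in for the contents of a hyperparameter YAML file, and the function name and the values shown are hypothetical.

```python
def finetune_hyp(hyp, lr_scale=0.1):
    """Return a copy of the hyperparameters with the initial learning rate scaled down."""
    new_hyp = dict(hyp)  # leave the original settings untouched
    new_hyp["lr0"] = hyp["lr0"] * lr_scale
    return new_hyp

# Hypothetical settings from the previous training run.
previous = {"lr0": 0.01, "lrf": 0.01, "momentum": 0.937}
print(finetune_hyp(previous))
```

The returned values would then be saved to a new hyperparameter file and used for the continued run.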

FAQ 2: Which data augmentations are most critical for object detection in agricultural products like eggs?

The choice of augmentation should reflect the real-world conditions of your application. For egg detection on a processing line, certain transformations are more relevant than others [31].

- Recommended Augmentations:

  - Color Adjustments (HSV): Crucial for handling variations in lighting conditions on a production line. Adjusting Hue (hsv_h), Saturation (hsv_s), and Value (hsv_v) can simulate different lighting, making the model robust to these changes [32] [31].

  - Geometric Transformations: Translation (translate) and scaling (scale) help the model recognize objects that are partially visible or at different distances from the camera, which can occur due to conveyor speed or camera angle variations [32].

- Augmentations to Use Cautiously:

  - Rotation and Shear: Large rotations or shear are generally not applicable for eggs on a conveyor belt, as the overall orientation is typically stable. Small values can be used for minor positional variations, but extreme values do not reflect real-world scenarios and can degrade performance [32].

FAQ 3: How can I systematically find the best hyperparameter values for my specific dataset?

Manual tuning can be inefficient. YOLOv5 includes a Hyperparameter Evolution feature that uses a genetic algorithm to automatically find optimal values [33].

- Methodology: The genetic algorithm performs the base training scenario hundreds of times over multiple generations. In each generation, it mutates hyperparameters and selects the best-performing offspring based on a fitness function to create the next generation [33].

- Fitness Function: The default fitness is a weighted combination of metrics: 90% mAP@0.5:0.95 and 10% mAP@0.5 [33].

- Protocol:

  - Start with a base training command using your dataset and a pre-trained model.

  - Append the --evolve argument (e.g., python train.py --data your_dataset.yaml --weights yolov5n.pt --evolve).

  - The process will run for a default of 300 generations, saving the best hyperparameters to a YAML file (runs/evolve/hyp_evolved.yaml). A minimum of 300 generations is recommended for reliable results [33].

  - Use the evolved hyperparameters for your final model training.

Quantitative Hyperparameter Reference Tables

Core Training Hyperparameters and Their Functions

This table outlines the key hyperparameters that control the YOLOv5n optimization process [30] [33].

| Hyperparameter | Default Value | Description & Function in Optimization |

|---|---|---|

| lr0 | 0.01 | The initial learning rate (SGD=1E-2, Adam=1E-3). It controls the step size during weight updates; too high causes instability, too low leads to slow convergence [30] [33]. |

| lrf | 0.01 | The final learning rate as a fraction of lr0 (lr0 * lrf). Used with schedulers to gradually reduce the learning rate, allowing finer tuning in later training stages [30] [33]. |

| momentum | 0.937 | The momentum factor for SGD or beta1 for Adam. It helps accelerate convergence by adding a fraction of the previous update to the current one, smoothing the path through the loss landscape [30] [33]. |

| weight_decay | 0.0005 | The L2 regularization term. It penalizes large weights in the model, helping to prevent overfitting by encouraging smaller, simpler weights [30] [33]. |

| warmup_epochs | 3.0 | The number of epochs for a linear learning rate warm-up. Gradually increasing the learning rate from a low value stabilizes training in the initial phases [30] [33]. |

Data Augmentation Parameters and Their Visual Effects

This table describes key augmentation parameters in YOLOv5 that enhance model generalization [32].

| Hyperparameter | Default | Range | Purpose & Visual Effect |

|---|---|---|---|

| hsv_h | 0.015 | 0.0 - 1.0 | Hue adjustment. Shifts image colors while preserving relationships. Useful for simulating different lighting conditions (e.g., a banana under sunlight vs. indoor light) [32]. |

| hsv_s | 0.7 | 0.0 - 1.0 | Saturation adjustment. Modifies color intensity. Helps models handle different weather and camera settings (e.g., a vivid vs. faded traffic sign) [32]. |

| hsv_v | 0.4 | 0.0 - 1.0 | Brightness/Value adjustment. Changes image brightness. Essential for performance under varying lighting (e.g., an apple in sun vs. shade) [32]. |

| translate | 0.1 | 0.0 - 1.0 | Image translation. Shifts images horizontally/vertically. Teaches the model to detect partially visible objects and be robust to positional changes [32]. |

| scale | 0.5 | ≥ 0.0 | Image scaling. Zooms images in or out. Enables the model to handle objects at different distances and sizes [32]. |

| fliplr | 0.5 | 0.0 - 1.0 | Left-right flip probability. A simple and effective augmentation for datasets where object orientation is not fixed, like aerial imagery [32] [31]. |

Experimental Protocols for Egg Detection Research

Protocol 1: Establishing a Baseline with Default Hyperparameters

Before optimization, establish a performance baseline [34].

- Dataset Preparation: Organize your annotated egg images in YOLO format, with a dataset YAML file defining paths and class names (e.g., egg_dataset.yaml) [34].

- Initial Training: Train the YOLOv5n model using default hyperparameters.

- Validation: Evaluate the resulting best.pt model on your validation set. The reported mAP will serve as your baseline for future comparisons.
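The dataset YAML mentioned in the first step is a short text file. The sketch below generates one with the standard library only; the directory layout (images/train, images/val under a dataset root), the root path, and the class names are assumptions to adapt to your own data.

```python
def dataset_yaml(root, class_names):
    """Build the text of a YOLO-style dataset configuration file."""
    lines = [
        f"path: {root}",        # dataset root directory (hypothetical)
        "train: images/train",  # training images, relative to path
        "val: images/val",      # validation images, relative to path
        "names:",
    ]
    lines += [f"  {index}: {name}" for index, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"

yaml_text = dataset_yaml("datasets/eggs", ["intact_egg", "cracked_egg"])
print(yaml_text)
```

Writing yaml_text to egg_dataset.yaml gives a file that can be passed to the training script through its --data argument.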

Protocol 2: Hyperparameter Evolution for Egg Detection

This protocol uses automated search to optimize hyperparameters for your specific data [33].

- Setup: Ensure your dataset and environment are correctly configured.

- Execute Evolution: Run the training command with the --evolve flag. For a more extensive search, increase the number of generations.

- Apply Results: The best-found hyperparameters are saved in runs/evolve/hyp_evolved.yaml. Use this file for your final training.

Workflow Visualization

Hyperparameter Optimization Workflow

This diagram illustrates the systematic process for optimizing YOLOv5n hyperparameters, from baseline establishment to final model evaluation.

The Scientist's Toolkit: Research Reagent Solutions

This table lists essential "research reagents" – the key software and data components required for conducting YOLOv5n hyperparameter optimization experiments.

| Item | Function in Research |

|---|---|

| YOLOv5n Pre-trained Weights | Provides the foundational model architecture (.pt file). Transfer learning from these weights, trained on large datasets like COCO, significantly accelerates convergence and improves final accuracy on custom tasks like egg detection [30] [34]. |

| Annotated Custom Dataset (YOLO format) | The core "reagent" for your specific experiment. Requires images with corresponding .txt label files specifying bounding boxes. For egg detection, this dataset must represent the visual conditions of the production environment [34]. |

| Dataset Configuration YAML | A configuration file (.yaml) that informs the training script about the dataset's location, the paths to training/validation images, and the list of class names [34]. |

| Hyperparameter Configuration File | A YAML file (e.g., hyp.yaml) that defines all augmentation and optimization hyperparameters. This is the primary file modified during manual tuning or generated by the evolution process [33]. |

| Hyperparameter Evolution Script | The built-in genetic algorithm (train.py --evolve) that automates the search for optimal hyperparameter values, saving significant researcher time compared to manual grid searches [33]. |

A Step-by-Step Methodology for Hyperparameter Evolution in YOLOv5n

Frequently Asked Questions

Q1: What are baseline hyperparameters and why are they critical for my YOLOv5n egg detection research?

Baseline hyperparameters are the default, unoptimized settings for your model's training process. Establishing a baseline is the most important first step in any experiment as it provides a performance benchmark. This allows you to determine if subsequent optimization efforts are genuinely improving your model or adding unnecessary complexity. Researchers should always train with default hyperparameters first before considering any modifications to establish this crucial performance baseline [35] [36].

Q2: How do I define a fitness function for optimizing my egg detection model?

In the context of genetic algorithm-based hyperparameter tuning in YOLOv5, the fitness function is a single metric that quantifies the performance and quality of your model. It acts as a guide for the optimization process, evaluating potential solutions (hyperparameter sets) and directing the algorithm toward optimal performance [37] [38]. For object detection tasks, this function is typically a weighted combination of key metrics like precision, recall, and mean Average Precision (mAP). A common default fitness function used is fitness = 0.1 * mAP@0.5 + 0.9 * mAP@0.5:0.95, which strongly emphasizes performance across multiple Intersection over Union (IoU) thresholds [38].
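As a worked example of this formula, assume a hypothetical run that reaches mAP@0.5 = 0.80 and mAP@0.5:0.95 = 0.55 (illustrative values, not results from any study):

$$
\text{fitness} = 0.1 \times 0.80 + 0.9 \times 0.55 = 0.08 + 0.495 = 0.575
$$

Because of the 0.9 weight, a gain of 0.01 in mAP@0.5:0.95 raises the fitness by 0.009, whereas the same gain in mAP@0.5 raises it by only 0.001.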

Q3: My model's fitness score is poor from the start. Should I immediately begin hyperparameter evolution?

No. A poor initial fitness score is most often a data or configuration issue, not a hyperparameter one. Immediately tuning hyperparameters can waste computational resources and obscure the root cause. Prioritize investigating your dataset first. Check for common problems like incorrect label paths, inconsistent annotations, too few images, or a lack of variety in your training data. Verify your dataset's integrity before proceeding to hyperparameter tuning [36].

Q4: What is the recommended number of epochs to establish a reliable baseline for a custom egg dataset?

For a baseline training run, start with 300 epochs [35] [36]. This provides sufficient time to observe whether the model is learning effectively or if it is starting to overfit. If overfitting occurs early (e.g., validation loss starts increasing while training loss continues to decrease), you can reduce this number. Conversely, if the model is still improving after 300 epochs, you may train longer, for instance, 600 or 1200 epochs, especially for complex detection tasks [35].

Q5: Which metrics, beyond the fitness score, should I continuously monitor during training?

While the fitness score is a key summary metric, you should actively monitor its underlying components to diagnose issues [9]. The table below outlines the key metrics to track:

| Metric | Description | What It Indicates |

|---|---|---|

| Precision | Proportion of correct positive predictions (True Positives / (True Positives + False Positives)) [6]. | How reliable the model's detections are; a low value suggests many false alarms. |

| Recall | Proportion of true positives detected (True Positives / (True Positives + False Negatives)) [6]. | The model's ability to find all relevant objects; a low value suggests many missed detections. |

| mAP@0.5 | Mean Average Precision at IoU threshold of 0.5 [6]. | Overall detection accuracy with a lenient overlap requirement. |

| mAP@0.5:0.95 | mAP averaged over IoU thresholds from 0.5 to 0.95 in 0.05 increments [39]. | A stricter, more comprehensive measure of localization accuracy. |

| Box Loss | Bounding box regression loss (an IoU-based loss, CIoU, in YOLOv5) [6]. | How well the model is learning to predict the location and size of bounding boxes. |

| Obj Loss | Objectness loss (Binary Cross-Entropy) [6]. | How well the model is estimating the confidence that an object exists. |

| Cls Loss | Classification loss (Binary Cross-Entropy per class) [6]. | How well the model is distinguishing between different classes (if multiple exist). |
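The definitions in the table can be checked by hand with a few lines of code. The sketch below computes IoU for two axis-aligned boxes and precision and recall from detection counts; the box format (x1, y1, x2, y2) and the example counts are illustrative assumptions.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

print(iou((0, 0, 2, 2), (1, 1, 3, 3)))       # overlap of 1 over a union of 7
print(precision_recall(tp=8, fp=2, fn=4))    # 8 of 10 detections correct, 8 of 12 eggs found
```

A detection counts as a true positive at mAP@0.5 only if its IoU with a ground-truth box is at least 0.5, which is why the first example (IoU of about 0.14) would be scored as a miss.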

Experimental Protocols

Protocol 1: Establishing a Performance Baseline with YOLOv5n

This protocol outlines the standard procedure for initiating training to establish a performance baseline for your egg detection model [35] [6].

- Data Preparation: Ensure your dataset is structured correctly and a YAML file (e.g., egg_data.yaml) points to your training and validation splits and defines the class names [6].

- Initialization: Use a pre-trained model. For the YOLOv5n model, this is done with the --weights yolov5n.pt argument. Transfer learning from pre-trained weights is recommended for small to medium-sized datasets and leads to faster convergence and better performance [35] [36].

- Baseline Training Command: Execute a training run with default settings.

- Analysis: Upon completion, analyze the results in the runs/train/exp directory. Key files include results.png (loss and metrics plots), confusion_matrix.png, and the validation batch predictions. This establishes your baseline for future comparisons [35].
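The baseline training step above can be sketched as a command assembled in Python. The sketch assumes it is run from the root of a YOLOv5 repository checkout; the dataset file name and the 640-pixel image size are placeholders, and the 300-epoch value follows the recommendation earlier in this section.

```python
import shlex

def baseline_command(data="egg_data.yaml", weights="yolov5n.pt", epochs=300, imgsz=640):
    """Assemble a train.py call that leaves all hyperparameters at their defaults."""
    return [
        "python", "train.py",
        "--data", data,        # dataset configuration file (placeholder name)
        "--weights", weights,  # pre-trained nano weights for transfer learning
        "--epochs", str(epochs),
        "--imgsz", str(imgsz),
    ]

cmd = baseline_command()
print(shlex.join(cmd))
# To launch the run: import subprocess; subprocess.run(cmd, check=True)
```

No --hyp argument is passed, so the script falls back to its default hyperparameter file, which is the point of a baseline run.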

The following workflow diagram summarizes this baseline establishment process:

Protocol 2: Automated Hyperparameter Tuning with Genetic Evolution

Once a baseline is established, you can use this protocol for automated optimization. Ultralytics YOLO uses a genetic algorithm that mutates hyperparameters to maximize the fitness score [38].

- Define a Search Space: Create a dictionary specifying the hyperparameters you wish to tune and their value ranges. You can start with a subset of critical parameters.

- Execute the Tuning Process: Use the tune() method to run the genetic algorithm for a set number of iterations. Warning: Tuning derived from short runs may not be optimal. For best results, tune under conditions similar to your final training (e.g., same number of epochs) [38].

- Results: The best-performing set of hyperparameters will be saved in a file named best_hyperparameters.yaml within the tune directory, which you can use for your final model training [38].
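A minimal sketch of this protocol with the ultralytics Python package follows. Several details are assumptions: the weight file name "yolov5nu.pt" (the package's name for its YOLOv5n variant), the dataset file name, the chosen subset of hyperparameters, and the epoch and iteration counts. The bounds are taken from the search ranges quoted in this guide. Check the keyword arguments against the documentation of your installed version before running.

```python
# Search space: hyperparameter name -> (lower bound, upper bound).
search_space = {
    "lr0": (1e-5, 1e-1),
    "momentum": (0.6, 0.98),
    "weight_decay": (0.0, 0.001),
    "hsv_v": (0.0, 0.9),
    "translate": (0.0, 0.9),
}

def run_tuning(data_yaml="egg_data.yaml", epochs=30, iterations=300):
    """Run the genetic tuner; requires `pip install ultralytics` and a prepared dataset."""
    # Imported lazily so the search space can be inspected without the package installed.
    from ultralytics import YOLO

    model = YOLO("yolov5nu.pt")  # assumed weight name for YOLOv5n in this package
    return model.tune(
        data=data_yaml,
        epochs=epochs,
        iterations=iterations,
        space=search_space,
        plots=False,
        save=False,
        val=False,
    )

# Call run_tuning() once the dataset YAML exists; it is not executed here.
```

Keeping the search space to a handful of parameters, as the first step suggests, shortens the search considerably compared with tuning every hyperparameter at once.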

The Scientist's Toolkit: Research Reagent Solutions

The following table details key computational "reagents" and their functions in the YOLOv5n optimization pipeline.

| Item | Function in the Experiment | Reference / Specification |

|---|---|---|

| Pretrained Weights (yolov5n.pt) | Provides a starting point for training, leveraging features learned on a large dataset (e.g., COCO). Dramatically improves convergence and performance on small datasets. | Ultralytics YOLOv5n [35] [6] |

| Hyperparameter Evolution | An automated method using genetic algorithms to mutate hyperparameters to maximize the fitness function, navigating the high-dimensional search space efficiently. | Ultralytics model.tune() [38] |

| Fitness Function | A single, weighted metric that guides hyperparameter evolution by quantifying model performance. It balances precision and recall across different IoU thresholds. | Default: 0.1*mAP@0.5 + 0.9*mAP@0.5:0.95 [38] |

| MCA Module | An attention mechanism that can be integrated into the network to enhance feature representation by dynamically learning the importance of each channel in the feature map. | Multiple Channel Attention (MCA) [40] |

| CARAFE Operator | A lightweight up-sampling operator that can reassemble features based on content, potentially preserving more detailed information compared to traditional interpolation. | Lightweight Content-aware Re-assembly of Features (CARAFE) [40] |

Hyperparameter evolution using genetic algorithms (GA) provides a powerful method for automatically optimizing the numerous training parameters in YOLOv5 models. Unlike traditional grid or manual searches that become computationally intractable in high-dimensional spaces, genetic algorithms efficiently navigate the complex hyperparameter landscape by mimicking natural selection processes. For researchers working on specialized detection tasks like egg detection, this approach systematically evolves better hyperparameter sets over generations of training experiments, ultimately leading to improved model performance metrics such as mean Average Precision (mAP) and recall. The implementation is particularly valuable for optimizing YOLOv5n, a lightweight version suitable for deployment in resource-constrained environments common in agricultural and biological research settings [33] [38].

Frequently Asked Questions (FAQs)

Q1: What is hyperparameter evolution and how does it differ from manual tuning?

Hyperparameter evolution is an automated optimization method that uses genetic algorithms to find optimal training parameters, whereas manual tuning relies on researcher intuition and trial-and-error. The genetic approach creates successive "generations" of hyperparameter sets by selecting the best performers from previous rounds and introducing mutations to explore new combinations. This method is particularly effective for YOLOv5's approximately 30 hyperparameters because it efficiently handles the high-dimensional search space and unknown correlations between parameters that make grid searches computationally prohibitive [33] [41].

Q2: Which hyperparameters can be optimized using evolution in YOLOv5?

YOLOv5's hyperparameter evolution can optimize a comprehensive set of parameters covering learning rates, data augmentation, and loss function weights. The default search space includes:

- Learning parameters: lr0 (initial learning rate), lrf (final learning rate factor), momentum, weight_decay

- Loss weights: box (bounding box loss gain), cls (classification loss gain), obj (objectness loss gain)

- Data augmentation: hsv_h, hsv_s, hsv_v (HSV augmentation), degrees (rotation), translate, scale, mosaic probability [33] [38]

Q3: What computational resources does hyperparameter evolution require?

Hyperparameter evolution is computationally intensive, typically requiring hundreds of GPU hours for meaningful results. The official documentation recommends a minimum of 300 generations (training runs) for reliable optimization, which can translate to significant computational time depending on your dataset size and model architecture. For reference, a study on urinary particle detection utilizing YOLOv5 with an evolutionary genetic algorithm required substantial resources to achieve an 85.8% mAP [33] [42].

Q4: How is "fitness" defined and measured during evolution?

Fitness is the key metric that the genetic algorithm seeks to maximize. In YOLOv5, the default fitness function is a weighted combination of validation metrics: mAP@0.5 contributes 10% of the weight, while mAP@0.5:0.95 contributes the remaining 90%. Precision and recall are typically not included in this fitness calculation. Researchers working on egg detection can customize these weights based on their specific requirements, such as prioritizing recall if missing detection is a critical issue [33] [41].

Q5: Can I resume an interrupted hyperparameter evolution session?

Yes, interrupted evolution sessions can be resumed. In the Ultralytics YOLO ecosystem, you can pass resume=True to the tune() method, and it will automatically continue from the last saved state. For custom implementations, maintaining detailed logs of each generation's parameters and fitness scores enables seamless resumption of the optimization process [38].

Troubleshooting Guides

Problem: Poor Fitness Improvement Over Generations

Symptoms: Fitness scores plateau or show minimal improvement across multiple generations.

Solutions:

- Verify your base scenario: Ensure your initial model training (without evolution) produces reasonable results. Evolution optimizes around a base scenario, so a poorly performing starting point limits potential gains [33].

- Expand the search space: Overly restrictive parameter ranges may prevent the algorithm from finding optimal regions. Review your parameter bounds against established defaults [38].

- Increase mutation rate: The default mutation probability is 80% with 0.04 variance. Consider increasing variance to 0.1 for more exploratory search in early generations [41] [43].

- Check parameter correlations: Some parameters may have interdependent effects that require coordinated adjustment.

Problem: Excessive Training Time Per Generation

Symptoms: Each evolution iteration takes impractically long, limiting the number of generations achievable.

Solutions:

- Reduce dataset size: Use a representative subset of your full dataset during evolution, then do final training with optimized parameters on the complete dataset [38].

- Adjust training epochs: Fewer epochs per generation can provide sufficient signal for hyperparameter evaluation while dramatically reducing iteration time.

- Enable caching: Use the --cache option to speed up data loading [33] [41].

- Distribute across multiple GPUs: Implement parallel evolution using the provided multi-GPU scripts to process multiple generations simultaneously [33].
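The last two suggestions can be combined in a short launcher sketch: one evolution process per GPU, each with caching enabled and a staggered start. The GPU ids, the 30-second stagger, the 10 epochs per generation, and the dataset file name are illustrative assumptions; the sketch only builds and prints the commands.

```python
import shlex

def evolve_commands(gpu_ids, data="egg_data.yaml", weights="yolov5n.pt",
                    epochs=10, generations=300, stagger_s=30):
    """Return (delay_seconds, argv) pairs, one evolution process per GPU."""
    jobs = []
    for n, gpu in enumerate(gpu_ids):
        argv = [
            "python", "train.py",
            "--data", data,
            "--weights", weights,
            "--epochs", str(epochs),   # short runs keep each generation cheap
            "--cache",                 # cache images to speed up data loading
            "--device", str(gpu),      # pin this process to one GPU
            "--evolve", str(generations),
        ]
        jobs.append((n * stagger_s, argv))  # stagger launches to avoid simultaneous startup
    return jobs

for delay, argv in evolve_commands([0, 1]):
    print(f"after {delay:>3d}s: {shlex.join(argv)}")
```

Each printed command would be started in the background after its delay, for example with subprocess.Popen, from the root of the YOLOv5 repository.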

Problem: Unstable Training During Evolution

Symptoms: Training losses show NaN values or extreme fluctuations between generations.

Solutions:

- Constrain learning rate bounds: Overly high learning rates can cause divergence. Restrict lr0 to evidence-based ranges (1e-5 to 1e-1) [38].

- Review augmentation limits: Excessive data augmentation parameters (particularly degrees, shear, perspective) can create unrealistic training samples. Conservative bounds often perform better.

- Check batch size compatibility: Ensure your batch size is appropriate for your model size and GPU memory. The yolov5n model typically works with batch sizes of 16-64 [44].

- Monitor gradient norms: Implement gradient clipping if explosions are detected, though this may require modifying the training script.

Problem: Optimized Parameters Don't Generalize to Full Training

Symptoms: Parameters that perform well during evolution yield poor results when applied to full training.

Solutions:

- Align evolution and final training conditions: Ensure your evolution settings (image size, epochs, dataset) closely match your final training setup [38].

- Avoid underfitting during evolution: Insufficient epochs during evolution may favor parameters that converge quickly but plateau early.

- Validate with multiple seeds: The stochastic nature of training means single runs may not represent true parameter quality. Consider evaluating promising parameters with multiple random seeds.

- Inspect for overfitting to validation set: If using a small validation set during evolution, parameters may overfit to that specific data.

Hyperparameter Search Spaces and Results

Default Hyperparameter Evolution Ranges

The following table summarizes the default search space for YOLOv5 hyperparameter evolution, based on the official Ultralytics documentation and research implementations [33] [38]:

| Parameter | Description | Default Value | Search Range | Type |

|---|---|---|---|---|

| lr0 | Initial learning rate | 0.01 | (1e-5, 1e-1) | Float |

| lrf | Final learning rate factor | 0.01 | (0.01, 1.0) | Float |

| momentum | SGD momentum/Adam beta1 | 0.937 | (0.6, 0.98) | Float |

| weight_decay | Optimizer weight decay | 0.0005 | (0.0, 0.001) | Float |

| warmup_epochs | Warmup epochs | 3.0 | (0.0, 5.0) | Float |

| box | Box loss gain | 0.05 | (0.02, 0.2) | Float |

| cls | Class loss gain | 0.5 | (0.2, 4.0) | Float |

| hsv_h | Image HSV-Hue augmentation | 0.015 | (0.0, 0.1) | Float |

| hsv_s | Image HSV-Saturation augmentation | 0.7 | (0.0, 0.9) | Float |

| hsv_v | Image HSV-Value augmentation | 0.4 | (0.0, 0.9) | Float |

| degrees | Image rotation (+/- deg) | 0.0 | (0.0, 45.0) | Float |

| translate | Image translation (+/- fraction) | 0.1 | (0.0, 0.9) | Float |

| scale | Image scale (+/- gain) | 0.5 | (0.0, 0.9) | Float |

| fliplr | Image flip left-right probability | 0.5 | (0.0, 1.0) | Float |

Sample Evolution Results from Research Studies

The table below showcases published results demonstrating the effectiveness of hyperparameter evolution across different domains:

| Study/Application | Model | Base mAP | Evolved mAP | Improvement | Key Parameters Optimized |

|---|---|---|---|---|---|

| Urinary Particle Detection [42] | YOLOv5l | Not specified | 85.8% | Significant | Learning rates, augmentation |

| Kidney Bean Brown Spot [45] | YOLOv5-SE-BiFPN | 89.8% (Precision) | 94.7% (Precision) | +4.9% Precision | Learning rate, augmentation parameters |

| Lion-Head Goose Detection [46] | YOLOv8s (Improved) | 86.3% (mAP50) | 96.4% (mAP50) | +10.1% | Data augmentation, loss weights |

Experimental Protocol for Egg Detection Research

Base Model Setup

- Model Selection: Begin with YOLOv5n for its balance of performance and efficiency suitable for egg detection scenarios [46].

- Dataset Preparation: Curate a diverse set of egg images under various conditions (lighting, orientations, backgrounds). Ensure representative validation and test sets.

- Initial Configuration: Use default hyperparameters from data/hyps/hyp.scratch-low.yaml as your baseline [33].

- Fitness Definition: Implement a custom fitness function if standard mAP weights don't align with egg detection priorities, for example by giving recall a nonzero weight when missed eggs are costly.
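A custom fitness function of the kind described in the last step can be sketched as a weighted sum over [P, R, mAP@0.5, mAP@0.5:0.95]. The default weights below are the ones quoted in this guide; the recall-oriented weights and the example metric values are hypothetical and would need to be chosen for your own application.

```python
DEFAULT_WEIGHTS = (0.0, 0.0, 0.1, 0.9)  # [P, R, mAP@0.5, mAP@0.5:0.95]
RECALL_WEIGHTS = (0.0, 0.2, 0.1, 0.7)   # hypothetical re-weighting that rewards recall

def fitness(metrics, weights=DEFAULT_WEIGHTS):
    """Weighted sum of validation metrics used to rank hyperparameter sets."""
    return sum(m * w for m, w in zip(metrics, weights))

# Hypothetical validation metrics for one training run.
run = (0.92, 0.75, 0.85, 0.60)
print(round(fitness(run), 4))                  # default weighting
print(round(fitness(run, RECALL_WEIGHTS), 4))  # recall-oriented weighting
```

Keeping the weights summing to 1 makes fitness values comparable across weightings, though the ranking of candidates is what drives the evolution.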

Evolution Implementation

- Initial Population: Start with the default hyperparameters and generate variations by mutating them.

- Multi-GPU Parallelization: Accelerate evolution by running separate evolution processes on several GPUs in parallel.

- Monitoring: Track progress through the automatically generated evolve.csv and visualization plots [33].

- Termination Criteria: Run for a minimum of 300 generations or until fitness plateaus for 50 consecutive generations.
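The initial-population step above can be illustrated with a simplified mutation operator. This is a sketch, not the YOLOv5 implementation: it uses the 80% mutation probability quoted earlier, a Gaussian multiplicative factor with standard deviation 0.2 (variance 0.04), and clips each value to bounds taken from the search-range table. The factor limits of 0.3 and 3.0 and the four-parameter subset are assumptions.

```python
import random

BOUNDS = {
    "lr0": (1e-5, 1e-1),
    "momentum": (0.6, 0.98),
    "hsv_v": (0.0, 0.9),
    "scale": (0.0, 0.9),
}

def mutate(parent, bounds=BOUNDS, prob=0.8, sigma=0.2, rng=None):
    """Return a mutated copy of a hyperparameter set, clipped to the search bounds."""
    if rng is None:
        rng = random.Random()
    child = {}
    for name, value in parent.items():
        factor = 1.0
        if rng.random() < prob:  # mutate this parameter with probability `prob`
            factor = min(max(1.0 + rng.gauss(0.0, 1.0) * sigma, 0.3), 3.0)
        low, high = bounds[name]
        child[name] = min(max(value * factor, low), high)
    return child

parent = {"lr0": 0.01, "momentum": 0.937, "hsv_v": 0.4, "scale": 0.5}
population = [mutate(parent, rng=random.Random(seed)) for seed in range(4)]
for child in population:
    print({k: round(v, 5) for k, v in child.items()})
```

Because the mutation is multiplicative, a parameter whose default is 0.0 (such as degrees) stays at zero unless it is given a nonzero starting value.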

Validation and Application

- Cross-Validation: Apply the best-found parameters to multiple training runs with different random seeds to ensure consistency.

- Full Training: Use the optimized hyperparameters for extended training on your complete dataset.

- Ablation Studies: Systematically test the contribution of key evolved parameters by reverting them to defaults while keeping others optimized.
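The cross-validation and full-training steps above can be sketched together: one full training command per random seed using the evolved hyperparameter file, followed by a mean and standard deviation over the resulting scores. The seed values, file names, and the mAP numbers in the example are placeholders, not measured results.

```python
import statistics

def final_training_commands(seeds, hyp="runs/evolve/hyp_evolved.yaml",
                            data="egg_data.yaml", weights="yolov5n.pt", epochs=300):
    """One train.py call per seed, all using the evolved hyperparameters."""
    return [
        ["python", "train.py", "--data", data, "--weights", weights,
         "--hyp", hyp, "--epochs", str(epochs), "--seed", str(seed)]
        for seed in seeds
    ]

def summarize(map_scores):
    """Mean and sample standard deviation of mAP across seeds."""
    return statistics.mean(map_scores), statistics.stdev(map_scores)

commands = final_training_commands([0, 1, 2])
print(len(commands), "runs planned")

# Placeholder scores standing in for the mAP of each finished run.
mean_map, std_map = summarize([0.80, 0.82, 0.81])
print(f"mAP = {mean_map:.3f} +/- {std_map:.3f}")
```

A small spread across seeds suggests the evolved hyperparameters are reliably good rather than a lucky draw; the same summary can be reused for each ablation variant.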

The Scientist's Toolkit: Research Reagent Solutions

| Component | Function in Research | Implementation Example |
|---|---|---|
| Genetic Algorithm Core | Drives the evolution process by selecting, crossing over, and mutating hyperparameter sets | Custom implementation using tournament selection and Gaussian mutation [43] |
| Fitness Function | Quantifies performance to guide selection | Weighted combination of mAP metrics: w = [0.0, 0.0, 0.1, 0.9] for [P, R, mAP@0.5, mAP@0.5:0.95] [33] |
| Parameter Logging | Tracks evolution progress and results | Automatic generation of evolve.csv with fitness scores and hyperparameters [33] |
| Visualization Tools | Provides insights into evolution dynamics | utils.plots.plot_evolve() generating subplots for each hyperparameter [41] |
| Distributed Training Framework | Enables parallel evolution across multiple GPUs | Bash scripts with staggered GPU launches to maximize resource utilization [33] |
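
The staggered multi-GPU launch listed in the table can be sketched in Python as a list of per-GPU command lines with increasing start delays. This is an illustration under stated assumptions: the helper name build_evolve_launches, the dataset file eggs.yaml, the 10-epoch setting, and the 30-second stagger are all hypothetical choices, and the flags shown should be confirmed with python train.py --help for your YOLOv5 version.

```python
import shlex
from typing import List, Sequence, Tuple


def build_evolve_launches(
    gpu_ids: Sequence[int],
    data: str = "eggs.yaml",      # hypothetical dataset config
    weights: str = "yolov5n.pt",
    epochs: int = 10,             # assumed short runs per generation
    generations: int = 300,
    stagger_seconds: int = 30,    # assumed delay between launches
) -> List[Tuple[int, List[str]]]:
    """Return (start_delay_seconds, argv) pairs, one evolution run per GPU."""
    launches = []
    for position, gpu in enumerate(gpu_ids):
        argv = [
            "python", "train.py",
            "--data", data,
            "--weights", weights,
            "--epochs", str(epochs),
            "--device", str(gpu),
            "--evolve", str(generations),
        ]
        launches.append((position * stagger_seconds, argv))
    return launches


if __name__ == "__main__":
    # Print the commands instead of running them, so they can be reviewed first.
    for delay, argv in build_evolve_launches([0, 1, 2, 3]):
        print(f"after {delay:>3d}s: {shlex.join(argv)}")
```

Printing the commands rather than executing them keeps the sketch side-effect free; the printed lines can be pasted into a shell script or passed to a job scheduler.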

FAQ: Hyperparameter Troubleshooting for YOLOv5n in Egg Detection

Why do my model's performance metrics crash when I continue training from a previous checkpoint?

A sudden drop in performance, such as mAP falling significantly, often occurs due to an inappropriately high learning rate when resuming training. The model has already converged to a good state in the previous training, and a large learning rate can cause it to overshoot the optimal point in the loss landscape [29]. To fix this, reduce the initial learning rate (lr0) by an order of magnitude (e.g., from 0.01 to 0.001) for the continued training run.

Which hyperparameters should I prioritize for tuning to improve detection of small objects like eggs?

For detecting small objects such as eggs, focus on these key hyperparameters [38] [47]:

- Learning Rate (lr0 and lrf): Crucial for stable convergence and finding a good minimum.

- Loss Gains (box and cls): Directly affect the emphasis on bounding box accuracy and class prediction.

- Augmentations (degrees, translate, scale): Help the model generalize to objects at various sizes, angles, and positions.

What is a recommended methodology for establishing a baseline before hyperparameter tuning?

Start with the default hyperparameters provided by YOLOv5, which are optimized for the COCO dataset [33] [48]. Train your YOLOv5n model on your egg dataset using these defaults. The resulting performance metrics establish your baseline. Subsequently, use hyperparameter evolution to methodically improve upon this baseline [33].

Practical Hyperparameter Ranges for YOLOv5n

The table below summarizes the default values and practical search spaces for key hyperparameters, synthesized from official documentation and research. These ranges are a solid starting point for optimizing a YOLOv5n model for egg detection tasks [33] [38].

Table 1: Key Hyperparameter Defaults and Search Ranges

| Hyperparameter | Description | Default Value (YOLOv5) [33] | Practical Search Space [38] |
|---|---|---|---|
| lr0 | Initial learning rate (SGD/Adam). | 0.01 | (1e-5, 1e-1) |
| lrf | Final learning rate factor (lr0 * lrf). | 0.01 | (0.01, 1.0) |
| momentum | SGD momentum or Adam beta1. | 0.937 | (0.6, 0.98) |
| weight_decay | L2 regularization factor to prevent overfitting. | 0.0005 | (0.0, 0.001) |
| warmup_epochs | Epochs for linear learning rate warmup. | 3.0 | (0.0, 5.0) |
| box | Loss gain for bounding box regression. | 0.05 | (0.02, 0.2) |
| cls | Loss gain for classification. | 0.5 | (0.2, 4.0) |
| hsv_h | Image HSV-Hue augmentation (fraction). | 0.015 | (0.0, 0.1) |
| hsv_s | Image HSV-Saturation augmentation (fraction). | 0.7 | (0.0, 0.9) |
| hsv_v | Image HSV-Value (brightness) augmentation (fraction). | 0.4 | (0.0, 0.9) |
| translate | Image translation (+/- fraction). | 0.1 | (0.0, 0.9) |
| scale | Image scale (+/- gain). | 0.5 | (0.0, 0.9) |
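
The table can also be kept in code so that a candidate configuration is checked before a long training run starts. The sketch below copies the defaults and ranges from Table 1; the helper names out_of_range and clip_to_space are hypothetical and are not part of any YOLO library.

```python
from typing import Dict, Tuple

# (low, high) search ranges copied from Table 1.
SEARCH_SPACE: Dict[str, Tuple[float, float]] = {
    "lr0": (1e-5, 1e-1), "lrf": (0.01, 1.0), "momentum": (0.6, 0.98),
    "weight_decay": (0.0, 0.001), "warmup_epochs": (0.0, 5.0),
    "box": (0.02, 0.2), "cls": (0.2, 4.0),
    "hsv_h": (0.0, 0.1), "hsv_s": (0.0, 0.9), "hsv_v": (0.0, 0.9),
    "translate": (0.0, 0.9), "scale": (0.0, 0.9),
}

# Default values copied from Table 1.
DEFAULTS: Dict[str, float] = {
    "lr0": 0.01, "lrf": 0.01, "momentum": 0.937, "weight_decay": 0.0005,
    "warmup_epochs": 3.0, "box": 0.05, "cls": 0.5,
    "hsv_h": 0.015, "hsv_s": 0.7, "hsv_v": 0.4,
    "translate": 0.1, "scale": 0.5,
}


def out_of_range(hyp: Dict[str, float]) -> Dict[str, float]:
    """Return the entries of `hyp` that fall outside their search range."""
    return {
        key: value for key, value in hyp.items()
        if key in SEARCH_SPACE
        and not SEARCH_SPACE[key][0] <= value <= SEARCH_SPACE[key][1]
    }


def clip_to_space(hyp: Dict[str, float]) -> Dict[str, float]:
    """Return a copy of `hyp` with every known key clipped into its range."""
    clipped = dict(hyp)
    for key, value in hyp.items():
        if key in SEARCH_SPACE:
            low, high = SEARCH_SPACE[key]
            clipped[key] = min(max(value, low), high)
    return clipped


if __name__ == "__main__":
    print(out_of_range(DEFAULTS))                 # defaults lie inside the ranges
    print(clip_to_space({"lr0": 0.5, "cls": 0.1}))
```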

Experimental Protocol: Hyperparameter Tuning via Genetic Evolution

This methodology details the process of using genetic evolution for hyperparameter optimization, as implemented in Ultralytics YOLOv5 [33].

The diagram below illustrates the iterative cycle of hyperparameter evolution.

Step-by-Step Procedure

- Initialization: Begin your experiment using the default hyperparameters defined in the hyp.scratch-low.yaml file [33].

- Base Training: Execute an initial training run with train.py to establish a baseline fitness.

- Evolution: Initiate the hyperparameter evolution process by appending the --evolve flag to the training command. For a substantial search, specify a high number of generations (e.g., 300-1000).

- Genetic Operations: The algorithm creates new generations of hyperparameters primarily through mutation. It applies small, random changes with an 80% probability and a 0.04 variance to the best-performing hyperparameters from previous generations [33]. Crossover is not currently used in this implementation.

- Fitness Evaluation: The default fitness function in YOLOv5 is a weighted combination of metrics: mAP@0.5 contributes 10% of the weight, and mAP@0.5:0.95 contributes the remaining 90%. Precision and recall are not directly part of the fitness calculation [33].

- Completion and Application: After all generations are complete, the optimized hyperparameters are saved in a YAML file (e.g., runs/evolve/hyp_evolved.yaml). Use this file for all subsequent training on your specific egg dataset.
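
The mutation step described above can be illustrated with a simplified sketch. It is not the YOLOv5 implementation: it assumes a multiplicative Gaussian perturbation in which each hyperparameter is changed with probability 0.8 and the noise has standard deviation 0.2 (variance 0.04). The function name mutate, the clipping of the factor to [0.3, 3.0], the bounds, and the retry limit are assumptions of this example.

```python
import random
from typing import Dict, Optional, Tuple


def mutate(parent: Dict[str, float],
           bounds: Dict[str, Tuple[float, float]],
           prob: float = 0.8,
           sigma: float = 0.2,          # sigma**2 == 0.04 variance
           rng: Optional[random.Random] = None,
           max_attempts: int = 100) -> Dict[str, float]:
    """Return a mutated copy of `parent`, retrying until something changes."""
    rng = rng or random.Random()
    child = dict(parent)
    for _ in range(max_attempts):
        for key, value in parent.items():
            if rng.random() < prob:
                # Multiplicative noise around 1.0, clipped to avoid extreme jumps.
                factor = min(max(1.0 + rng.gauss(0.0, sigma), 0.3), 3.0)
                low, high = bounds[key]
                child[key] = min(max(value * factor, low), high)
            else:
                child[key] = value
        if child != parent:
            break  # at least one hyperparameter was changed
    return child


if __name__ == "__main__":
    parent = {"lr0": 0.01, "momentum": 0.937, "box": 0.05}
    bounds = {"lr0": (1e-5, 1e-1), "momentum": (0.6, 0.98), "box": (0.02, 0.2)}
    print(mutate(parent, bounds, rng=random.Random(0)))
```

Because the noise is multiplicative, a hyperparameter that is exactly 0.0 stays at 0.0 under this scheme, which is why the sketch caps the number of retries instead of looping until a change occurs.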

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials and Software for YOLO-based Egg Detection Research

| Item | Function / Description | Example Use Case |
|---|---|---|
| YOLOv5n Model | A compact object detection model ideal for rapid prototyping and deployment on hardware with limited computational resources [48]. | Baseline model for detecting small parasite eggs in microscopic images [49]. |
| Ultralytics YOLO | The Python library that provides the framework for training, validating, and exporting YOLO models. | Core codebase for implementing hyperparameter tuning and running experiments [33] [38]. |
| Roboflow | A web-based tool for building, preprocessing, annotating, and versioning computer vision datasets [50]. | Preparing and augmenting a custom dataset of tree or poultry eggs [50]. |
| Genetic Algorithm | An optimization method inspired by natural selection, used in YOLOv5 for hyperparameter evolution and anchor box selection ("autoanchor") [33] [48]. | Automatically searching for the best hyperparameter values over hundreds of generations [33]. |
| Microscopic Imaging System | Equipment for capturing high-resolution images of small biological specimens. | Acquiring source images for pinworm egg detection in medical parasitology [49]. |
| TensorBoard / Weights & Biases | Logging tools for tracking experiment metrics, losses, and predictions during model training [48]. | Visualizing the change in mAP across epochs to diagnose convergence issues. |

This technical support guide provides detailed troubleshooting and methodological support for researchers optimizing data augmentation parameters in YOLOv5n for egg detection tasks. Data augmentation is a critical technique for artificially expanding training datasets by applying various transformations to existing images, which improves model robustness, reduces overfitting, and enhances generalization to real-world scenarios [32]. For specialized applications like egg detection in agricultural and medical research, fine-tuning these parameters is essential for achieving high-precision models capable of functioning in diverse and challenging environments [3] [39].

The following sections offer structured guidance on parameter optimization, experimental protocols, and solutions to common challenges encountered during model training.

Core Augmentation Parameters and Quantitative Values

For researchers working with egg images, specific augmentation parameters require careful tuning to balance variability and the preservation of critical morphological features. The table below summarizes key parameters, their value ranges, and application notes based on established practices in YOLO training and relevant research.

Table 1: Core Data Augmentation Parameters for Egg Image Analysis

| Parameter | Description | Default Value | Typical Range | Research Considerations for Egg Images |
|---|---|---|---|---|
| HSV-Hue (hsv_h) | Shifts image colors while preserving relationships [32]. | 0.015 [32] [51] | 0.0 - 0.5 [32] | Use conservatively; drastic hue shifts may render egg color diagnostically unreliable [39]. |
| HSV-Saturation (hsv_s) | Modifies the intensity of colors [32]. | 0.7 [32] [51] | 0.0 - 1.0 [32] | Helps model adapt to different lighting conditions in fields or labs [32] [39]. |
| HSV-Value (hsv_v) | Changes the brightness of the image [32]. | 0.4 [32] [51] | 0.0 - 1.0 [32] | Critical for simulating varied lighting in paddy fields or under microscopes [3] [39]. |
| Translation (translate) | Shifts images horizontally and vertically by a fraction of image size [32]. | 0.1 [32] | 0.0 - 1.0 [32] | Teaches model to recognize partially visible eggs [32]. |
| Scaling (scale) | Resizes images by a random factor [32]. | 0.5 [32] | ≥ 0.0 [32] | Essential for detecting eggs at various distances and sizes; over-scaling can lose small targets [35] [39]. |

Troubleshooting Guides and FAQs

Frequently Asked Questions

Q1: My validation metrics (mAP, precision) are worse with data augmentation compared to without it. Why does this happen, and how can I resolve it?

This is a common issue that often points to an overly aggressive augmentation policy that is distorting the essential features of the eggs.

- Potential Cause 1: Excessive Color Distortion. High values for hsv_h, hsv_s, or hsv_v can alter the image to a point where eggs no longer resemble their real-world counterparts. For example, the color of Pomacea canaliculata eggs is a key identifier, and excessive hue changes can destroy this information [39].

- Solution: Systematically reduce the HSV augmentation values. Start by setting them to zero to establish a baseline, then gradually increase them (e.g., hsv_h=0.01, hsv_s=0.3, hsv_v=0.2) while monitoring validation performance [32] [52].

- Potential Cause 2: Extreme Geometric Transformations. High translate or scale values might crop out eggs entirely or shrink them to a size where they become undetectable, effectively adding noisy, erroneous samples to your training set.

- Solution: Reduce the translate value to ensure eggs remain largely within the frame. For scale, avoid extreme zoom-out values that make eggs too small. Monitor the train_batch*.jpg images generated at the start of training to visually confirm your augmentation policy is producing plausible variants [35] [52].
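
The "start at zero, then increase gradually" advice for HSV augmentation can be sketched as a short schedule of configurations. The helper name hsv_ramp and the linear spacing are assumptions of this example; the target values are the ones given as an example above, and each stage would be trained and validated separately.

```python
from typing import Dict, List


def hsv_ramp(target: Dict[str, float], steps: int = 4) -> List[Dict[str, float]]:
    """Return steps + 1 configurations rising linearly from zero to `target`."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return [
        {key: round(value * stage / steps, 6) for key, value in target.items()}
        for stage in range(steps + 1)
    ]


if __name__ == "__main__":
    target = {"hsv_h": 0.01, "hsv_s": 0.3, "hsv_v": 0.2}
    for stage, config in enumerate(hsv_ramp(target, steps=4)):
        print(stage, config)
```

Stage 0 is the no-color-augmentation baseline; if validation mAP drops at a later stage, the previous stage is a reasonable setting to keep.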

Q2: I am detecting parasite eggs in complex backgrounds. Which augmentations are most critical for improving model robustness?

The key is to prepare the model for the "feature contamination" and occlusions found in real-world environments like stool samples or paddy fields [39].

- Critical Augmentations:

  - HSV Variations (hsv_s, hsv_v): These are paramount for simulating changes in staining intensity, lighting conditions, and microscope settings [3] [32]. They help the model learn to focus on the egg's shape and texture rather than relying on a specific color profile.

  - Translation (translate): This helps the model learn to detect eggs that are not perfectly centered and are often surrounded by debris [32].

  - Mosaic Augmentation: This technique, which combines four training images into one, is highly beneficial. It forces the model to learn to identify eggs in a dense, complex context and with a wider variety of backgrounds, significantly improving robustness [32] [51].

Q3: My model is overfitting to the training data despite using augmentation. What should I adjust?

Overfitting occurs when the model learns the training data too well, including its noise, and fails to generalize to new data.

- Strategy 1: Increase Augmentation Strength. Counter-intuitively, you may need more augmentation. Gradually increase the range of your transformations, particularly translate and scale, to introduce more variability. You can also enable other techniques like mixup or cutmix, which are effective regularizers [32] [51].

- Strategy 2: Review Your Dataset. The root cause may be an insufficient dataset. Ensure you have at least 1,500 images per class and a diverse set of images representative of different conditions [35]. Verify that your labels are accurate and consistent, as poor labeling is a major source of poor model performance [35].

- Strategy 3: Tune Hyperparameters. If augmentation alone is not enough, consider adjusting training hyperparameters. Reducing the loss component gain for objectness (hyp['obj']) can help reduce overfitting. Additionally, training for more epochs with a cosine learning rate scheduler (--cos-lr) can help the model converge more effectively [35].

Experimental Protocol for Parameter Optimization

For researchers conducting systematic experiments on augmentation parameters, the following workflow provides a reproducible methodology.