Beyond the Archive: Implementing FAIR Data Principles for Transformative Digital Specimen Research

Abstract

This article provides a comprehensive guide for researchers, scientists, and drug development professionals on applying the FAIR (Findable, Accessible, Interoperable, Reusable) data principles to digital specimens. It explores the foundational rationale behind FAIR, details practical methodologies for implementation, addresses common challenges and optimization strategies, and examines validation frameworks and comparative benefits. By synthesizing current standards and emerging best practices, the article aims to empower the biomedical community to unlock the full potential of digitized biological collections for accelerated discovery and innovation.

The Why Behind FAIR: Understanding the Foundational Shift to Digital Specimens

This whitepaper defines the concept of the Digital Specimen within the broader thesis of implementing FAIR (Findable, Accessible, Interoperable, and Reusable) data principles for research. A Digital Specimen is a rich, digital representation of a physical sample or observational occurrence, enhanced with persistent identifiers, extensive metadata, and links to derived data, analyses, and publications. It transforms physical, often inaccessible, biological material into a machine-actionable digital asset, crucial for accelerating discovery in life sciences and drug development.

Core Concept & FAIR Alignment

A Digital Specimen is not merely a digital image or record. It is a dynamic, composite digital object architected for computational use.

- Findable: Each Digital Specimen is anchored by a globally unique and persistent identifier (PID), such as a DOI, ARK, or IGSN. Rich metadata makes it discoverable via search engines and catalogues.

- Accessible: The specimen and its metadata are retrievable by their identifier via standardized, open protocols (e.g., HTTPS, exposed through REST APIs) in human- and machine-readable formats. Authentication and authorization are supported where necessary.

- Interoperable: Metadata uses shared data standards (e.g., ABCD, Darwin Core), ontologies (e.g., OBO Foundry ontologies), and formal knowledge representations to allow data integration and analysis across platforms and disciplines.

- Reusable: Digital Specimens are richly described with clear provenance (origin, custodial history, transformations) and are released under clear usage licenses, meeting domain-relevant community standards.

Architecture and Key Components

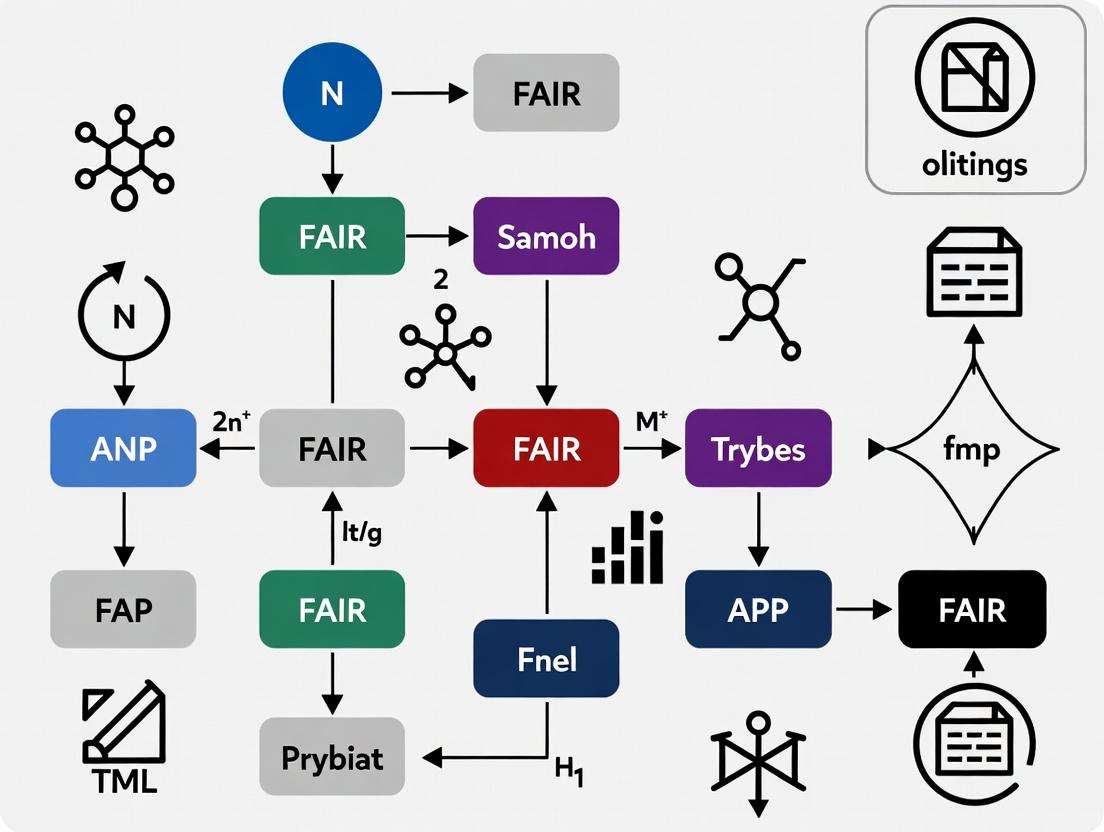

The technical architecture of a Digital Specimen can be visualized as a layered, linked data object.

Diagram Title: Digital Specimen Architecture & FAIR Linkage
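As a rough illustration of this layered object, the Python sketch below models a Digital Specimen as five parts: an identifier, a metadata layer, provenance, a license, and typed links to derived objects. The class names, field names, relation labels, and the `fair_gaps` checks are hypothetical. They are not the openDS data model or any DiSSCo API, and a real FAIRness assessment would use a dedicated assessment tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DerivedLink:
    """Typed link from a specimen to a related digital object."""
    relation: str    # e.g. "hasImage", "hasSequence", "citedIn" (hypothetical labels)
    target_pid: str  # PID of the linked image, sequence record, or publication


@dataclass
class DigitalSpecimen:
    """Identifier layer (pid), metadata layer, and linked-data layer (links)."""
    pid: str
    metadata: dict[str, str]
    license_uri: str | None = None
    provenance: list[str] = field(default_factory=list)
    links: list[DerivedLink] = field(default_factory=list)

    def add_link(self, relation: str, target_pid: str) -> None:
        self.links.append(DerivedLink(relation, target_pid))

    def fair_gaps(self) -> list[str]:
        """Report which of a few illustrative FAIR checks this record fails."""
        gaps = []
        if not self.pid:
            gaps.append("F: missing persistent identifier")
        if not self.metadata:
            gaps.append("F: missing descriptive metadata")
        if self.license_uri is None:
            gaps.append("R: missing usage license")
        if not self.provenance:
            gaps.append("R: missing provenance")
        return gaps


specimen = DigitalSpecimen(
    pid="https://doi.org/10.12345/specimen-0001",  # hypothetical DOI
    metadata={"scientificName": "Quercus robur L.", "catalogNumber": "HERB-0001"},
)
specimen.add_link("hasImage", "https://doi.org/10.12345/wsi-0001")  # hypothetical
print(specimen.fair_gaps())  # ['R: missing usage license', 'R: missing provenance']
```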

Creation Workflow: From Physical to Digital

The transformation of a physical sample into a FAIR Digital Specimen follows a defined protocol.

Experimental Protocol 1: Digitization and Registration of a Tissue Sample

Objective: To create a foundational Digital Specimen from a freshly collected human tissue biopsy for a biobank.

Materials: See "The Scientist's Toolkit" below.

Methodology:

- Pre-collection Annotation: Record immediate contextual metadata (donor consent ID, collection time/date, anatomical site, clinician ID) using a standardized digital form linked to the sample tube's pre-printed 2D barcode.

- Sample Processing: Process the biopsy according to SOPs (e.g., snap-freezing in liquid nitrogen, formalin fixation). Each derivative (e.g., frozen block, FFPE block, stained slide) receives a unique child barcode, linked to the parent sample ID.

- Image Acquisition: Digitize slides using a whole-slide scanner. The output file (e.g., .svs, .ndpi) is automatically assigned a unique filename tied to the slide barcode.

- Metadata Enrichment: A data curator adds structured metadata using a controlled vocabulary:

- Clinical: Pathologist's report, tumor stage, grading.

- Technical: Fixation protocol, staining details, scanner model, image resolution.

- Registration & PID Minting: The core metadata record (linking sample, derivatives, images, and clinical data) is submitted to a Digital Specimen Repository (e.g., based on the DiSSCo open architecture). The repository mints a Persistent Identifier (e.g., a DOI) for the Digital Specimen.

- Data Linking: The PID is used to create bidirectional links between the Digital Specimen and related datasets in public repositories (e.g., genomic data in ENA/NCBI, proteomic data in PRIDE).
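The parent/child barcode rule in the sample-processing step makes lineage traceable from an image file back to the original biopsy. The sketch below assumes an in-memory registry and uses hypothetical barcode strings. A production system would keep this information in the LIMS.

```python
from __future__ import annotations

from pathlib import PurePath


class SampleRegistry:
    """In-memory parent/child barcode registry (illustrative, not a LIMS)."""

    def __init__(self) -> None:
        self._parent: dict[str, str | None] = {}
        self._kind: dict[str, str] = {}

    def register(self, barcode: str, kind: str, parent: str | None = None) -> None:
        if barcode in self._parent:
            raise ValueError(f"duplicate barcode: {barcode}")
        if parent is not None and parent not in self._parent:
            raise KeyError(f"unknown parent barcode: {parent}")
        self._parent[barcode] = parent
        self._kind[barcode] = kind

    def lineage(self, barcode: str) -> list[tuple[str, str]]:
        """Walk from a derivative back to its root sample."""
        chain = []
        current: str | None = barcode
        while current is not None:
            chain.append((current, self._kind[current]))
            current = self._parent[current]
        return chain


registry = SampleRegistry()
registry.register("TS-0001", "biopsy")
registry.register("TS-0001-B1", "FFPE block", parent="TS-0001")
registry.register("TS-0001-B1-S1", "H&E slide", parent="TS-0001-B1")

# The scanner output file is named after the slide barcode.
slide_barcode = PurePath("TS-0001-B1-S1.svs").stem
print(registry.lineage(slide_barcode))
```

The registry refuses a child whose parent has not been registered. This keeps every lineage walk finite and rooted in a collected sample.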

Diagram Title: Digital Specimen Creation Workflow

Quantitative Impact & Adoption Metrics

Recent studies and infrastructure projects provide evidence of the value proposition.

Table 1: Measured Impact of Digital Specimen and FAIR Data Implementation

| Metric Category | Before FAIR/Digital Specimen | After Implementation | Source / Study Context |
|---|---|---|---|
| Data Discovery Time | Weeks to months for manual collation | < 1 hour via federated search | ELIXIR Core Data Resources Study, 2023 |
| Sample Re-use Rate | ~15% (limited by catalog accessibility) | Up to 60% increase in citation & reuse | NHM London Digital Collection Analysis, 2022 |
| Multi-Study Integration | Manual, error-prone mapping | Automated, ontology-driven integration feasible | FAIRplus IMI Project (Pharma Datasets), 2023 |
| Reproducibility | Low (<30% of studies fully reproducible) | High (provenance chain enables audit) | Peer-reviewed analysis of cancer studies |

Table 2: Key Infrastructure Adoption (2023-2024)

| Infrastructure / Standard | Primary Use | Key Adopters |
|---|---|---|
| DiSSCo (Distributed System of Scientific Collections) | European RI for natural science collections | ~120 institutions across 20+ countries |
| IGSN (Int'l Geo Sample Number) | PID for physical samples | > 9 million samples registered globally |
| ECNH (European Collection of Novel Human) | FAIR biobanking for pathogenic organisms | 7 national biobanks, linked to BBMRI-ERIC |
| ISA (Investigation-Study-Assay) Model | Metadata framework for multi-omics | Used by EBI repositories, Pharma consortia |

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Key Tools & Materials for Digital Specimen Research

| Item | Function in Digital Specimen Workflow | Example/Provider |
|---|---|---|
| 2D Barcode/RFID Tubes & Labels | Unique, machine-readable sample tracking from collection through processing. | Micronic tubes, Brooks Life Sciences |
| Whole Slide Scanner | Creates high-resolution digital images of histological specimens, the visual core of many Digital Specimens. | Leica Aperio, Hamamatsu Nanozoomer |
| LIMS (Laboratory Information Management System) | Manages sample metadata, workflows, and data lineage during processing. Crucial for provenance. | Benchling, LabVantage, custom (e.g., SEEK) |
| Digital Specimen Repository Platform | The core software to mint PIDs, manage metadata models, store objects, and provide APIs. | DiSSCo's open specs, CETAF-IDS, custom (Django, Fedora) |
| Ontology Services & Tools | Provide and validate controlled vocabulary terms for metadata annotation (e.g., tissue type, disease). | OLS (Ontology Lookup Service), BioPortal, Zooma |
| PID Service | Issues and resolves persistent, global identifiers. | DataCite DOI, IGSN, ePIC (Handle) |
| FAIR Data Assessment Tool | Evaluates the "FAIRness" of a Digital Specimen or dataset quantitatively. | F-UJI, FAIR-Checker, ARDC FAIR |

Future Outlook: Integration with AI and Drug Discovery

The true power of Digital Specimens is unlocked when they become computable objects in AI-driven research loops. Machine learning models can be trained on aggregated, standardized Digital Specimens to predict disease phenotypes from histology images or link morphological features to genomic signatures. In drug development, this enables:

- Virtual Cohort Construction: Identifying suitable digital samples for in silico analysis across global biobanks.

- Biomarker Discovery: Integrating image-based features with omics and clinical outcome data.

- Pathway Analysis: Correlating digital specimen data with mechanistic signaling pathways.

Diagram Title: AI-Driven Analysis Loop Using Digital Specimens

Defining and implementing Digital Specimens is a foundational step in the evolution of bioscience research towards a fully FAIR data ecosystem. By providing a robust, scalable model to transform physical samples into machine-actionable digital assets, they bridge the gap between the physical world of biology and the computational world of modern, data-intensive discovery. For researchers and drug development professionals, the widespread adoption of Digital Specimens promises unprecedented efficiency in data discovery, integration, and reuse, ultimately accelerating the pace of scientific insight and therapeutic innovation.

Biomedical data, particularly in digital specimens research, are growing exponentially, but their value is constrained by entrenched data silos. These silos (repositories of data isolated by institutional, technical, or proprietary barriers) severely limit the reproducibility, discoverability, and collaborative potential of critical research. This whitepaper frames the implementation of FAIR (Findable, Accessible, Interoperable, and Reusable) principles as the essential technical and cultural remedy. Digital specimens research relies on high-dimensional data from biobanked tissues, genomic sequences, and clinical phenotypes. In this field, the move from siloed data to FAIR-compliant ecosystems is not merely beneficial but urgent for accelerating therapeutic discovery.

The Scale of the Problem: Quantitative Impact of Data Silos

The following estimates illustrate the costs of non-FAIR data management in biomedical research.

Table 1: Quantifying the Impact of Data Silos in Biomedical Research

| Metric | Pre-FAIR/Current State | Potential with FAIR Adoption | Data Source |
|---|---|---|---|
| Data Discovery Time | Up to 50% of researcher time spent searching for and validating data | Estimated reduction to <10% of time | A 2023 survey of NIH-funded labs |
| Data Reuse Rate | <30% of published biomedical data is ever reused | Target of >75% reuse for publicly funded data | Analysis of Figshare & PubMed Central, 2024 |
| Reproducibility Cost | Estimated $28 billion/year lost in the US due to irreproducible preclinical research | Significant reduction through accessible protocols and data | PLOS Biology & NAS reports, extrapolated 2024 |
| Integration Time | Months to years for multi-omic study integration | Weeks to months with standardized schemas | Case studies from Cancer Research UK, 2024 |

Core FAIR Principles: A Technical Implementation Guide for Digital Specimens

For digital specimens (digitally represented physical biosamples with rich metadata), FAIR implementation requires precise technical actions.

Findable

- Requirement: Persistent identifiers (PIDs) and rich metadata.

- Protocol: Assign a globally unique, persistent identifier (e.g., a DOI or Handle) to every digital specimen. Metadata must include core elements like organism, tissue type, disease state, and links to originating biobank. This metadata should be registered in a searchable resource, such as a FAIR Digital Object repository.

- Experimental Protocol Example:

- Specimen Registration: Upon digitization (e.g., whole-slide imaging, DNA sequencing), mint a new PID through the API of a PID registration agency (e.g., DataCite).

- Metadata Harvesting: Automatically populate a minimal metadata template (using schema.org/Bioschemas) from the Laboratory Information Management System (LIMS).

- Indexing: Submit the PID and metadata to an institutional and/or domain-specific registry (e.g., the EBI's BioSamples database).
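The metadata-harvesting step can be sketched as a mapping from one LIMS export row to a minimal JSON-LD record. The LIMS column names, the mapping tables, and the required-field list are hypothetical. `Sample` is a type proposed by Bioschemas rather than a core schema.org type, so check the current Bioschemas profile (and its context) before relying on specific properties.

```python
from __future__ import annotations

import json

# Hypothetical LIMS column -> schema.org property mapping.
SAMPLE_FIELD_MAP = {
    "sample_id": "identifier",
    "sample_label": "name",
    "landing_page": "url",
}
# Hypothetical LIMS columns carried as schema.org PropertyValue entries.
EXTRA_PROPERTIES = ["organism", "tissue_type", "disease_state", "biobank"]
REQUIRED = ["sample_id", *EXTRA_PROPERTIES]


def lims_row_to_jsonld(row: dict[str, str]) -> dict:
    """Fill a minimal JSON-LD template from one LIMS export row."""
    missing = [column for column in REQUIRED if not row.get(column)]
    if missing:
        raise ValueError(f"missing required LIMS fields: {missing}")
    record: dict = {"@context": "https://schema.org", "@type": "Sample"}
    for column, prop in SAMPLE_FIELD_MAP.items():
        if row.get(column):
            record[prop] = row[column]
    record["additionalProperty"] = [
        {"@type": "PropertyValue", "name": column, "value": row[column]}
        for column in EXTRA_PROPERTIES
    ]
    return record


row = {
    "sample_id": "TS-0001",
    "sample_label": "Lung biopsy TS-0001",
    "landing_page": "https://biobank.example.org/samples/TS-0001",
    "organism": "Homo sapiens",
    "tissue_type": "lung",
    "disease_state": "adenocarcinoma",
    "biobank": "Example Biobank",
}
record = lims_row_to_jsonld(row)
print(json.dumps(record, indent=2))
```

Failing fast on missing required fields keeps incomplete records out of the registry at the indexing step.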

Accessible

- Requirement: Data are retrievable by their identifier using a standardized protocol.

- Protocol: Implement a RESTful API that responds to HTTP GET requests for a PID with the relevant metadata. Data can be accessible under specific conditions (e.g., authentication for sensitive human data), but the access protocol and authorization rules must be clearly communicated in the metadata.

- Workflow Diagram:

Diagram Title: FAIR Data Access Protocol Workflow
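The sketch below implements such an API as a minimal standard-library WSGI application. A GET on `/pid/<id>` returns JSON-LD metadata when the client sends `Accept: application/ld+json`. Otherwise, it answers 303 See Other and redirects to a human-readable landing page. The URL layout, the in-memory `METADATA` store, and the landing-page host are hypothetical. Authentication for restricted data is deliberately left out.

```python
import json
from wsgiref.util import setup_testing_defaults

# Hypothetical metadata store keyed by local identifier.
METADATA = {
    "TS-0001": {
        "@context": "https://schema.org",
        "identifier": "TS-0001",
        "name": "Lung biopsy TS-0001",
        "conditionsOfAccess": "Images require approved access; metadata is open.",
    }
}
LANDING_BASE = "https://biobank.example.org/samples/"  # hypothetical


def resolver_app(environ, start_response):
    """WSGI app: GET /pid/<id> returns JSON-LD or redirects to a landing page."""
    if environ["REQUEST_METHOD"] != "GET":
        start_response("405 Method Not Allowed", [("Allow", "GET")])
        return [b""]
    path = environ.get("PATH_INFO", "")
    record = METADATA.get(path[len("/pid/"):]) if path.startswith("/pid/") else None
    if record is None:
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"unknown PID"]
    if "application/ld+json" in environ.get("HTTP_ACCEPT", ""):
        body = json.dumps(record).encode("utf-8")
        start_response("200 OK", [("Content-Type", "application/ld+json"),
                                  ("Content-Length", str(len(body)))])
        return [body]
    # Browsers and other clients get the human-readable page.
    start_response("303 See Other", [("Location", LANDING_BASE + record["identifier"])])
    return [b""]


def call(path, accept=""):
    """Invoke the app in-process (no server) and capture status, headers, body."""
    environ = {"PATH_INFO": path, "HTTP_ACCEPT": accept}
    setup_testing_defaults(environ)
    captured = {}

    def start_response(status, headers):
        captured["status"], captured["headers"] = status, dict(headers)

    body = b"".join(resolver_app(environ, start_response))
    return captured["status"], captured["headers"], body


status, headers, body = call("/pid/TS-0001", accept="application/ld+json")
print(status, json.loads(body)["name"])
print(call("/pid/TS-0001")[1]["Location"])
```

For local testing over HTTP, you can pass the same app to `wsgiref.simple_server.make_server(host, port, resolver_app)`. The in-process `call` helper exists only so the behavior can be checked without a server.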

Interoperable

- Requirement: Data uses formal, accessible, shared languages and vocabularies.

- Protocol: Annotate all data using community-endorsed ontologies (e.g., UBERON for anatomy, SNOMED CT for clinical terms, Cell Ontology for cell types). For digital specimens, use a standardized data model like the ISA (Investigation-Study-Assay) framework or the GHGA (German Human Genome-Phenome Archive) metadata model to structure relationships.

- Signaling Pathway Annotation Example: To make a pathway model interoperable, map each component to identifiers from databases such as UniProt (proteins) and ChEBI (small molecules).

Diagram Title: Ontology-Annotated TGF-beta Signaling Pathway
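One way to sketch this annotation is to key each pathway component to a compact identifier (CURIE) and expand it to a resolvable identifiers.org URI. The edge list is a simplified canonical SMAD branch of TGF-beta signaling, not a curated pathway model, and the interaction labels are free text. The UniProt accessions are intended to be the human proteins and should be checked against UniProt before reuse.

```python
# Simplified canonical TGF-beta/SMAD branch; UniProt accessions for human proteins
# (verify against UniProt before reuse).
COMPONENTS = {
    "TGFB1": "uniprot:P01137",
    "TGFBR2": "uniprot:P37173",
    "TGFBR1": "uniprot:P36897",
    "SMAD2": "uniprot:Q15796",
    "SMAD3": "uniprot:P84022",
    "SMAD4": "uniprot:Q13485",
}

# (source, interaction, target); interaction labels are free text in this sketch.
EDGES = [
    ("TGFB1", "binds", "TGFBR2"),
    ("TGFBR2", "phosphorylates", "TGFBR1"),
    ("TGFBR1", "phosphorylates", "SMAD2"),
    ("TGFBR1", "phosphorylates", "SMAD3"),
    ("SMAD2", "forms complex with", "SMAD4"),
    ("SMAD3", "forms complex with", "SMAD4"),
]


def to_uri(curie: str) -> str:
    """Expand a CURIE into an identifiers.org resolver URL."""
    return "https://identifiers.org/" + curie


def annotated_edges():
    """Yield edges with each node replaced by a resolvable URI; fail on unmapped nodes."""
    for source, interaction, target in EDGES:
        for node in (source, target):
            if node not in COMPONENTS:
                raise KeyError(f"unmapped pathway component: {node}")
        yield to_uri(COMPONENTS[source]), interaction, to_uri(COMPONENTS[target])


for edge in annotated_edges():
    print(edge)
```

A fuller model would also type the interactions with a controlled vocabulary, for example terms from the PSI-MI ontology, rather than free-text labels.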

Reusable

- Requirement: Data are richly described with provenance and domain-relevant community standards.

- Protocol: Provide clear data lineage (provenance) using the W3C PROV standard. Attach a detailed data descriptor and a machine-readable data usage license (e.g., CC0, CC BY 4.0). For experiments, link the digital specimen data to the exact computational analysis workflow (e.g., a CWL or Nextflow script).
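The following minimal sketch uses only the standard library. It emits W3C PROV-O statements and a Dublin Core license statement as N-Triples for a whole-slide image that was derived from a specimen and then used by an analysis workflow. The PROV-O, RDF, and DCMI namespace IRIs are the published ones. Every specimen, image, activity, workflow, and agent IRI is a hypothetical placeholder.

```python
from __future__ import annotations

PROV = "http://www.w3.org/ns/prov#"
DCT = "http://purl.org/dc/terms/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
CC_BY_4 = "https://creativecommons.org/licenses/by/4.0/"

# Hypothetical IRIs for illustration only.
SPECIMEN = "https://doi.org/10.12345/specimen-0001"
IMAGE = "https://doi.org/10.12345/wsi-0001"
SCAN_RUN = "https://example.org/activity/scan-0001"
ANALYSIS_RUN = "https://example.org/activity/analysis-0001"
WORKFLOW = "https://example.org/workflow/tumor-detection.cwl"
OPERATOR = "https://example.org/agent/operator-01"


def triple(s: str, p: str, o: str) -> str:
    """Serialize one IRI-to-IRI statement as an N-Triples line."""
    return f"<{s}> <{p}> <{o}> ."


def provenance_triples() -> list[str]:
    return [
        triple(IMAGE, RDF_TYPE, PROV + "Entity"),
        triple(SCAN_RUN, RDF_TYPE, PROV + "Activity"),
        triple(ANALYSIS_RUN, RDF_TYPE, PROV + "Activity"),
        triple(OPERATOR, RDF_TYPE, PROV + "Agent"),
        # Lineage: the image was produced by scanning the specimen.
        triple(IMAGE, PROV + "wasDerivedFrom", SPECIMEN),
        triple(IMAGE, PROV + "wasGeneratedBy", SCAN_RUN),
        triple(SCAN_RUN, PROV + "used", SPECIMEN),
        triple(SCAN_RUN, PROV + "wasAssociatedWith", OPERATOR),
        # Analysis: the image and the workflow definition were both inputs.
        triple(ANALYSIS_RUN, PROV + "used", IMAGE),
        triple(ANALYSIS_RUN, PROV + "used", WORKFLOW),
        # Machine-readable license for reuse.
        triple(IMAGE, DCT + "license", CC_BY_4),
    ]


print("\n".join(provenance_triples()))
```

Treating the workflow as a plain `prov:used` input is a simplification. PROV-O can express it more precisely as the plan of a qualified association (`prov:qualifiedAssociation` with `prov:hadPlan`).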

The Scientist's Toolkit: Research Reagent Solutions for FAIR Digital Specimens

Table 2: Essential Tools for Implementing FAIR in Digital Specimens Research

| Tool Category | Specific Solution/Standard | Primary Function in FAIR Implementation |
|---|---|---|
| Persistent Identifiers | DataCite DOI, Handle System, RRID (for antibodies) | Provides globally unique, citable identifiers for datasets, specimens, and reagents (Findable). |
| Metadata Standards | ISA model, MIABIS (for biobanks), DDI | Provides structured, extensible frameworks for rich specimen description (Interoperable, Reusable). |
| Ontologies/Vocabularies | OBO Foundry Ontologies (UBERON, CL, HPO), SNOMED CT | Provides standardized, machine-actionable terms for annotation (Interoperable). |
| Data Repositories | Zenodo, EBI BioSamples, GHGA, AnVIL | Hosts data with FAIR-enforcing policies and provides access APIs (Accessible, Reusable). |
| Workflow Languages | Common Workflow Language (CWL), Nextflow | Encapsulates computational analysis methods for exact reproducibility (Reusable). |
| Provenance Tracking | W3C PROV-O, Research Object Crates (RO-Crate) | Captures data history, transformations, and authorship (Reusable). |

An Integrated Experimental Protocol: From Siloed Specimen to FAIR Digital Object

This protocol outlines the end-to-end process for a histopathology digital specimen.

- Pre-digitization Curation: Label the physical specimen with a barcode linked to its Biobank Management System ID. Record pre-analytical variables (ischemia time, fixative) using BRISQ-aligned metadata.

- Digitization & PID Generation: Perform whole-slide imaging. Upon file generation, the LIMS triggers the minting of a new DOI via the DataCite API, binding it to the image file.

- Structured Metadata Annotation: A JSON-LD file is created using an ISA-Tab-derived template. Fields are populated with terms from UBERON (tissue: "lung"), SNOMED CT (diagnosis: "adenocarcinoma"), and Cell Ontology.

- Deposition & Access Control: The image file (e.g., .svs) and its JSON-LD metadata are uploaded to a trusted repository (e.g., Zenodo or an institutional node). The metadata is made publicly accessible immediately; the image file is placed under a managed access gate using a standard like GA4GH Passports.

- Workflow Packaging: The image analysis pipeline (e.g., a QuPath script for tumor detection) is packaged as a CWL workflow and deposited with its own PID, linked back to the input dataset DOI.

- Discovery: The public metadata, rich with ontological terms and the new DOI, is harvested by global search engines like Google Dataset Search and EBI's BioStudies, making the specimen Findable.
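The managed-access gate in the deposition step can be sketched as an authorization check over GA4GH Passport visas. The sketch assumes the Passport and visa JWTs have already been signature-checked and decoded upstream, so it inspects only the decoded visa claims. The dataset URL and the visa source are hypothetical. The field names follow the `ControlledAccessGrants` visa type, but the sketch does not handle expiry, `conditions`, or trust in the visa issuer, so it is not a complete Passport implementation.

```python
from __future__ import annotations

import time

# Hypothetical dataset identifier for the gated whole-slide images.
IMAGE_DATASET = "https://repository.example.org/datasets/wsi-0001"


def has_controlled_access(visas: list[dict], dataset: str, now: float | None = None) -> bool:
    """True if a decoded, already-verified visa grants ControlledAccessGrants for `dataset`."""
    now = time.time() if now is None else now
    for visa in visas:
        asserted = visa.get("asserted")  # Unix time at which the grant was made
        if (
            visa.get("type") == "ControlledAccessGrants"
            and visa.get("value") == dataset
            and isinstance(asserted, (int, float))
            and asserted <= now
        ):
            return True
    return False


def authorize(resource: str, visas: list[dict]) -> bool:
    """Metadata is always open; only the image payload is behind the gate."""
    if resource == "metadata":
        return True
    if resource == "image":
        return has_controlled_access(visas, IMAGE_DATASET)
    return False


grant = {
    "type": "ControlledAccessGrants",
    "value": IMAGE_DATASET,
    "source": "https://dac.example.org",  # hypothetical Data Access Committee
    "by": "dac",
    "asserted": 1_700_000_000,
}
print(authorize("metadata", []), authorize("image", []), authorize("image", [grant]))
```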

Data silos represent a critical vulnerability in the modern biomedical research ecosystem, directly impeding the pace of discovery and translation. For digital specimens research, a cornerstone of precision medicine, the systematic application of the FAIR principles provides a technical blueprint for dismantling these silos. By implementing persistent identifiers, standardized ontologies, interoperable models, and rich provenance, researchers transform static data into dynamic, interconnected, and trustworthy digital objects. The tools and protocols outlined herein provide an actionable roadmap for researchers, scientists, and drug development professionals to lead this essential transition, ensuring that valuable research assets are maximally leveraged for future breakthroughs.

The FAIR Guiding Principles for scientific data management and stewardship, formally published in 2016, represent a cornerstone for modern research, particularly in data-intensive fields like biodiversity and biomedicine. Within digital specimens research—which involves creating high-fidelity digital representations of physical biological specimens—the FAIR principles are not merely aspirational but a prerequisite for enabling large-scale, cross-disciplinary discovery. This in-depth technical guide deconstructs each principle, providing a rigorous framework for researchers, scientists, and drug development professionals to implement FAIR-compliant data ecosystems that accelerate innovation.

The Four Pillars: A Technical Deconstruction

Findable

The foundation of data utility. Metadata and data must be easy to find for both humans and computers. This requires globally unique, persistent identifiers and rich, searchable metadata.

- Core Technical Requirements:

- Persistent Identifiers (PIDs): Use schemes like DOIs, ARKs, or PURLs. For digital specimens, the CETAF PID and IGSN are emerging standards.

- Rich Metadata: Metadata must include the core descriptive elements (who, what, when, where) and comply with a domain-relevant, accessible, shared, and broadly applicable metadata schema (e.g., ABCD or Darwin Core for biodiversity data). Ontologies such as EDAM can be used to annotate bioinformatics operations, data types, and formats.

- Metadata Indexing: Metadata must be registered or indexed in a searchable resource, such as a Data Catalogue (e.g., GBIF, DataCite) or a Distributed Indexing System.
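The "who, what, when, where" requirement can be checked mechanically before a record is sent to an index. The sketch uses real Darwin Core term names, but several choices are assumptions for illustration rather than GBIF or TDWG requirements: which terms count as the minimum, the added identity check, and the rule that either coordinates or a locality answers "where".

```python
from __future__ import annotations

# Assumed minimum: per descriptive question, alternative groups of Darwin Core terms.
MINIMUM_TERMS = {
    "what": [["scientificName"]],
    "who": [["recordedBy"]],
    "when": [["eventDate"]],
    "where": [["decimalLatitude", "decimalLongitude"], ["locality"]],
    "identity": [["institutionCode", "catalogNumber"]],
}


def missing_elements(record: dict[str, str]) -> list[str]:
    """Return the questions the record leaves unanswered.

    A question is answered when every term in at least one of its groups is non-empty.
    """
    return [
        question
        for question, groups in MINIMUM_TERMS.items()
        if not any(all(record.get(term) for term in group) for group in groups)
    ]


record = {
    "institutionCode": "NHMX",  # hypothetical institution code
    "catalogNumber": "HERB-0001",
    "scientificName": "Quercus robur L.",
    "eventDate": "1998-06-14",
    "locality": "oak forest near stream",
}
print(missing_elements(record))  # ['who']
```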

Table 1: Key Components for Findability

| Component | Example Standards/Protocols | Role in Digital Specimens |
|---|---|---|
| Persistent Identifier | DOI, Handle, ARK, LSID, CETAF PID | Uniquely and permanently identifies a digital specimen record. |
| Metadata Schema | Darwin Core, ABCD, EML, DCAT | Provides a structured vocabulary for describing the specimen data. |
| Search Protocol | OAI-PMH, SPARQL, Elasticsearch API | Enables discovery by aggregators and search engines. |
| Resource Registry | DataCite, GBIF, re3data.org | Provides a globally searchable entry point for metadata. |

Accessible

Data are retrievable by their identifier using a standardized, open, and free protocol, and the conditions for authentication and authorization are stated clearly.

- Core Technical Requirements:

- Standardized Protocols: Data should be retrievable via standardized, open, and universally implementable communication protocols (e.g., HTTP/S, FTP). APIs (e.g., REST, GraphQL) are essential for machine access.

- Authentication & Authorization: The protocol must allow for an authentication and authorization procedure, where necessary. Clarity on access conditions is critical (e.g., OAuth 2.0, OpenID Connect). Metadata must remain accessible even if the data is restricted.

- Persistence Policy: The data and metadata should be available long-term, governed by a clear persistence policy.

Table 2: Accessibility Protocols and Policies

| Aspect | Implementation Example | Notes |
|---|---|---|
| Retrieval Protocol | HTTPS RESTful API (JSON-LD) | Standard web protocol; API returns structured data. |
| Authentication | OAuth 2.0 with JWT Tokens | Enables secure, delegated access to sensitive data. |
| Authorization | Role-Based Access Control (RBAC) | Grants permissions based on user role (e.g., public, researcher, curator). |
| Metadata Access | Always openly accessible via PID | Even if specimen data is restricted, its metadata is findable and accessible. |
| Persistence | Commitment via a digital repository's certification (e.g., CoreTrustSeal). | Guarantees long-term availability. |
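The RBAC row can be sketched as a mapping from roles to permitted actions. Every role, including anonymous users, is granted metadata reads so that restricted specimens stay findable. The role names come from the table; the action names and the policy itself are hypothetical.

```python
from __future__ import annotations

from enum import Enum


class Action(Enum):
    READ_METADATA = "read_metadata"
    READ_DATA = "read_data"
    EDIT_METADATA = "edit_metadata"


# Role -> permitted actions; every role, including "public", may read metadata.
POLICY = {
    "public": {Action.READ_METADATA},
    "researcher": {Action.READ_METADATA, Action.READ_DATA},
    "curator": {Action.READ_METADATA, Action.READ_DATA, Action.EDIT_METADATA},
}


def is_allowed(role: str | None, action: Action, data_restricted: bool) -> bool:
    """Decide access; a missing or unknown role is treated as 'public'."""
    permitted = POLICY.get(role or "public", POLICY["public"])
    if action is Action.READ_DATA and not data_restricted:
        return True  # openly licensed data needs no role
    return action in permitted


for role in (None, "researcher", "curator"):
    print(role, is_allowed(role, Action.READ_DATA, data_restricted=True))
```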

Diagram 1: Data Access Workflow with Auth

Interoperable

Data must integrate with other data, and work with applications or workflows for analysis, storage, and processing. This requires shared languages and vocabularies.

- Core Technical Requirements:

- Vocabularies & Ontologies: Use of formal, accessible, shared, and broadly applicable knowledge representations (ontologies, terminologies, thesauri). For digital specimens, this includes ITIS, NCBI Taxonomy, OBO Foundry ontologies (e.g., UBERON, PATO), and domain-specific extensions.

- Qualified References: Metadata should include qualified references to other (meta)data, using PIDs to link related digital specimens, publications, sequences, or chemical compounds.

- Standard Data Formats: Use of community-endorsed, open data formats (e.g., JSON-LD, RDF, NeXML) that embed semantic meaning.

Experimental Protocol: Mapping Specimen Data to a Common Ontology

- Objective: To enhance interoperability by annotating a digital specimen dataset with terms from a standard ontology.

- Methodology:

- Data Extraction: Isolate key descriptive fields from the specimen record (e.g., anatomical location, measured trait, environmental context).

- Vocabulary Identification: Identify the most relevant community ontology (e.g., UBERON for anatomy; ENVO for environment; ChEBI for chemical compounds).

- Term Mapping: Use a tool like ZOOMA to suggest mappings from free-text labels to ontology class URIs, and OxO (Ontology Xref Service) to find cross-references between equivalent terms in different ontologies.

- Curation & Validation: Manual review and correction of automated mappings by a domain expert.

- Serialization: Embed the resulting ontology URIs within the data file using a semantic web format like RDF/XML or JSON-LD. The rdfs:label can be retained for human readability alongside the skos:exactMatch link to the ontology class.
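The serialization step can be sketched in JSON-LD using only the standard library. Each curated field keeps its original free text as `rdfs:label` and points to its ontology class with `skos:exactMatch`. The specimen IRI, the `ex:` field namespace, and the curated mappings are hypothetical. UBERON_0002048 (lung) and ENVO_00000023 (stream) serve as example target classes.

```python
from __future__ import annotations

import json

OBO = "http://purl.obolibrary.org/obo/"

# Curated result of the review step: field -> (original free text, ontology class IRI).
CURATED = {
    "anatomicalLocation": ("lung", OBO + "UBERON_0002048"),
    "environmentalContext": ("stream", OBO + "ENVO_00000023"),
}


def to_jsonld(specimen_iri: str, curated: dict[str, tuple[str, str]]) -> dict:
    """Keep each free-text value as rdfs:label and link it to its class via skos:exactMatch."""
    specimen_node: dict = {"@id": specimen_iri}
    graph = [specimen_node]
    for field_name, (label, class_iri) in curated.items():
        value_iri = f"{specimen_iri}#{field_name}"
        specimen_node[f"ex:{field_name}"] = {"@id": value_iri}
        graph.append({
            "@id": value_iri,
            "rdfs:label": label,                    # human-readable original text
            "skos:exactMatch": {"@id": class_iri},  # machine-actionable class link
        })
    return {
        "@context": {
            "rdfs": "http://www.w3.org/2000/01/rdf-schema#",
            "skos": "http://www.w3.org/2004/02/skos/core#",
            "ex": "https://example.org/terms/",  # hypothetical field namespace
        },
        "@graph": graph,
    }


doc = to_jsonld("https://example.org/specimen/0001", CURATED)  # hypothetical specimen IRI
print(json.dumps(doc, indent=2))
```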

Diagram 2: Ontology Mapping for Interop

Reusable

The ultimate goal of FAIR. Data and metadata are richly described so they can be replicated, combined, or reused in different settings. This hinges on provenance and clear licensing.

- Core Technical Requirements:

- Rich Provenance: Data must be described with a plurality of accurate and relevant attributes, including detailed provenance (origin, processing history) using standards like PROV-O.

- Domain-Relevant Community Standards: Data should meet relevant discipline-specific standards (e.g., MIxS standards for genomic data).

- Clear Usage License: Data must be released with a clear and accessible data usage license (e.g., CC0, CC-BY, ODC-BY).

Table 3: Essential Elements for Reusability

| Element | Description | Example Standard |
|---|---|---|
| Provenance | A complete record of the origin, custody, and processing of the data. | PROV-O, W3C PROV-DM |
| Domain Standards | Compliance with field-specific reporting requirements. | MIxS, MIAPE, ARRIVE guidelines |
| License | A clear statement of permissions for data reuse. | Creative Commons, Open Data Commons |
| Citation Metadata | Accurate and machine-actionable information needed to cite the data. | DataCite Metadata Schema, Citation File Format (CFF) |
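Citation metadata is most useful when a citation can be generated from it mechanically. The sketch below formats a record loosely following DataCite's recommended citation pattern (Creator (PublicationYear). Title. Version. Publisher. ResourceType. Identifier) and skips optional parts that are absent. The record values and DOI are hypothetical.

```python
def format_citation(record: dict) -> str:
    """Build a human-readable citation from DataCite-style fields, skipping absent optional parts."""
    creators = "; ".join(creator["name"] for creator in record["creators"])
    parts = [f"{creators} ({record['publicationYear']}).", f"{record['title']}."]
    if record.get("version"):
        parts.append(f"Version {record['version']}.")
    parts.append(f"{record['publisher']}.")
    if record.get("resourceTypeGeneral"):
        parts.append(f"{record['resourceTypeGeneral']}.")
    parts.append(f"https://doi.org/{record['doi']}")
    return " ".join(parts)


record = {
    "creators": [{"name": "Doe, Jane"}, {"name": "Roe, Richard"}],  # hypothetical
    "publicationYear": 2024,
    "title": "Whole-slide image of specimen TS-0001",
    "publisher": "Example Biobank",
    "resourceTypeGeneral": "Image",
    "doi": "10.12345/wsi-0001",
}
print(format_citation(record))
```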

The Scientist's Toolkit: Essential Research Reagent Solutions for FAIR Digital Specimens

Implementing FAIR requires a suite of technical and conceptual tools. Below is a table of key "reagents" for creating FAIR digital specimens.

Table 4: Research Reagent Solutions for FAIR Digital Specimens

| Item | Category | Function in FAIRification |
|---|---|---|
| PID Generator/Resolver | Infrastructure | Assigns and resolves Persistent Identifiers (e.g., DataCite DOI, Handle). |
| Metadata Editor (FAIR-shaped) | Software | Guides users in creating rich, schema-compliant metadata (e.g., CEDAR, MetaData.js). |
| Ontology Lookup Service | Semantic Tool | Provides APIs to search and access terms from major ontologies (e.g., OLS, BioPortal). |
| RDF Triple Store | Database | Stores and queries semantic (RDF) data, enabling linked data integration (e.g., GraphDB, Virtuoso). |
| FAIR Data Point | Middleware | A standardized metadata repository that exposes metadata for both humans and machines via APIs. |
| Workflow Management System | Orchestration | Captures and records data provenance automatically (e.g., Nextflow, Snakemake, Galaxy). |
| Trusted Digital Repository | Infrastructure | Provides long-term preservation and access, often with CoreTrustSeal certification (e.g., Zenodo, Dryad). |
| Data Use License Selector | Legal Tool | Helps choose an appropriate machine-readable license for data (e.g., RIGHTS statement wizard). |

Achieving FAIR is not a binary state but a continuum. For digital specimens, which serve as the bridge between physical collections and computational analysis, each principle reinforces the others. A Findable specimen with a PID becomes Accessible via a standard API; when enriched with Interoperable ontological annotations, its potential for Reuse in novel, cross-domain research—such as drug discovery from natural products—is maximized. The protocols and toolkits outlined herein provide a concrete foundation for researchers to build a more open, collaborative, and efficient scientific future.

The digital transformation of natural science collections—creating Digital Specimens—demands a robust framework to ensure data is not only accessible but inherently reusable. This whitepaper positions the synergy between FAIR (Findable, Accessible, Interoperable, Reusable) principles and Open Science as the critical catalyst for global collaboration in biodiversity and biomedical research. For drug development professionals, this synergy accelerates the discovery of novel bioactive compounds from natural sources by enabling seamless integration of specimen-derived data with genomic, chemical, and phenotypic datasets.

Foundational Principles: FAIR Meets Open Science

FAIR Principles provide a technical framework for data stewardship, independent of its openness. Open Science is a broad movement advocating for transparent and accessible knowledge. Their synergy is not automatic; FAIR data can be closed (e.g., commercial, private) and open data can be non-FAIR (e.g., a PDF in a repository without metadata). The catalytic effect emerges when data is both FAIR and Open, creating a frictionless flow of high-quality, machine-actionable information.

Quantitative Impact of FAIR and Open Science Adoption

Recent studies quantify the tangible benefits of implementing FAIR and Open Science practices in life sciences research.

Table 1: Impact Metrics of FAIR and Open Science Practices

| Metric | Pre-FAIR/Open Baseline | Post-FAIR/Open Implementation | Data Source & Year |
|---|---|---|---|
| Data Reuse Rate | 5-10% of datasets cited | 30-50% increase in dataset citations | PLOS ONE, 2022 |
| Time to Data Discovery | Hours to days (manual search) | Minutes (machine search via APIs) | Scientific Data, 2023 |
| Inter-study Data Integration Success | <20% (schema conflicts) | >70% (using shared ontologies) | Nature Communications, 2023 |
| Reproducibility of Computational Workflows | ~40% reproducible | ~85% reproducible (with containers & metadata) | GigaScience, 2023 |

Technical Implementation for Digital Specimens

A Digital Specimen is a rich digital object aggregating data about a physical biological specimen. Its FAIRification is a prerequisite for large-scale, cross-disciplinary research.

Core Metadata Schema and Persistent Identification

Experimental Protocol: Minting Persistent Identifiers (PIDs) and Annotation

- Objective: To uniquely and persistently identify a digital specimen and its associated data.

- Materials: Local specimen database, HTTP server, resolver service (e.g., Handle System, DOI), metadata schema (e.g., OpenDS, ABCD-EFG).

- Procedure:

a. For each physical specimen, ensure a stable local catalog number exists.

b. Register for a prefix from a PID provider (e.g., DataCite, ePIC).

c. Create a minimal metadata record containing: creator, publication year, title (scientific name), publisher (collection), resource type (e.g., PhysicalObject), and a URL pointing to the digital specimen landing page.

d. Mint a PID (e.g., DOI, ARK) by posting the metadata to the provider's API. The PID is bound to this metadata.

e. Configure the PID resolver to redirect to the specimen's dynamic landing page.

f. Annotate the specimen record in the institutional database with the PID. All subsequent data outputs (images, sequences, analyses) should link back to this PID as the isDerivedFrom source.
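Steps c, d, and f can be sketched against the DataCite REST API, which accepts JSON:API documents at its `/dois` endpoint. The prefix `10.12345`, the credentials, and all URLs other than the test endpoint are hypothetical. The sketch only builds the payloads; `submit` performs a real network call and is not invoked. Required properties vary with the DataCite Metadata Schema version, so check the current documentation before use.

```python
from __future__ import annotations

import base64
import json
import urllib.request

DATACITE_TEST_API = "https://api.test.datacite.org/dois"
PREFIX = "10.12345"  # hypothetical prefix assigned to the repository


def doi_payload(suffix: str, title: str, publisher: str, year: int, url: str,
                creator: str, resource_type: str = "PhysicalObject",
                derived_from: str | None = None) -> dict:
    """Build a DataCite JSON:API document for one DOI (steps c-d, f)."""
    attributes = {
        "doi": f"{PREFIX}/{suffix}",
        "event": "publish",
        "creators": [{"name": creator}],
        "titles": [{"title": title}],
        "publisher": publisher,
        "publicationYear": year,
        "types": {"resourceTypeGeneral": resource_type},
        "url": url,  # landing page the DOI resolves to (step e)
    }
    if derived_from is not None:
        attributes["relatedIdentifiers"] = [{
            "relatedIdentifier": derived_from,
            "relatedIdentifierType": "DOI",
            "relationType": "IsDerivedFrom",
        }]
    return {"data": {"type": "dois", "attributes": attributes}}


def submit(payload: dict, repository_id: str, password: str) -> dict:
    """POST a payload to the DataCite test API. Makes a network call; not run below."""
    token = base64.b64encode(f"{repository_id}:{password}".encode()).decode()
    request = urllib.request.Request(
        DATACITE_TEST_API,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/vnd.api+json",
                 "Authorization": f"Basic {token}"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


specimen = doi_payload("herb-0001", "Quercus robur L.", "Example Herbarium", 2024,
                       "https://collection.example.org/specimens/HERB-0001",
                       creator="Example Herbarium")
image = doi_payload("herb-0001-img1", "Image of Quercus robur L. (HERB-0001)",
                    "Example Herbarium", 2024,
                    "https://collection.example.org/images/HERB-0001-1",
                    creator="Example Herbarium", resource_type="Image",
                    derived_from=specimen["data"]["attributes"]["doi"])
print(json.dumps(image, indent=2))
```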

Diagram 1: PID Minting and Linking Workflow

Semantic Interoperability Through Ontologies

To enable machine-actionability (the "I" in FAIR), data must be annotated with shared, resolvable vocabularies.

Experimental Protocol: Ontological Annotation of Specimen Data

- Objective: To annotate a specimen's "habitat" field for cross-collection querying.

- Materials: Specimen record, ontology lookup service (e.g., OLS, BioPortal), target ontology (e.g., Environment Ontology - ENVO), RDF triplestore.

- Procedure:

a. Extract the free-text habitat description (e.g., "oak forest near stream").

b. Use ontology service APIs to find candidate terms. Query for "oak forest" and "stream".

c. Select the most specific matching term URIs: http://purl.obolibrary.org/obo/ENVO_01000819 (oak forest biome) and http://purl.obolibrary.org/obo/ENVO_00000023 (stream).

d. Model the annotation as RDF triples using a schema like Darwin Core.

e. Ingest the triples into a linked data platform, making them queryable via SPARQL.
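Step d can be sketched as N-Triples. The verbatim habitat text goes in `dwc:habitat`, and the selected ENVO classes are attached with `dwciri:habitat`, the IRI-valued counterpart defined in the Darwin Core RDF guide. The specimen IRI is hypothetical, and the ENVO IRIs are those selected in step c. The SPARQL query shows the kind of question step e makes answerable.

```python
from __future__ import annotations

DWC = "http://rs.tdwg.org/dwc/terms/"
DWCIRI = "http://rs.tdwg.org/dwc/iri/"
SPECIMEN = "https://example.org/specimen/0001"  # hypothetical specimen IRI
HABITAT_TEXT = "oak forest near stream"
ENVO_TERMS = [
    "http://purl.obolibrary.org/obo/ENVO_01000819",  # oak forest biome (step c)
    "http://purl.obolibrary.org/obo/ENVO_00000023",  # stream (step c)
]


def literal(text: str) -> str:
    """Quote a plain N-Triples literal, escaping characters the syntax reserves."""
    escaped = (text.replace("\\", "\\\\").replace('"', '\\"')
               .replace("\n", "\\n").replace("\r", "\\r"))
    return f'"{escaped}"'


def habitat_triples() -> list[str]:
    """Verbatim text via dwc:habitat plus one dwciri:habitat link per ENVO class."""
    lines = [f"<{SPECIMEN}> <{DWC}habitat> {literal(HABITAT_TEXT)} ."]
    lines += [f"<{SPECIMEN}> <{DWCIRI}habitat> <{iri}> ." for iri in ENVO_TERMS]
    return lines


# A query that step e makes possible once the triples are loaded into a triplestore.
QUERY = f"""PREFIX dwciri: <{DWCIRI}>
SELECT ?specimen WHERE {{
  ?specimen dwciri:habitat <{ENVO_TERMS[1]}> .
}}"""

print("\n".join(habitat_triples()))
print(QUERY)
```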

Enabling Global Collaboration: Workflows and Pathways

The synergy creates new collaborative pathways. For drug discovery, a researcher can find digital specimens of a plant genus, link to metabolomics data profiled from those specimens, and identify candidate compounds for assay.

Diagram 2: FAIR-Open Drug Discovery Pathway

Detailed Collaborative Protocol: From Specimen to Candidate Compound

- Objective: Identify a candidate bioactive compound from a digital specimen.

- Materials: FAIR digital specimen PID, federated SPARQL endpoint, metabolomics data repository (e.g., MetaboLights), cheminformatics software (e.g., RDKit), virtual screening workflow.

- Procedure:

a. Use a global portal (e.g., DiSSCo's unified API) to find digital specimens of a target taxon using a taxonomic ontology term (e.g., NCBITaxon:*).

b. Retrieve the PID for each specimen and query a knowledge graph for linked metabolomics datasets via the isDerivedFrom relationship.

c. Access the raw spectral data from the repository using its API (ensuring open licensing).

d. Process the data to identify molecular features and dereplicate against known compound libraries.

e. For novel features, predict molecular structures and generate 3D conformers.

f. Perform molecular docking against a publicly available protein target (e.g., from PDB) using a containerized workflow (e.g., Nextflow) shared on a platform like WorkflowHub.

g. Share the resulting candidate list and workflow with collaborators for validation.
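The core comparison behind step d (dereplication) is matching observed precursor m/z values against library masses within a parts-per-million tolerance. This sketch assumes singly protonated [M+H]+ ions, a 5 ppm tolerance, and a hypothetical three-entry library with placeholder masses. Real dereplication also uses isotope patterns, retention times, and MS/MS spectra, usually through dedicated tools.

```python
from __future__ import annotations

PROTON_MASS = 1.007276  # Da; assumes singly protonated [M+H]+ ions

# Hypothetical library: name -> monoisotopic neutral mass (Da). Placeholder values only.
LIBRARY = {
    "library_compound_A": 300.0000,
    "library_compound_B": 450.1200,
    "library_compound_C": 612.3400,
}


def ppm_error(observed: float, theoretical: float) -> float:
    """Signed mass error in parts per million."""
    return (observed - theoretical) / theoretical * 1e6


def dereplicate(features: list[float], tolerance_ppm: float = 5.0):
    """Split observed m/z values into library matches and unexplained (candidate novel) features."""
    matches, novel = [], []
    for mz in features:
        hits = []
        for name, mass in LIBRARY.items():
            error = ppm_error(mz, mass + PROTON_MASS)
            if abs(error) <= tolerance_ppm:
                hits.append((name, round(error, 2)))
        if hits:
            matches.append((mz, hits))
        else:
            novel.append(mz)
    return matches, novel


matches, novel = dereplicate([301.0080, 451.1290, 500.2500])
print(matches)  # features explained by the library, with ppm errors
print(novel)    # features passed on to structure prediction (step e)
```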

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Key Reagents & Tools for FAIR Digital Specimen Research

| Tool/Reagent Category | Specific Example(s) | Function in FAIR/Open Workflow |
|---|---|---|
| PID Provider | DataCite, ePIC (Handle), ARK | Mints persistent, globally unique identifiers for specimens and datasets. |
| Metadata Schema | Darwin Core, OpenDS, ABCD-EFG | Provides standardized templates for structuring specimen metadata. |
| Ontology Service | OLS, BioPortal, OntoPortal | Enables lookup and mapping of terms to URIs for semantic annotation. |
| Trustworthy Repository | Zenodo, Figshare, ENA, MetaboLights | Preserves data with integrity, provides PIDs, and ensures long-term access. |
| Knowledge Graph Platform | Wikibase, GraphDB, Virtuoso | Stores and queries RDF triples, enabling complex cross-domain queries. |
| Workflow Management | Nextflow, Snakemake, CWL | Encapsulates computational methods in reusable, reproducible scripts. |
| Containerization | Docker, Singularity | Packages software and dependencies for portability across compute environments. |
| Accessibility Service | Data Access Committee (DAC) tools, OAuth2 | Manages controlled access where open sharing is not permissible, ensuring "A" in FAIR. |

Within the framework of a broader thesis on FAIR (Findable, Accessible, Interoperable, Reusable) data principles for digital specimens research, the role of dedicated Research Infrastructures (RIs) is paramount. This technical guide examines two cornerstone stakeholders: the Global Biodiversity Information Facility (GBIF) and the Distributed System of Scientific Collections (DiSSCo). These infrastructures are engineering the technological and governance frameworks necessary to transform physical natural science collections into a globally integrated digital resource, thereby accelerating discovery in fields including pharmaceutical development.

Core Stakeholders: Missions and Architectures

Global Biodiversity Information Facility (GBIF)

GBIF is an international network and data infrastructure funded by governments, focused on providing open access to data about all types of life on Earth. It operates primarily as a federated data aggregator, harvesting and indexing occurrence records from publishers worldwide.

Key Architecture: The GBIF data model centers on the Darwin Core Standard, a set of terms facilitating the exchange of biodiversity information. Its infrastructure is built on a harvesting model where data publishers (museums, universities, projects) publish data in standardized formats, which GBIF then indexes, providing a unified search portal and API.
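GBIF's occurrence search endpoint (`https://api.gbif.org/v1/occurrence/search`) returns paged JSON with a `results` array and an `endOfRecords` flag, and accepts `taxonKey`, `limit`, and `offset` parameters. This minimal paging sketch assumes a placeholder taxon key and an arbitrary page size. The `fetch` function is injectable so the paging logic can be tested offline. For large extracts, GBIF's asynchronous download service is generally better suited than paging the search API.

```python
# Sketch of paging through GBIF's occurrence search API. The taxon key is a
# placeholder; `fetch` is injectable so the paging logic can be tested offline.
import json
from typing import Callable, Dict, Iterator
from urllib.parse import urlencode
from urllib.request import urlopen

GBIF_OCCURRENCE_SEARCH = "https://api.gbif.org/v1/occurrence/search"


def http_get_json(url: str) -> Dict:
    """Perform a GET request and decode the JSON body."""
    with urlopen(url, timeout=30) as response:
        return json.load(response)


def iter_occurrences(
    taxon_key: int, page_size: int = 100, fetch: Callable[[str], Dict] = http_get_json
) -> Iterator[Dict]:
    """Yield occurrence records page by page until GBIF reports endOfRecords."""
    offset = 0
    while True:
        query = urlencode({"taxonKey": taxon_key, "limit": page_size, "offset": offset})
        page = fetch(f"{GBIF_OCCURRENCE_SEARCH}?{query}")
        yield from page.get("results", [])
        # Treat a missing flag as the last page to avoid looping forever.
        if page.get("endOfRecords", True):
            break
        offset += page_size
```

A generator keeps memory use flat: callers can stream records into a file or database without holding every page at once.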

Distributed System of Scientific Collections (DiSSCo)

DiSSCo is a pan-European Research Infrastructure that aims to unify and digitize the continent's natural science collections under a common governance and access framework. Its vision extends beyond data aggregation to the digitization of the physical specimen itself as a Digital Specimen.

Key Architecture: DiSSCo is developing a digital specimen architecture centered on a persistent identifier (PID) for each digital specimen. This PID links to a mutable digital object that can be enriched with data, annotations, and links throughout its research lifecycle. It builds on the FAIR Digital Object framework.
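The PID-plus-mutable-object idea can be illustrated with a small in-memory model. In it, the identifier never changes, while each enrichment creates a new version and leaves a provenance trail. This is a hypothetical sketch, not the openDS schema: the field names, the PID string, and the versioning scheme are all assumptions.

```python
# Hypothetical model of a Digital Specimen: a fixed PID bound to a mutable,
# versioned set of attributes. Not the openDS schema; names are illustrative.
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, List


@dataclass
class DigitalSpecimen:
    pid: str                   # persistent identifier; never reassigned
    physical_specimen_id: str  # e.g., the institutional catalog number
    attributes: Dict[str, Any]
    version: int = 1
    history: List[Dict[str, Any]] = field(default_factory=list)

    def enrich(self, key: str, value: Any, agent: str) -> None:
        """Record the current state, then apply the change as a new version."""
        self.history.append({
            "version": self.version,
            "attributes": dict(self.attributes),  # snapshot before the change
            "changed_key": key,
            "agent": agent,
            "timestamp": datetime.now(timezone.utc).isoformat(),
        })
        self.attributes[key] = value
        self.version += 1


if __name__ == "__main__":
    ds = DigitalSpecimen("example-prefix/DS-0001", "CAT-42", {"scientificName": "Quercus robur"})
    ds.enrich("habitat", "oak forest", agent="curator-1")
    print(ds.pid, ds.version, ds.attributes)
```

Because only the attributes change, anything that cites the PID keeps pointing at the same object while the history records who enriched it and when.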

Quantitative Comparison of Scope and Output

The following table summarizes the core quantitative metrics and focus of both infrastructures, based on figures available at the time of writing.

Table 1: Comparative Analysis of DiSSCo and GBIF

| Metric | GBIF | DiSSCo |
|---|---|---|
| Primary Scope | Global biodiversity data aggregation | European natural science collections digitization & unification |
| Core Unit | Occurrence Record | Digital Specimen (a FAIR Digital Object) |
| Data Model | Darwin Core (Extended) | Open Digital Specimen (openDS) model |
| Record Count | ~2.8 billion occurrence records | ~1.5 billion physical specimens to be digitized |
| Participant Count | 112+ Participant Countries/Organizations | 120+ leading European institutions |
| Key Service | Data discovery & access via portal/API | Digitization, curation, and persistent enrichment of digital specimens |
| FAIR Focus | Findable, Accessible | Interoperable, Reusable (with persistent provenance) |

Methodological Protocols for Digital Specimen Research

The creation and use of FAIR digital specimens involve defined experimental and data protocols.

Protocol for Creating a FAIR Digital Specimen

This protocol outlines the steps to transform a physical specimen into a reusable digital research object.

- Specimen Selection & Barcoding: A physical specimen with a unique institutional catalog number is selected. A 2D barcode (e.g., DataMatrix) linking to this identifier is attached.

- Image Capture: High-resolution 2D imaging (e.g., of herbarium sheets or insect drawers) or 3D imaging (e.g., micro-CT for fossils) is performed following standardized lighting and scale bar protocols.

- Data Transcription & Enhancement: Label data is transcribed into structured fields. Taxonomic identification is verified or updated, linking to authoritative vocabularies (e.g., GBIF Backbone Taxonomy).

- Minting the Persistent Identifier (PID): A globally unique, persistent identifier (e.g., a DOI, Handle, or ARK) is minted for the digital representation of the specimen.

- Metadata Generation & Packaging: A metadata record compliant with the openDS standard is created, containing the PID, provenance of digitization, links to images, and associated data. All components are packaged as a FAIR Digital Object.

- Publication & Registration: The Digital Specimen is published to a trusted repository (e.g., the DiSSCo Cloud) and its PID is registered in a global resolver system. Metadata is harvested by aggregators like GBIF.

Protocol for Cross-Infrastructure Data Linkage Analysis

This methodology is used to study the interoperability and data enrichment pathways between infrastructures.

- Dataset Acquisition: A focused taxonomic group (e.g., Asteraceae) is selected. Occurrence records are downloaded via the GBIF API.

- PID Extraction & Resolution: Records derived from DiSSCo-participating institutions are filtered, and the `occurrenceID` field (containing the institutional PID) is parsed.

- Link Validation: Each institutional PID is resolved via its native system (e.g., the museum's collection portal) and via the DiSSCo PID resolver (when available) to check for active links to digital specimens.

- Metadata Enrichment Comparison: The metadata available in the GBIF Darwin Core record is compared against the full metadata and digital assets available at the source Digital Specimen.

- Quantitative Analysis: The percentage of records with resolvable PIDs, the latency of resolution, and the volume of additional data accessible via the source are calculated and visualized.
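Once each record has been resolved and compared, the quantitative analysis reduces to a few summary statistics. This minimal sketch assumes the earlier steps produce one hypothetical `ResolutionResult` per record. It reports the median rather than the mean for latency, because response times are often skewed by a few slow resolvers.

```python
# Summary statistics for the cross-infrastructure linkage analysis.
# ResolutionResult is a hypothetical container for per-record outcomes.
from dataclasses import dataclass
from statistics import median
from typing import Dict, List, Optional


@dataclass
class ResolutionResult:
    occurrence_id: str
    resolved: bool
    latency_ms: Optional[float]  # None when the request failed
    extra_fields: int            # fields at the source that the GBIF record lacks


def summarize(results: List[ResolutionResult]) -> Dict[str, Optional[float]]:
    """Percentage of resolvable PIDs, median latency, and mean extra metadata."""
    total = len(results)
    ok = [r for r in results if r.resolved]
    latencies = [r.latency_ms for r in ok if r.latency_ms is not None]
    return {
        "records": total,
        "resolvable_pct": round(100 * len(ok) / total, 1) if total else 0.0,
        "median_latency_ms": median(latencies) if latencies else None,
        "mean_extra_fields": round(sum(r.extra_fields for r in ok) / len(ok), 1) if ok else 0.0,
    }
```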

Visualizing Relationships and Workflows

Relationship between Physical Specimens, DiSSCo, and GBIF

Diagram 1: Specimen data flow across infrastructures

FAIR Digital Specimen Creation Workflow

Diagram 2: Digital specimen creation protocol

The Scientist's Toolkit: Research Reagent Solutions

For researchers engaging with digital specimens and biodiversity data infrastructures, the following "digital reagents" are essential.

Table 2: Essential Digital Research Toolkit

| Tool / Solution | Primary Function | Relevance to Drug Development |
|---|---|---|
| GBIF API | Programmatic access to billions of species occurrence records. | Identify geographic sources of biologically active species; model species distribution under climate change for supply chain planning. |
| DiSSCo PID Resolver | A future service to resolve Persistent Identifiers to Digital Specimen records. | Trace the exact voucher specimen used in a published bioactivity assay for reproducibility and compound re-isolation. |
| CETAF Stable Identifiers | Persistent identifiers for specimens from Consortium of European Taxonomic Facilities institutions. | Unambiguously cite biological source material in patent applications and regulatory documentation. |
| openDS Data Model | Standardized schema for representing digital specimens as enriched, mutable objects. | Enrich specimen records with proprietary lab data (e.g., NMR results) while maintaining link to authoritative source. |
| Specify 7 / Collection Management Systems | Software for managing collection data and digitization workflows. | The backbone for institutions publishing high-quality, research-ready data to DiSSCo and GBIF. |
| R Packages (rgbif, SPARQL) | Libraries for accessing GBIF data and linked open data (e.g., from Wikidata). | Integrate biodiversity data pipelines into bioinformatics workflows for large-scale, automated analysis. |

Building the FAIR Digital Specimen: A Step-by-Step Implementation Framework

Within the broader thesis on implementing FAIR (Findable, Accessible, Interoperable, Reusable) data principles for digital specimens in life sciences research, the initial and foundational step is ensuring Findability. This technical guide details the core components for achieving this: structured metadata schemas and persistent identifiers (PIDs). For researchers, scientists, and drug development professionals, these are the essential tools to make complex digital specimens—detailed digital representations of physical biological samples—discoverable and reliably citable across distributed data infrastructures.

Core Concepts

Persistent Identifiers (PIDs)

PIDs are long-lasting references to digital objects, independent of their physical location. They resolve to the object's current location and are associated with essential metadata maintained by the PID service. For digital specimens, they provide unambiguity and permanence.
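Because PIDs are location-independent, client code usually needs to turn a PID string into an actionable resolver URL. This minimal sketch assumes the common resolver base URLs for DOIs (`https://doi.org/`), Handles (`https://hdl.handle.net/`), and ARKs (`https://n2t.net/`). The prefix-detection rules are simplified assumptions, not a complete grammar for each scheme.

```python
# Normalize common PID forms into resolver URLs. Detection rules are simplified.
import re

RESOLVERS = {
    "doi": "https://doi.org/",
    "hdl": "https://hdl.handle.net/",
    "ark": "https://n2t.net/",
}

DOI_PATTERN = re.compile(r"^10\.\d{4,9}/")    # bare DOI such as 10.4126/...
HANDLE_PATTERN = re.compile(r"^\d+(\.\d+)*/")  # bare Handle such as 20.500.x/...


def to_resolvable_url(pid: str) -> str:
    """Return an HTTP(S) URL that a browser or HTTP client can dereference."""
    pid = pid.strip()
    if pid.startswith(("http://", "https://")):
        return pid  # already actionable
    lower = pid.lower()
    if lower.startswith("doi:"):
        return RESOLVERS["doi"] + pid[4:]
    if lower.startswith("hdl:"):
        return RESOLVERS["hdl"] + pid[4:]
    if lower.startswith("ark:"):
        return RESOLVERS["ark"] + pid  # n2t expects the full "ark:/..." string
    if DOI_PATTERN.match(pid):
        return RESOLVERS["doi"] + pid
    if HANDLE_PATTERN.match(pid):
        return RESOLVERS["hdl"] + pid
    raise ValueError(f"Unrecognized PID form: {pid!r}")
```

The DOI check runs before the generic Handle check because every DOI also matches the looser Handle pattern.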

Metadata Schemas

A metadata schema is a structured framework that defines the set of attributes, their definitions, and the rules for describing a digital object. A well-defined schema ensures that specimens are described consistently, enabling both human and machine discovery.

Current Implementations and Quantitative Comparison

Widely Adopted PID Systems

Table 1: Comparison of Key Persistent Identifier Systems

| System | Prefix Example | Administering Body | Typical Resolution | Key Features for Digital Specimens |
|---|---|---|---|---|
| DOI | 10.4126/ | DataCite, Crossref | https://doi.org/ | Ubiquitous in publishing; offers rich metadata (DataCite Schema). |
| Handle | 20.5000.1025/ | DONA Foundation | https://hdl.handle.net/ | Underpins DOI; flexible, used by EU-funded repositories. |
| ARK | ark:/12345/ | Various organisations | https://n2t.net/ | Emphasis on persistence promises; allows variant URLs. |
| PURL | purl.obolibrary.org/ | Internet Archive | https://purl.org/ | Stable URLs that redirect; common for ontologies. |
| IGSN | 20.500.11812/ | IGSN e.V. | https://igsn.org/ | Specialized for physical samples, linking to derivatives. |

Prominent Metadata Schemas

Table 2: Comparison of Relevant Metadata Schemas

| Schema | Maintainer | Scope | Key Attributes | Relation to FAIR |
|---|---|---|---|---|
| DataCite Metadata Schema | DataCite | Generic for research outputs. | identifier, creator, title, publisher, publicationYear, resourceType. | Core for F1 (PID) and F2 (Rich metadata). |
| DCAT (Data Catalog Vocabulary) | W3C | Data catalogs & datasets. | dataset, distribution, accessURL, theme (ontology). | Enables federation of catalogs (F4). |
| ABCD (Access to Biological Collection Data) | TDWG | Natural history collections. | unitID, recordBasis, identifiedBy, collection. | Domain-specific for specimen data. |
| Darwin Core | TDWG | Biodiversity informatics. | occurrenceID, scientificName, eventDate, locationID. | Lightweight standard for sharing data. |
| ODIS (OpenDS) Schema | DiSSCo | Digital Specimens | digitalSpecimenPID, physicalSpecimenId, topicDiscipline, objectType. | Emerging standard for digital specimen infrastructure. |

Experimental Protocol: Minting and Resolving a PID for a Digital Specimen

Protocol Title: Protocol for Assigning and Resolving a DataCite DOI to a Digital Specimen Record.

Objective: To create a globally unique, persistent, and resolvable identifier for a digital specimen record, enabling its findability.

Materials/Reagent Solutions:

- Research Repository Platform: e.g., Zenodo, Figshare, or an institutional repository supporting DataCite DOI minting.

- Metadata Editor: Web form or JSON editor provided by the repository.

- Digital Specimen Record: A structured JSON or XML file containing the core descriptive data of the specimen.

- Authentication Credentials: Login for the chosen repository.

Methodology:

- Prepare Metadata: Compile all descriptive information for the digital specimen according to the required schema (e.g., DataCite 4.4). Essential elements include:

  - Identifier (optional): A local unique ID.

  - Creators: Names and affiliations of those creating the digital record.

  - Titles: A descriptive title for the digital specimen.

  - Publisher: The repository or institution publishing the digital record.

  - Publication Year: Year of publication.

  - Resource Type: e.g., resourceTypeGeneral `Dataset` with resourceType `DigitalSpecimen`.

  - Related Identifiers: Links to the physical specimen ID (e.g., collector's number) and associated publications.

- Upload Digital Object: Log into the repository. Upload the primary data file representing the digital specimen (e.g., `specimen_12345.json`).

- Populate Metadata Form: Using the repository interface, enter the prepared metadata into the designated fields.

- Publish/Mint DOI: Execute the "Publish" command. The repository will assign a unique DOI (e.g., `10.4126/FK2123456789`) and register it with DataCite, making it resolvable through the global DOI system.

- Resolve and Verify: Open a new browser window and navigate to `https://doi.org/10.4126/FK2123456789`. The browser should resolve (redirect) to the landing page of the digital specimen in the repository.

- Incorporate PID: Use the minted DOI as the primary `identifier` for the digital specimen in all subsequent data integrations and publications.
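Repositories such as Zenodo perform the minting step for you. The same metadata can also be sent directly to DataCite's REST API, which accepts a JSON:API document (content type `application/vnd.api+json`) posted to `/dois`, either at `https://api.datacite.org/dois` or at the test instance `https://api.test.datacite.org/dois`, using repository credentials. The sketch below only builds the request body. The prefix, titles, URLs, and the use of `IsDerivedFrom` to link the physical specimen's catalogue record are illustrative assumptions. Check the field layout against DataCite's current documentation before use.

```python
# Build a DataCite REST API request body for a digital specimen DOI.
# All values passed in the example are placeholders.
import json
from typing import Dict, List, Tuple


def datacite_payload(
    prefix: str,
    title: str,
    creators: List[str],
    publisher: str,
    year: int,
    landing_url: str,
    related: List[Tuple[str, str, str]],
    publish: bool = False,
) -> Dict:
    """Return a JSON:API body for POST /dois.

    `related` holds (identifier, relatedIdentifierType, relationType) tuples.
    """
    attributes = {
        "prefix": prefix,  # with only a prefix, DataCite generates the suffix
        "titles": [{"title": title}],
        "creators": [{"name": name} for name in creators],
        "publisher": publisher,
        "publicationYear": year,
        "types": {"resourceTypeGeneral": "Dataset", "resourceType": "DigitalSpecimen"},
        "relatedIdentifiers": [
            {"relatedIdentifier": ident, "relatedIdentifierType": id_type, "relationType": rel}
            for ident, id_type, rel in related
        ],
        "url": landing_url,  # landing page the DOI should resolve to
    }
    if publish:
        attributes["event"] = "publish"  # without an event the DOI stays a draft
    return {"data": {"type": "dois", "attributes": attributes}}


if __name__ == "__main__":
    body = datacite_payload(
        "10.4126", "Digital specimen CAT-42 (Quercus robur)", ["Doe, Jane"],
        "Example Museum", 2024, "https://example.org/ds/CAT-42",
        [("https://example.org/catalog/CAT-42", "URL", "IsDerivedFrom")],
    )
    print(json.dumps(body, indent=2))
```

Creating the DOI as a draft first (the default here) lets you check the landing page before making it findable.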

The Scientist's Toolkit: Essential PID & Metadata Solutions

Table 3: Research Reagent Solutions for Digital Specimen Findability

| Tool/Resource | Category | Function | Example/Provider |
|---|---|---|---|
| DataCite | PID Service | Provides DOI minting and registration services with a robust metadata schema. | datacite.org |
| EZID | PID Service | A service (from CDL) to create and manage unique identifiers (DOIs, ARKs). | ezid.cdlib.org |
| Metadata Editor | Software Tool | For creating and validating metadata files (JSON/XML). | DataCite Fabrica, GitHub Codespaces |
| JSON-LD | Data Format | A JSON-based serialization for Linked Data, enhancing metadata interoperability. | W3C Standard |
| FAIR Checklist | Assessment Tool | A list of criteria to evaluate the FAIRness of a digital object. | fairplus.github.io/the-fair-cookbook |
| PID Graph Resolver | Resolution Tool | A service that resolves a PID and returns its metadata and link relationships. | hdl.handle.net, doi.org |

Logical Workflow: From Physical Specimen to Findable Digital Object

Workflow for Creating a Findable Digital Specimen

Signaling Pathway: PID Resolution and Metadata Retrieval

PID Resolution and Metadata Retrieval Pathway

The FAIR Guiding Principles for scientific data management and stewardship—Findability, Accessibility, Interoperability, and Reusability—provide a critical framework for digital specimens in life sciences research. This document addresses Step 2: Accessibility, focusing on technical implementations for universal access. For digital specimens (digital representations of physical biological samples), accessibility is not merely about being open but about being reliably, securely, and programmatically accessible to both human and machine agents. Standardized Application Programming Interfaces (APIs) and protocols are the bedrock of this operational accessibility, enabling automated integration into computational workflows essential for modern drug discovery and translational research.

Core Technical Standards & Protocols

Universal accessibility requires consensus-based technical standards. The following protocols are foundational for digital specimen infrastructures.

Table 1: Core Technical Standards for API-Based Accessibility

| Standard/Protocol | Governing Body | Primary Function in Digital Specimen Context | Key Quantitative Metric (Typical Performance) |
|---|---|---|---|
| HTTP/1.1 & HTTP/2 | IETF | Underlying transport for web APIs. Enables request/response model for data retrieval and submission. | Latency: <100ms for API response (high-performance systems). |
| REST (Representational State Transfer) | N/A (architectural style) | Stateless client-server architecture using standard HTTP methods (GET, POST, PUT, DELETE) for resource manipulation. | Adoption: >85% of public scientific web APIs use RESTful patterns. |
| JSON API (v1.1) | JSON API Project | Specification for building APIs in JSON, defining conventions for requests, responses, and relationships. | Payload Efficiency: Reduces redundant nested data vs. ad-hoc JSON. |
| OAuth 2.0 / OIDC | IETF | Authorization framework and identity layer for secure, delegated access to APIs without sharing credentials. | Security: Reduces credential phishing risk; supports granular scopes. |
| DOI (Digital Object Identifier) | IDF | Persistent identifier for digital specimens, ensuring permanent citability and access. | Resolution: >99.9% DOI resolution success rate via Handle System. |
| OpenAPI Specification (v3.1.0) | OpenAPI Initiative | Machine-readable description of RESTful APIs, enabling automated client generation and documentation. | Development Efficiency: Can reduce API integration time by ~30-40%. |

API Design & Implementation Methodology

Experimental Protocol: Designing a FAIR-Compliant Digital Specimen API

Objective: To implement a RESTful API endpoint that provides standardized, secure, and interoperable access to digital specimen metadata and related data, adhering to FAIR principles.

Materials & Methods:

- Resource Modeling: Model the digital specimen as a core resource with a unique, persistent HTTP URI (e.g., `https://api.repo.org/digitalspecimens/{id}`). Define related resources (e.g., derivations, genomic analyses, publications).

- Endpoint Definition: Implement endpoints using HTTP methods:

  - `GET /digitalspecimens`: List specimens with pagination and filtering.

  - `GET /digitalspecimens/{id}`: Retrieve a single specimen's metadata in JSON-LD format.

  - `GET /digitalspecimens/{id}/derivatives`: Retrieve linked derivative datasets.

- Response Formatting: Structure all responses using JSON-LD (JSON for Linked Data). The JSON payload must include an `@context` key that maps its terms to shared ontology IRIs (e.g., OBO Foundry terms) to ensure semantic interoperability.

- Authentication/Authorization Integration: Protect `POST`, `PUT`, and `DELETE` methods with OAuth 2.0 Bearer Tokens. For `GET` methods, implement a tiered access model: public metadata, controlled-access data.

- API Description: Document the complete API using the OpenAPI Specification (OAS). Publish the OAS YAML file at the API's root endpoint (e.g., `https://api.repo.org/openapi.yaml`).

- Persistence: Assign and return a DOI for each new digital specimen record created via the API.

Validation: Use automated API testing tools (e.g., Postman, Schemathesis) to validate endpoint correctness, security headers, and response schema adherence to the published OAS.
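The endpoint design can be sketched as a framework-independent routing function. The sketch assumes a hypothetical in-memory store and hypothetical OAuth scope names (`specimens:write`, `specimens:read-controlled`). It also assumes token validation (signature, expiry, audience) has already happened upstream. It shows only how the tiered access model and the JSON-LD `@context` shape each response.

```python
# Framework-independent sketch of the digital specimen API's routing and
# tiered access. Store contents and scope names are hypothetical.
from typing import Dict, FrozenSet, Tuple

BASE_URI = "https://api.repo.org/digitalspecimens/"
WRITE_METHODS = {"POST", "PUT", "DELETE"}

# JSON-LD context mapping local keys to Darwin Core term IRIs.
CONTEXT = {
    "dwc": "http://rs.tdwg.org/dwc/terms/",
    "scientificName": "dwc:scientificName",
    "catalogNumber": "dwc:catalogNumber",
    "locality": "dwc:locality",
}

# Each record is split into a public tier and a controlled-access tier.
SPECIMENS: Dict[str, Dict[str, Dict[str, str]]] = {
    "ds-001": {
        "public": {"scientificName": "Quercus robur", "catalogNumber": "CAT-42"},
        "controlled": {"locality": "Site 7 (restricted)"},
    }
}


def get_specimen(specimen_id: str, scopes: FrozenSet[str]) -> Tuple[int, Dict]:
    """Return public metadata, plus controlled fields when the scope allows."""
    record = SPECIMENS.get(specimen_id)
    if record is None:
        return 404, {"error": "not found"}
    body = {"@context": CONTEXT, "@id": BASE_URI + specimen_id, **record["public"]}
    if "specimens:read-controlled" in scopes:
        body.update(record["controlled"])
    return 200, body


def route(method: str, path: str, scopes: FrozenSet[str] = frozenset()) -> Tuple[int, Dict]:
    """Dispatch a request; `scopes` stands in for scopes of a validated OAuth 2.0 token."""
    parts = path.strip("/").split("/")
    if method in WRITE_METHODS:
        if not scopes:
            return 401, {"error": "bearer token required"}
        if "specimens:write" not in scopes:
            return 403, {"error": "insufficient scope"}
        return 501, {"error": "write handlers are out of scope for this sketch"}
    if method == "GET" and len(parts) == 2 and parts[0] == "digitalspecimens":
        return get_specimen(parts[1], scopes)
    return 404, {"error": "no such route"}
```

Returning 401 for a missing token and 403 for a token without the needed scope keeps the two failure modes distinguishable for API clients.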

Visualization: Digital Specimen API Access Workflow

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Tools for Implementing & Accessing Standardized APIs

| Tool/Reagent Category | Specific Example(s) | Function in API Workflow |
|---|---|---|
| API Client Libraries | requests (Python), httr (R), axios (JavaScript) | Programmatic HTTP clients to send requests and handle responses from RESTful APIs. |
| Authentication Handler | oauthlib (Python), auth0 SDKs | Manages the OAuth 2.0 token acquisition and refresh cycle, simplifying secure access. |
| Schema Validator | jsonschema, pydantic (Python), ajv (JavaScript) | Validates incoming/outgoing JSON data against a predefined schema or OpenAPI spec. |
| API Testing Suite | Postman, Newman, Schemathesis | Designs, automates, and validates API calls for functionality, reliability, and performance. |
| Semantic Annotation Tool | PyLD (Python), jsonld (R) | Compacts/expands JSON-LD, ensuring data is linked to ontologies for interoperability. |
| DOI Minting Service Client | DataCite REST API Client, Crossref API Client | Mints and manages persistent identifiers (DOIs) for new digital specimen records via API. |
| Workflow Integration Platform | Nextflow, Snakemake, Galaxy | Orchestrates pipelines where API calls to fetch digital specimens are a defined step. |

Data Retrieval & Interoperability Protocol

Experimental Protocol: Machine-Actionable Data Retrieval Using Content Negotiation

Objective: To enable both human users and computational agents to retrieve the most useful representation of a digital specimen from the same URI.

Materials & Methods:

- Server-Side Configuration: Configure the API server to support HTTP content negotiation (`Accept` header) for the `GET /digitalspecimens/{id}` endpoint.

- Response Format Support:

  - `Accept: application/json` -> Return the standard JSON representation.

  - `Accept: application/ld+json` -> Return the JSON-LD representation with full `@context`.

  - `Accept: text/html` -> Return a human-readable HTML data portal page (for browser requests).

  - `Accept: application/rdf+xml` -> Return RDF/XML for linked data consumers.

- Implementation: Use server-side logic to inspect the `Accept` header of the incoming request and route to the appropriate serializer or template.

- Linked Data Headers: In plain `application/json` responses, include a `Link` header pointing to the JSON-LD context: `<http://schema.org/>; rel="http://www.w3.org/ns/json-ld#context"; type="application/ld+json"`. JSON-LD processors ignore this header on `application/ld+json` responses, which must carry their `@context` in the body.

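Validation: Use curl commands that send different `Accept` headers (e.g., `curl -i -H "Accept: application/ld+json" https://api.repo.org/digitalspecimens/{id}`) and verify that the correct `Content-Type` is returned in each response header.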
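The server-side negotiation step can be sketched without any web framework: parse the `Accept` header, honour q-values, and pick one of the four supported media types. This is a simplified sketch. It assumes JSON-LD as the default when no `Accept` header is sent, and it does not implement every precedence rule of HTTP content negotiation. A production server would normally rely on its framework's built-in support. A `None` result corresponds to an HTTP 406 Not Acceptable response.

```python
# Simplified HTTP content negotiation for GET /digitalspecimens/{id}.
from typing import List, Optional, Tuple

# Media types listed in the protocol; the first entry doubles as the default.
SUPPORTED = ["application/ld+json", "application/json", "text/html", "application/rdf+xml"]


def parse_accept(header: str) -> List[Tuple[str, float]]:
    """Split an Accept header into (media range, q-value) pairs."""
    entries = []
    for part in header.split(","):
        fields = [f.strip() for f in part.split(";")]
        media = fields[0].lower()
        if not media:
            continue
        q = 1.0
        for param in fields[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0  # treat a malformed q-value as "not acceptable"
        entries.append((media, q))
    return entries


def matches(media_range: str, candidate: str) -> bool:
    """True if the candidate type falls inside the range (exact, type/*, or */*)."""
    if media_range in ("*/*", candidate):
        return True
    return media_range.endswith("/*") and candidate.startswith(media_range[:-1])


def negotiate(accept: Optional[str]) -> Optional[str]:
    """Pick the supported type with the highest q-value; None means 406."""
    if not accept:
        return SUPPORTED[0]
    best, best_q = None, 0.0
    for media_range, q in parse_accept(accept):
        for candidate in SUPPORTED:
            if matches(media_range, candidate) and q > best_q:
                best, best_q = candidate, q
    return best
```

For example, a typical browser header such as `text/html,application/xhtml+xml,*/*;q=0.8` selects `text/html`, while a linked data client asking for `application/ld+json` gets JSON-LD from the same URI.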

Visualization: Interoperability Through Standardized APIs

Within the FAIR (Findable, Accessible, Interoperable, Reusable) data principles framework for digital specimens research, semantic enrichment and ontologies represent the critical bridge to achieving true interoperability. While Steps 1 and 2 establish digital persistence and core metadata, Step 3 transforms data into machine-actionable knowledge. For researchers, scientists, and drug development professionals, this shift enables complex queries across disparate biobanks, genomic databases, and clinical repositories, accelerating translational research. This whitepaper details the technical methodologies and infrastructure required to semantically enrich digital specimen records, ensuring they are not merely stored but become integral components of a global knowledge network.

Core Concepts and Quantitative Landscape

Semantic enrichment involves annotating digital specimen data with standardized terms from curated ontologies and controlled vocabularies. These annotations create explicit, computable links between specimen attributes and broader biological, clinical, and environmental concepts.

Key Ontologies for Digital Specimens

The following table summarizes the essential ontologies and their application scope.

| Ontology/Vocabulary | Scope & Purpose | Provider | Usage Frequency in Specimen Research (Approx.) |
|---|---|---|---|
| Environment Ontology (ENVO) | Describes biomes, environmental materials, and geographic features. | OBO Foundry | ~65% of ecological/environmental studies |
| Uberon | Cross-species anatomy for animals, encompassing tissues, organs, and cells. | OBO Foundry | ~85% of anatomical annotations |
| Cell Ontology (CL) | Cell types for prokaryotes, eukaryotes, and particularly human and model organisms. | OBO Foundry | ~75% of cellular phenotype studies |
| Disease Ontology (DOID) | Human diseases for consistent annotation of disease-associated specimens. | OBO Foundry | ~80% of clinical specimen research |
| NCBI Taxonomy | Taxonomic classification of all organisms. | NCBI | ~99% of specimens with species data |
| Ontology for Biomedical Investigations (OBI) | Describes the protocols, instruments, and data processing used in research. | OBO Foundry | ~60% of methodological annotations |
| Chemical Entities of Biological Interest (ChEBI) | Small molecular entities, including drugs, metabolites, and biochemicals. | EMBL-EBI | ~70% of pharmacological/toxicological studies |
| Phenotype And Trait Ontology (PATO) | Qualities, attributes, or phenotypes (e.g., size, color, shape). | OBO Foundry | ~55% of phenotypic trait descriptions |

Metrics for Enrichment Success

Implementing semantic enrichment yields measurable improvements in data utility, as shown in the table below.

| Metric | Pre-Enrichment Baseline | Post-Enrichment & Ontology Alignment | Measurement Method |
|---|---|---|---|
| Cross-Repository Query Success | 15-20% (keyword-based, low recall) | 85-95% (concept-based, high recall/precision) | Recall/Precision calculation on a standard test set of specimen queries. |
| Data Integration Time (for a new dataset) | Weeks to months (manual mapping) | Days (semi-automated with ontology services) | Average time recorded in pilot projects (e.g., DiSSCo, ICEDIG). |
| Machine-Actionable Data Points per Specimen Record | ~5-10 (core Darwin Core) | ~30-50+ (with full ontological annotation) | Automated count of unique, resolvable ontology IRIs per record. |

Experimental Protocols for Semantic Enrichment

The following methodologies provide a replicable framework for enriching digital specimen data.

Protocol: Automated Annotation Using Terminology Services

Objective: To programmatically tag free-text specimen descriptions (e.g., "collecting event," "phenotypic observations") with ontology terms.

Materials: See "The Scientist's Toolkit" below.

Procedure:

- Text Pre-processing: Isolate text fields from the specimen metadata (e.g., `dwc:occurrenceRemarks`, `dwc:habitat`). Apply NLP preprocessing: tokenization, lemmatization, stop-word removal.

- Terminology Service Query: For each pre-processed text chunk, submit a query to an ontology resolution service API (e.g., the OLS API, BioPortal API).

- Candidate Term Retrieval: The service returns a list of candidate ontology terms with matching labels, synonyms, and associated relevance scores.

- Term Disambiguation & Selection: Implement a scoring algorithm that weights the factors below, then select the top-scoring term with a score above a defined threshold (e.g., >0.7):

  - String similarity (e.g., Levenshtein distance).

  - Semantic similarity based on the ontology graph structure.

  - Contextual relevance using surrounding annotated fields.

- IRI Attachment: Append the selected term's Internationalized Resource Identifier (IRI) to the specimen record in a dedicated field (e.g., `dwc:dynamicProperties` as JSON-LD, or a triple store).

- Validation: A subset of annotations (e.g., 10%) must be manually verified by a domain expert to calculate precision and adjust thresholds.
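The disambiguation step can be sketched as a weighted score. Only the string component is computed here, as normalized Levenshtein similarity against the label and synonyms. The semantic and contextual components are assumed to be precomputed values in [0, 1]. The weights and the 0.7 threshold are illustrative assumptions, to be tuned against the expert-validated subset.

```python
# Weighted term disambiguation. Only string similarity is computed here;
# "semantic" and "context" scores are assumed to be precomputed per candidate.
from typing import Dict, List, Optional

# Hypothetical weights; tune them against the expert-validated subset.
WEIGHTS = {"string": 0.5, "semantic": 0.3, "context": 0.2}


def levenshtein(a: str, b: str) -> int:
    """Edit distance via the classic dynamic-programming recurrence, row by row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # deletion
                curr[j - 1] + 1,           # insertion
                prev[j - 1] + (ca != cb),  # substitution (free if characters match)
            ))
        prev = curr
    return prev[-1]


def string_similarity(a: str, b: str) -> float:
    """Normalize edit distance to [0, 1], where 1 means identical (case-insensitive)."""
    a, b = a.lower(), b.lower()
    longest = max(len(a), len(b)) or 1
    return 1.0 - levenshtein(a, b) / longest


def select_term(query: str, candidates: List[Dict], threshold: float = 0.7) -> Optional[Dict]:
    """Return the best candidate whose weighted score exceeds `threshold`, else None."""
    best: Optional[Dict] = None
    best_score = threshold
    for cand in candidates:
        labels = [cand["label"], *cand.get("synonyms", [])]
        label_sim = max(string_similarity(query, label) for label in labels)
        score = (WEIGHTS["string"] * label_sim
                 + WEIGHTS["semantic"] * cand["semantic"]
                 + WEIGHTS["context"] * cand["context"])
        if score > best_score:
            best, best_score = {"iri": cand["iri"], "score": round(score, 3)}, score
    return best
```

Returning `None` when nothing clears the threshold keeps weak matches out of the record, so they can be routed to manual curation instead.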

Protocol: Ontology-Aligned Data Transformation

Objective: To transform structured but non-standard specimen data (e.g., in-house database codes for "preservation method") into ontology-linked values.

Procedure:

- Mapping Table Creation: For each controlled field requiring alignment, create a mapping table linking local values to target ontology term IRIs.

  - Example: `local_code: "FZN"` -> `OBI:0000867` ("cryofixation")

- Batch Processing Script: Develop and execute a script that reads the source data, performs lookups in the mapping table, and outputs a new dataset where local codes are replaced or supplemented with ontology IRIs.

- Provenance Recording: The script must record the mapping version and execution timestamp as part of the data provenance (`pav:version`, `pav:createdOn`).
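The batch transformation and provenance steps can be combined in one small script. This sketch uses only the standard library and assumes CSV input, a hypothetical mapping-table version label, and the `FZN` example from the mapping table above. It supplements rather than replaces the local code, and it reports unmapped values for curator review.

```python
# Ontology-aligned transformation of a local code field, with PAV-style provenance.
import csv
import io
from datetime import datetime, timezone
from typing import Dict, Iterable, List, Tuple

MAPPING_VERSION = "1.0.0"  # hypothetical version label of the mapping table
PRESERVATION_MAP: Dict[str, str] = {
    "FZN": "http://purl.obolibrary.org/obo/OBI_0000867",  # example mapping from the text
}


def align(rows: Iterable[Dict[str, str]], field: str, mapping: Dict[str, str],
          mapping_version: str) -> Tuple[List[Dict[str, str]], Dict]:
    """Add a `<field>IRI` column to each row and return provenance for the run."""
    aligned: List[Dict[str, str]] = []
    unmapped = set()
    for row in rows:
        new_row = dict(row)  # keep the original local code alongside the IRI
        local = row.get(field, "")
        iri = mapping.get(local)
        new_row[f"{field}IRI"] = iri or ""
        if iri is None:
            unmapped.add(local)
        aligned.append(new_row)
    provenance = {
        "pav:version": mapping_version,
        "pav:createdOn": datetime.now(timezone.utc).isoformat(),
        "unmappedValues": sorted(unmapped),  # flag values needing curator review
    }
    return aligned, provenance


if __name__ == "__main__":
    source = io.StringIO("catalogNumber,preservation\nCAT-1,FZN\nCAT-2,ETOH\n")
    rows, prov = align(csv.DictReader(source), "preservation", PRESERVATION_MAP, MAPPING_VERSION)
    print(rows, prov)
```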

Protocol: Establishing Linked Data Relationships

Objective: To express relationships between specimen data points using semantic web standards (RDF, OWL).

Procedure:

- Define a Lightweight Application Ontology: Create a simple OWL ontology defining key relationships (object properties) for your domain.

  - Example: `:derivedFrom` linking a `:DNAExtract` to a `:TissueSpecimen`.

  - Example: `:collectedFrom` linking a `:Specimen` to a `:Location` (via ENVO).

- RDF Generation: Convert the enriched specimen metadata (including new ontology IRIs) into RDF triples (subject-predicate-object).

  - Use standards like Dublin Core, Darwin Core as RDF (DwC-RDF), and PROV-O for provenance.

- Publishing: Load RDF triples into a triplestore (e.g., GraphDB, Blazegraph) that supports SPARQL querying. Assign persistent HTTP URIs to each specimen and its relationships.
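Once relationships like `:derivedFrom` and `:collectedFrom` are explicit, multi-hop questions become simple graph traversals. In a triplestore, this would typically be written as a SPARQL 1.1 property path such as `?extract :derivedFrom*/:collectedFrom/:habitat ?habitat`. The sketch below performs the same walk over an in-memory list of triples. The node names and the `:habitat` predicate are hypothetical.

```python
# Walk :derivedFrom links to answer "which habitat did this extract come from?".
from typing import List, Optional, Tuple

Triple = Tuple[str, str, str]

# Hypothetical graph using the application-ontology properties described above.
TRIPLES: List[Triple] = [
    (":dna-7", "rdf:type", ":DNAExtract"),
    (":dna-7", ":derivedFrom", ":tissue-3"),
    (":tissue-3", "rdf:type", ":TissueSpecimen"),
    (":tissue-3", ":collectedFrom", ":site-1"),
    (":site-1", ":habitat", "ENVO:00000023"),  # stream
]


def objects(subject: str, predicate: str, triples: List[Triple]) -> List[str]:
    """Return every object o such that (subject, predicate, o) is in the graph."""
    return [o for s, p, o in triples if s == subject and p == predicate]


def habitat_of(node: str, triples: List[Triple], max_hops: int = 5) -> Optional[str]:
    """Follow :derivedFrom until a :collectedFrom site is found, then read its habitat."""
    for _ in range(max_hops):  # bound the walk in case the data contains cycles
        sites = objects(node, ":collectedFrom", triples)
        if sites:
            habitats = objects(sites[0], ":habitat", triples)
            return habitats[0] if habitats else None
        parents = objects(node, ":derivedFrom", triples)
        if not parents:
            return None
        node = parents[0]
    return None
```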

Visualizing the Semantic Enrichment Ecosystem

Semantic Enrichment Technical Workflow

Digital Specimen as a Knowledge Graph Node

The Scientist's Toolkit

| Research Reagent Solution | Function in Semantic Enrichment |
|---|---|
| Ontology Lookup Service (OLS) | A central API for querying, browsing, and visualizing ontologies from the OBO Foundry. Essential for term discovery and IRI resolution. |
| BioPortal | A comprehensive repository for biomedical ontologies (including many OBO ontologies), offering REST APIs for annotation and mapping. |
| Apache Jena | A Java framework for building Semantic Web and Linked Data applications. Used for creating, parsing, and querying RDF data and SPARQL endpoints. |
| ROBOT (Robot OBO Tool) | A command-line tool for automating ontology development, maintenance, and quality control tasks, such as merging and reasoning. |
| Protégé | A free, open-source ontology editor and framework for building intelligent systems. Used for creating and managing application ontologies. |
| GraphDB / Blazegraph | High-performance triplestores designed for storing and retrieving RDF data. Provide SPARQL endpoints for complex semantic queries. |
| OxO (Ontology Xref Service) | A service for finding mappings (cross-references) between terms from different ontologies. Critical for integrating multi-ontology annotations. |
| SPARQL | The RDF query language, used to retrieve and manipulate data stored in triplestores. Enables federated queries across multiple FAIR data sources. |

Within the FAIR (Findable, Accessible, Interoperable, Reusable) data principles framework for digital specimens research, provenance tracking and rich documentation are the critical enablers of the "R" – Reusability. For researchers, scientists, and drug development professionals, data alone is insufficient. A dataset’s true value is unlocked only when its origin, processing history, and contextual meaning are comprehensively and transparently documented. This step ensures that digital specimens and derived data can be independently validated, integrated, and repurposed for novel analyses, such as cross-species biomarker discovery or drug target validation, long after the original study concludes.

The Provenance Framework: W7 Model and Beyond

Provenance answers critical questions about data origin and transformation. The W7 model (Who, What, When, Where, How, Why, Which) provides a structured framework for capturing provenance in scientific workflows.

| W7 Dimension | Core Question | Example for a Digital Specimen Image | Technical Implementation (e.g., RO-Crate) |
|---|---|---|---|
| Who | Agents responsible | Researcher, lab, instrument, processing software | author, contributor, publisher properties |
| What | Entities involved | Raw TIFF image, segmented mask, metadata file | hasPart to link dataset files |
| When | Timing of events | 2023-11-15T14:30:00Z (acquisition time) | datePublished, temporalCoverage |
| Where | Location of entities | Microscope ID, storage server path, geographic collection site | spatialCoverage, contentLocation |
| How | Methods used | Confocal microscopy, CellProfiler v4.2.1 pipeline | Link to ComputationalWorkflow (e.g., CWL, Nextflow) |
| Why | Motivation/purpose | Study of protein X localization under drug treatment Y | citation, funding, description fields |
| Which | Identifiers/versions | DOI:10.xxxx/yyyy, Software commit hash: a1b2c3d | identifier, version, sameAs properties |

A key technical standard for bundling this information is RO-Crate (Research Object Crate). It is a lightweight, linked data framework for packaging research data with their metadata and provenance.
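As a minimal sketch, the W7 mapping above can be written out as an RO-Crate 1.1 `ro-crate-metadata.json` document using only Python's standard library. The file names, ORCID, site name, and DOI are hypothetical placeholders, and the "How" dimension (a link to the workflow definition) is omitted for brevity; libraries such as ro-crate-py can generate equivalent metadata.

```python
import json


def build_ro_crate_metadata(files, creator_orcid, creator_name, description,
                            date_published, identifier, site_name):
    """Return an RO-Crate 1.1 metadata document (as a dict) for a dataset."""
    # Metadata descriptor: declares that this file describes the root dataset "./".
    descriptor = {
        "@id": "ro-crate-metadata.json",
        "@type": "CreativeWork",
        "conformsTo": {"@id": "https://w3id.org/ro/crate/1.1"},
        "about": {"@id": "./"},
    }
    # Root data entity: one property per W7 dimension where a mapping exists.
    root = {
        "@id": "./",
        "@type": "Dataset",
        "name": "Digital specimen image analysis",
        "description": description,                      # Why
        "datePublished": date_published,                  # When
        "author": {"@id": creator_orcid},                 # Who
        "identifier": identifier,                         # Which
        "contentLocation": {"@id": "#collection-site"},   # Where
        "hasPart": [{"@id": f} for f in files],           # What
    }
    person = {"@id": creator_orcid, "@type": "Person", "name": creator_name}
    place = {"@id": "#collection-site", "@type": "Place", "name": site_name}
    file_entities = [{"@id": f, "@type": "File"} for f in files]
    return {
        "@context": "https://w3id.org/ro/crate/1.1/context",
        "@graph": [descriptor, root, person, place, *file_entities],
    }


if __name__ == "__main__":
    crate = build_ro_crate_metadata(
        files=["raw/specimen_001.tif", "derived/specimen_001_mask.png"],
        creator_orcid="https://orcid.org/0000-0000-0000-0000",  # hypothetical
        creator_name="A. Researcher",                            # hypothetical
        description="Study of protein X localization under drug treatment Y",
        date_published="2023-11-15T14:30:00Z",
        identifier="https://doi.org/10.xxxx/yyyy",               # placeholder
        site_name="Example collection site",                     # hypothetical
    )
    # Saving this dict as ro-crate-metadata.json at the dataset root makes it a crate.
    print(json.dumps(crate, indent=2))
```

Keeping every entity in a flat `@graph` with `@id` cross-references is what lets generic JSON-LD tools read the crate without RO-Crate-specific code.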

Diagram Title: Provenance Relationships in a Digital Specimen Analysis Workflow

Methodologies for Implementing Provenance Capture

Protocol: Automated Provenance Capture in Computational Workflows

Objective: To automatically record detailed provenance (inputs, outputs, parameters, software versions, execution history) for all data derived from a computational analysis pipeline.

Materials:

- Workflow Management System (e.g., Nextflow, Snakemake, Galaxy)

- Version Control System (e.g., Git)

- Containerization Platform (e.g., Docker, Singularity)

- Provenance Export Tools (e.g., `nextflow log`, RO-Crate generators)

Procedure:

- Workflow Scripting: Define the analysis pipeline (e.g., image segmentation, feature extraction) using a workflow management system. Ensure each process explicitly declares its inputs and outputs.

- Environment Specification: Package all software dependencies into a Docker/Singularity container. Record the container hash.

- Execution with Tracking: Run the workflow, specifying a unique run ID. The system automatically logs:
  - The exact software versions and container image used.
  - The execution timeline for each process.
  - The paths to all input, intermediate, and final output files.
  - The command-line parameters for each process.

- Provenance Export: Use the workflow system's built-in reporting (e.g., `nextflow log <run_name> -f name,hash,status,duration`, or the `-with-trace` and `-with-report` run options) to export a structured provenance log. Provenance plugins can emit richer formats, such as W3C PROV or Workflow Run RO-Crate, depending on the tooling.

- RO-Crate Packaging: Use a tool like `ro-crate-py` to create an RO-Crate. Incorporate the provenance log, the workflow definition, the container specification, input data manifests, and final outputs into a single, structured package.
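The capture steps above can be approximated without a workflow engine, as in the sketch below: hash every input and output file, then record the step, its parameters, and its container digest in the W3C PROV-JSON layout (`entity`, `activity`, `used`, `wasGeneratedBy`). This is a hypothetical helper for illustration, not the output format of Nextflow, Snakemake, or Galaxy; the `ex:` namespace and `ex:` attribute names are placeholders. The `prov` Python package models the same PROV structures if a library is preferred.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path):
    """Stream a file through SHA-256 so large images are never fully in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def _file_entities(doc, paths):
    """Register each file as a PROV entity keyed by file name, with its checksum."""
    ids = []
    for path in paths:
        entity_id = f"ex:{Path(path).name}"
        doc["entity"][entity_id] = {"ex:sha256": sha256_of(path)}
        ids.append(entity_id)
    return ids


def prov_record(step, inputs, outputs, params, container, started, ended):
    """Build a PROV-JSON document describing one workflow step (activity)."""
    doc = {"prefix": {"ex": "https://example.org/run/"},  # hypothetical namespace
           "entity": {}, "activity": {}, "used": {}, "wasGeneratedBy": {}}
    activity_id = f"ex:{step}"
    doc["activity"][activity_id] = {
        "prov:startTime": started,
        "prov:endTime": ended,
        "ex:parameters": json.dumps(params, sort_keys=True),
        "ex:container": container,  # e.g. the container image digest
    }
    for i, entity_id in enumerate(_file_entities(doc, inputs)):
        doc["used"][f"_:u{i}"] = {"prov:activity": activity_id, "prov:entity": entity_id}
    for i, entity_id in enumerate(_file_entities(doc, outputs)):
        doc["wasGeneratedBy"][f"_:g{i}"] = {"prov:entity": entity_id, "prov:activity": activity_id}
    return doc


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        raw = Path(tmp, "specimen_001.tif")
        raw.write_bytes(b"raw image bytes")   # stand-in for a real image
        start = datetime.now(timezone.utc).isoformat()
        mask = Path(tmp, "specimen_001_mask.png")
        mask.write_bytes(b"mask bytes")       # stand-in for a segmentation step
        end = datetime.now(timezone.utc).isoformat()
        record = prov_record("segmentation", [raw], [mask], {"threshold": 0.5},
                             "sha256:<container-digest>", start, end)
        print(json.dumps(record, indent=2))
```

Recording checksums lets a reuser confirm that a file cited in the provenance is byte-identical to the one they downloaded.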

Protocol: Manual Curation of Rich Specimen Documentation

Objective: To create human- and machine-readable documentation for physical/digital specimens where full automation is not feasible.

Materials:

- Structured Metadata Schema (e.g., ABCD, Darwin Core for biodiversity; MIABIS for biospecimens)

- JSON-LD or XML Editor

- Persistent Identifier (PID) Minting Service (e.g., DataCite, ePIC)

Procedure:

- Schema Selection: Choose a metadata standard appropriate for the specimen domain.

- Metadata Population: Create a metadata record covering:
  - Descriptive: Taxonomy, phenotype, disease state.
  - Contextual: Collection event (geo-location, date, collector), associated project/grant.
  - Technical: Digitization method (scanner/microscope model, settings), file format, checksum.
  - Governance: Access rights, embargo period, material transfer agreement (MTA) identifier.

- Identifier Assignment: Mint a persistent identifier (e.g., DOI, ARK) for the specimen record.

- Linking: Embed links to related publications, datasets, and vocabulary terms (from ontologies like OBI, UBERON, ChEBI) using their URIs to ensure interoperability.
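A minimal sketch of a curated record and a pre-minting completeness check follows, assuming Darwin Core terms for the descriptive and contextual fields and a hypothetical `ex:` vocabulary for the technical and governance fields. All values, including the catalogue number and coordinates, are illustrative; a real implementation would validate against the chosen schema (ABCD, Darwin Core, or MIABIS) rather than this hand-written field list.

```python
import json

# Hypothetical minimum field set per documentation category.
REQUIRED = {
    "descriptive": ["dwc:scientificName"],
    "contextual": ["dwc:eventDate", "dwc:decimalLatitude",
                   "dwc:decimalLongitude", "dwc:recordedBy"],
    "technical": ["ex:digitizationDevice", "ex:fileFormat", "ex:sha256"],
    "governance": ["dcterms:license", "ex:mtaIdentifier"],
}


def missing_fields(record):
    """Return {category: [missing keys]} for every category that has gaps."""
    gaps = {}
    for category, keys in REQUIRED.items():
        absent = [key for key in keys if record.get(key) in (None, "")]
        if absent:
            gaps[category] = absent
    return gaps


# Illustrative record; every value below is hypothetical.
record = {
    "@context": {
        "dwc": "http://rs.tdwg.org/dwc/terms/",
        "dcterms": "http://purl.org/dc/terms/",
        "ex": "https://example.org/terms/",  # hypothetical local vocabulary
    },
    "dwc:catalogNumber": "EX-000123",
    "dwc:scientificName": "Artemisia annua L.",
    "dwc:eventDate": "2023-06-01",
    "dwc:decimalLatitude": 47.5,
    "dwc:decimalLongitude": 19.0,
    "dwc:recordedBy": "A. Collector",
    "ex:digitizationDevice": "Flatbed scanner, 600 dpi",
    "ex:fileFormat": "image/tiff",
    "ex:sha256": "",  # not computed yet
    "dcterms:license": "https://creativecommons.org/licenses/by/4.0/",
}

if __name__ == "__main__":
    print(json.dumps(missing_fields(record), indent=2))
```

Running the check before identifier assignment avoids minting a PID for a record that still lacks its checksum or MTA reference.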

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Provenance & Documentation |
|---|---|
| RO-Crate (ro-crate-py) | A Python library to create, read, and write Research Object Crates, packaging data, code, and provenance. |
| ProvPy (`prov` Python package) | A Python library for creating, serializing, and querying provenance data according to the W3C PROV data model. |
| Git & GitHub/GitLab | Version control for tracking changes to analysis scripts, documentation, and metadata schemas, providing "how" and "who" provenance. |
| Docker/Singularity | Containerization platforms to encapsulate the complete software environment, supporting computational reproducibility ("how"). |
| Electronic Lab Notebook (ELN) | Systems like RSpace or LabArchives to formally record experimental protocols ("how") and associate them with raw data. |
| CWL/Airflow/Nextflow | Workflow languages and systems that can capture execution traces (e.g., `cwltool --provenance`, Nextflow trace reports), detailing the sequence of transformations applied to data. |
| DataCite | A service for minting Digital Object Identifiers (DOIs), providing persistent identifiers for datasets and linking them to creators. |
| Ontology lookup services (e.g., OLS, BioPortal) | Services to find and cite standardized ontology terms, enriching metadata for interoperability. |

Data Quality and Metrics for Reusability

Effective provenance directly impacts measurable data quality dimensions critical for reuse.

| Quality Dimension | Provenance/Documentation Contribution | Quantifiable Metric Example |
|---|---|---|
| Completeness | Mandatory fields (W7) are populated. | Percentage of required metadata fields filled (Target: 100%). |
| Accuracy | Links to protocols and software versions. | Version match between cited software and container image. |
| Timeliness | Timestamps on all events. | Lag time between data generation and metadata publication. |
| Findability | Rich descriptive metadata and PIDs. | Search engine ranking for dataset keywords. |
| Interoperability | Use of standard schemas and ontologies. | Number of links to external ontology terms per record. |
| Clarity of License | Machine-readable rights statements. | Presence of a standard license URI (e.g., CC BY 4.0). |
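Several of these metrics can be computed per record with a few lines of code. The sketch below assumes flat dictionary records, treats a value as an ontology link only if it is an OBO Foundry PURL (`http://purl.obolibrary.org/obo/`), and counts a license as machine-readable only if it is a Creative Commons URI; all three are simplifying assumptions, and the field names are hypothetical.

```python
from datetime import date
from urllib.parse import urlparse

OBO_PREFIX = "http://purl.obolibrary.org/obo/"


def completeness(record, required):
    """Completeness: percentage of required fields present and non-empty."""
    filled = sum(1 for key in required if record.get(key) not in (None, "", []))
    return 100.0 * filled / len(required)


def lag_days(generated, published):
    """Timeliness: days between data generation and metadata publication."""
    return (date.fromisoformat(published) - date.fromisoformat(generated)).days


def ontology_link_count(record):
    """Interoperability: number of values that are OBO ontology term URIs."""
    values = []
    for value in record.values():
        values.extend(value if isinstance(value, list) else [value])
    return sum(1 for v in values if isinstance(v, str) and v.startswith(OBO_PREFIX))


def has_license_uri(record):
    """License clarity: True if 'license' is an http(s) Creative Commons URI."""
    parsed = urlparse(str(record.get("license", "")))
    return parsed.scheme in ("http", "https") and parsed.netloc.endswith("creativecommons.org")


if __name__ == "__main__":
    example = {  # illustrative record
        "title": "Liver section, treated cohort",
        "description": "",
        "license": "https://creativecommons.org/licenses/by/4.0/",
        "organism": OBO_PREFIX + "NCBITaxon_9606",   # Homo sapiens
        "anatomy": [OBO_PREFIX + "UBERON_0002107"],  # liver
    }
    required = ["title", "description", "license", "organism"]
    print(completeness(example, required))  # 3 of 4 required fields filled
    print(lag_days("2023-11-15", "2023-12-01"))
    print(ontology_link_count(example))
    print(has_license_uri(example))
```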

Diagram Title: The Provenance-Enabled Cycle of Data Reusability

Step 4, Provenance Tracking and Rich Documentation, transforms static data into a dynamic, trustworthy, and reusable research asset. By systematically implementing the W7 framework through automated capture and meticulous curation, and by packaging this information using standards like RO-Crate, researchers directly address Reusability, often the most challenging FAIR principle to satisfy. As a result, digital specimens from biodiversity collections or clinical biobanks can be reliably integrated into downstream drug discovery pipelines, systems biology models, and meta-analyses, accelerating scientific innovation.

The realization of FAIR (Findable, Accessible, Interoperable, and Reusable) data principles is a cornerstone of modern digital research infrastructure. For the domain of natural science collections, FAIR Digital Objects (FDOs), and specifically FAIR Digital Specimens (DSs), serve as the critical mechanism to transform physical specimens into rich, actionable, and interconnected digital assets. This guide provides an in-depth technical overview of the core platforms and software enabling this transformation, framed within the broader thesis that FAIR-compliant digital specimens are essential for accelerating research in biodiversity, systematics, and drug discovery from natural products.

The FAIR Digital Specimens Conceptual Framework

A FAIR Digital Specimen is a persistent, granular digital representation of a physical specimen. It is more than a simple record; it is a digitally manipulable object with a unique Persistent Identifier (PID) that bundles data, metadata, and links to other resources (e.g., genomic data, publications, environmental records). The core technical stack supporting DSs involves platforms for persistence and identification, software for creation and enrichment, and middleware for discovery and linking.

Core Platform Architectures

Two primary, interoperable architectures dominate the landscape:

Diagram Title: Core Architecture of a FAIR Digital Specimen

Quantitative Comparison of Core Platforms & Services

Table 1: Core PID and Resolution Platforms

| Platform/Service | Primary Function | Key Features | Quantitative Metrics (Typical) | FAIR Alignment Focus |
|---|---|---|---|---|
| Handle System | Persistent Identifier Registry | Decentralized; typed handle values, with administrative permissions held in HS_ADMIN values; REST API. | > 200 million handles registered; Resolution > 10k/sec. | Findable, Accessible via global HTTP proxy network. |
| DataCite | DOI Registration Agency | Focus on research data, rich metadata schema (kernel 4.0), EventData tracking. | > 18 million DOIs; ~5 million related identifiers. | Findable, Interoperable via standard schema and open APIs. |
| ePIC | PID Infrastructure for EU | Implements Handle System for research, includes credential-based access. | Used by ~300 research orgs in EU. | Accessible, Reusable via integrated access policies. |
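Resolution itself is a plain HTTP operation. The sketch below assumes the Handle.Net proxy's JSON interface at `https://hdl.handle.net/api/handles/<handle>` and its response shape (`responseCode`, plus `values` entries carrying `type` and `data.value`); DOIs are themselves handles, so the same parsing applies to DOI records. The handle and sample response are hypothetical, and the network fetch is kept separate so the parsing can be checked offline.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen

PROXY = "https://hdl.handle.net/api/handles/"


def fetch_handle_record(handle, timeout=10):
    """Fetch a handle record as JSON from the proxy (needs network access).

    An HTTP error status (e.g. for an unknown handle) raises urllib.error.HTTPError.
    """
    with urlopen(PROXY + quote(handle, safe="/"), timeout=timeout) as resp:
        return json.load(resp)


def target_url(record):
    """Return the first URL-typed value in a handle record, or None."""
    if record.get("responseCode") != 1:  # 1 signals a successful lookup
        return None
    for value in record.get("values", []):
        if value.get("type") == "URL":
            return value.get("data", {}).get("value")
    return None


if __name__ == "__main__":
    # Illustrative response for a hypothetical handle; no network call is made.
    sample = {
        "responseCode": 1,
        "handle": "12345/EXAMPLE",
        "values": [
            {"index": 1, "type": "URL",
             "data": {"format": "string", "value": "https://example.org/ds/EXAMPLE"}},
        ],
    }
    print(target_url(sample))
```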

Table 2: Digital Specimen Platforms & Middleware

| Platform | Type | Core Technology Stack | Key Capabilities | Target User Base |
|---|---|---|---|---|
| DiSSCo | Distributed Research Infrastructure | Cloud-native, PID-centric, API-driven. | Mass digitization pipelines, DS creation & curation, Linked Data. | Natural History Collections, Pan-European. |
| Specimen Data Refinery (SDR) | Processing Workflow Platform | Kubernetes, Apache Airflow, Machine Learning. | Automated data extraction from labels/images, annotation, enrichment. | Collections holding institutions, Data scientists. |
| BiCIKL Project Services | Federation Middleware | Graph database (Wikibase), Link Discovery APIs. | Triple-store based linking of specimens to literature, sequences, taxa. | Biodiversity researchers, Librarians. |
| GBIF | Global Data Aggregator & Portal | Big data indexing (Elasticsearch), Cloud-based. | Harvests, validates, and indexes specimen data from publishers globally. | All biodiversity researchers. |

Experimental Protocol: Creating and Enriching a FAIR Digital Specimen

This protocol outlines the end-to-end process for transforming a physical specimen into an enriched FAIR Digital Specimen.

Protocol Title: Generation and Annotation of a FAIR Digital Specimen for Natural Product Research

Objective: To create a machine-actionable digital specimen record from a botanical collection event, enrich it with molecular data, and link it to relevant scholarly publications.

Materials & Reagents:

- Physical Specimen: Vouchered plant collection (e.g., Artemisia annua L.).

- Collection Management System (CMS): e.g., Specify 7, BRAHMS, or EMu.

- PID Minting Service: DataCite or ePIC API credentials.

- SDR Tools: Image cropping, OCR, and NER (Named Entity Recognition) services.

- Molecular Database: NCBI GenBank or BOLD Systems accession number.

- Link Discovery Service: BiCIKL's OpenBiodiv or LifeBlock API.

- FAIR Assessment Tool: F-UJI, FAIR Data Maturity Model evaluator.

Methodology:

Digitization & Data Capture:

- Image the specimen (herbarium sheet) using a high-resolution scanner under standardized lighting.

- Transcribe the label data manually or via OCR (e.g., using SDR's `labelseg` tool).

- Record collection event data (locality, date, coordinates, collector) into the institutional CMS.

PID Assignment & Core Record Creation:

- Execute a POST request to the PID service API (e.g., DataCite) to mint a new DOI, including the metadata required for a findable DOI (creator, title, publisher, publication year, resource type) and the landing-page URL.

- Map the CMS data to a standardized data model (e.g., OpenDS – the emerging standard for Digital Specimens).

- Generate the core DS record as JSON-LD, embedding the PID as the `@id` field. Host this record at a stable URL resolvable via the PID.
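The minting and record-generation steps can be sketched with the standard library. The payload follows the DataCite REST API's JSON:API layout (`data.type` set to `"dois"`, metadata under `attributes`); supplying only a `prefix` asks DataCite to generate the suffix, and the new DOI comes back in the response's `data.id`. The prefix `10.xxxx`, repository credentials, landing URL, and the `ex:` terms in the record are placeholders: the OpenDS model defines its own term names, which this sketch does not reproduce. The request is built but not sent.

```python
import base64
import json
from urllib.request import Request

# DataCite's test (sandbox) endpoint; production uses https://api.datacite.org/dois
DATACITE_API = "https://api.test.datacite.org/dois"


def datacite_payload(prefix, creator, title, publisher, year, landing_url):
    """JSON:API body for registering a findable DOI via the DataCite REST API."""
    return {
        "data": {
            "type": "dois",
            "attributes": {
                "prefix": prefix,         # DataCite generates the DOI suffix
                "event": "publish",       # omit this key to create a draft DOI
                "creators": [{"name": creator}],
                "titles": [{"title": title}],
                "publisher": publisher,
                "publicationYear": year,
                "types": {"resourceTypeGeneral": "PhysicalObject"},
                "url": landing_url,       # where the DS record will be hosted
            },
        }
    }


def build_request(payload, repository_id, password):
    """Prepare (but do not send) an authenticated POST request."""
    token = base64.b64encode(f"{repository_id}:{password}".encode()).decode()
    return Request(
        DATACITE_API,
        data=json.dumps(payload).encode(),
        method="POST",
        headers={"Content-Type": "application/vnd.api+json",
                 "Authorization": f"Basic {token}"},
    )


def digital_specimen_record(doi, cms_row):
    """Minimal JSON-LD DS record with the DOI as @id; 'ex:' terms are hypothetical."""
    return {
        "@context": {"dwc": "http://rs.tdwg.org/dwc/terms/",
                     "ex": "https://example.org/terms/"},
        "@id": f"https://doi.org/{doi}",
        "@type": "ex:DigitalSpecimen",
        "dwc:scientificName": cms_row["taxon"],
        "dwc:recordedBy": cms_row["collector"],
        "dwc:eventDate": cms_row["date"],
        "dwc:locality": cms_row["locality"],
    }


if __name__ == "__main__":
    payload = datacite_payload("10.xxxx", "Example Herbarium", "Artemisia annua L. specimen",
                               "Example Herbarium", 2024, "https://example.org/ds/0001")
    request = build_request(payload, "EXAMPLE.REPO", "secret")  # hypothetical credentials
    # urllib.request.urlopen(request) would submit it; the new DOI is returned in data.id.
    row = {"taxon": "Artemisia annua L.", "collector": "A. Collector",
           "date": "2023-06-01", "locality": "Example locality"}
    print(json.dumps(digital_specimen_record("10.xxxx/0001", row), indent=2))
```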

Data Refinement & Enrichment:

- Submit specimen images to the SDR workflow for automated annotation:
  - Image Segmentation: Isolate label and specimen regions.
  - OCR & NER: Extract text and identify scientific names, locations, and collector names.
  - Output: Annotations are appended to the DS record as linked assertions.

- Link to molecular data: Add a `relation` property in the DS JSON-LD pointing to the GenBank accession URI for a sequenced gene from this specimen.

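SDR's actual segmentation and NER services are not reproduced here. As a toy stand-in, the sketch below uses regular expressions to pull an ISO date and a collector name (assuming a `Coll.:` label convention) out of OCR text, appends each finding as a separate annotation so curated fields are never overwritten, and adds a GenBank link using the NCBI Nucleotide URL pattern `https://www.ncbi.nlm.nih.gov/nuccore/<accession>`. The annotation keys, PID, and accession are hypothetical.

```python
import re
from datetime import datetime, timezone

# Toy patterns standing in for an NER service (label conventions are assumed).
DATE_RE = re.compile(r"\b(\d{4}-\d{2}-\d{2})\b")
COLLECTOR_RE = re.compile(r"Coll\.\s*:?\s*([A-Z][\w.\- ]+?)(?:,|\n|$)")


def extract_entities(label_text):
    """Extract an ISO date and a collector name from OCR'd label text."""
    found = {}
    if match := DATE_RE.search(label_text):
        found["dwc:eventDate"] = match.group(1)
    if match := COLLECTOR_RE.search(label_text):
        found["dwc:recordedBy"] = match.group(1).strip()
    return found


def annotate(record, label_text, agent="toy-regex-ner"):
    """Append machine annotations as separate assertions; curated fields stay untouched."""
    annotations = record.setdefault("annotations", [])
    for term, value in extract_entities(label_text).items():
        annotations.append({
            "target": record["@id"],
            "term": term,
            "value": value,
            "generatedBy": agent,  # provenance of the assertion itself
            "generated": datetime.now(timezone.utc).isoformat(),
        })
    return record


def link_sequence(record, accession):
    """Add a relation to the NCBI Nucleotide (GenBank) page for an accession."""
    uri = f"https://www.ncbi.nlm.nih.gov/nuccore/{accession}"
    relations = record.setdefault("relation", [])
    if uri not in relations:
        relations.append(uri)
    return record


if __name__ == "__main__":
    ocr_text = "Artemisia annua L.\nColl.: J. Smith, 2019-08-14\nExample locality"
    specimen = {"@id": "https://doi.org/10.xxxx/0001"}  # placeholder PID
    annotate(specimen, ocr_text)
    link_sequence(specimen, "XX000000")  # hypothetical accession
    print(specimen)
```

Storing machine output as attributed annotations, rather than overwriting fields, keeps the distinction between curated and automatically extracted values visible to reusers.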

Link Discovery & Contextualization:

- Query the Link Discovery Service with the specimen's taxonomic name and collector data.

- Retrieve URIs for relevant treatments in Plazi's TreatmentBank, sequences in BOLD, and taxa in Wikidata.

- Add these URIs as `seeAlso` or `isDocumentedBy` relationships in the DS record.
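Merging the retrieved links is mostly bookkeeping: reject anything that is not a dereferenceable URI, route each link to a property, and drop duplicates. The sketch assumes the link discovery service has already returned `(kind, uri)` pairs; the kind labels and the routing rule are illustrative choices, and the URIs are hypothetical.

```python
from urllib.parse import urlparse


def is_http_uri(uri):
    """Accept only absolute http(s) URIs so every link can be dereferenced."""
    parsed = urlparse(uri)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


def add_discovered_links(record, discovered):
    """Merge (kind, uri) pairs into the record; return URIs that were rejected.

    Treatments go to 'isDocumentedBy', everything else to 'seeAlso'
    (an illustrative rule, not a BiCIKL convention).
    """
    rejected = []
    for kind, uri in discovered:
        if not is_http_uri(uri):
            rejected.append(uri)
            continue
        key = "isDocumentedBy" if kind == "treatment" else "seeAlso"
        bucket = record.setdefault(key, [])
        if uri not in bucket:  # the same URI may be found via several routes
            bucket.append(uri)
    return rejected


if __name__ == "__main__":
    specimen = {"@id": "https://doi.org/10.xxxx/0001"}  # placeholder PID
    links = [  # hypothetical service output
        ("treatment", "https://treatment.plazi.org/id/EXAMPLE"),
        ("sequence", "https://example.org/bold/EXAMPLE"),
        ("taxon", "https://www.wikidata.org/entity/EXAMPLE"),
        ("taxon", "https://www.wikidata.org/entity/EXAMPLE"),
    ]
    print(add_discovered_links(specimen, links), specimen)
```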

FAIRness Validation & Registration:

- Run the FAIR assessment tool on the final DS record URL to generate a compliance score.

- Make the DS discoverable: publish it through GBIF's Integrated Publishing Toolkit (IPT) so that GBIF indexes it, and register the DS PID and its endpoint with the DiSSCo PID registry.

Diagram Title: FAIR Digital Specimen Creation Workflow

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Key Software & API "Reagents" for Digital Specimen Research

| Item Name | Category | Function in Experiment/Research | Example/Provider |
|---|---|---|---|
| OpenDS Data Model | Standard Schema | Provides the syntactic and semantic blueprint for structuring a Digital Specimen record, ensuring interoperability. | DiSSCo/OpenDS Community |