Balancing the Scales: Advanced Data Augmentation Techniques for Imbalanced Parasite Image Datasets in Biomedical AI

Abstract

Imbalanced datasets represent a critical bottleneck in developing robust AI models for parasite detection and drug discovery. This article provides a comprehensive guide for researchers and drug development professionals on leveraging data augmentation to overcome this challenge. We explore the foundational causes and impacts of data imbalance in parasitology, detail a suite of methodological solutions from classical transformations to generative AI, address key troubleshooting and optimization strategies for real-world application, and present a rigorous framework for model validation and comparative analysis. By synthesizing current best practices and emerging trends, this work aims to equip scientists with the knowledge to build more accurate, generalizable, and clinically viable diagnostic and research tools.

The Imbalance Problem in Parasitology: Understanding the Data Scarcity Challenge

Defining Data Imbalance and Its Prevalence in Parasite Imaging

In the field of digital parasitology, data imbalance occurs when the number of images across different classes of parasites or host cells is significantly unequal. This is a prevalent issue in microscopy image datasets, where some parasite species, life cycle stages, or infected cells are naturally rarer or more difficult to capture than others. For researchers and drug development professionals, this imbalance can severely bias automated detection and classification models, leading to inaccurate diagnostic tools. This guide addresses the core challenges and solutions associated with data imbalance in parasite imaging, providing a structured troubleshooting resource for your experimental workflows.

The tables below summarize the nature and prevalence of class imbalance as documented in recent parasitology research, providing a benchmark for your own dataset analysis.

Table 1: Documented Class Imbalance in Parasite Imaging Datasets

| Parasite/Focus | Dataset Description | Class Distribution & Imbalance Ratio | Citation |
|---|---|---|---|
| Multi-stage Malaria Parasites | 1,364 images; 79,672 cropped cells from BBBC | RBCs: 97.2%, Leukocytes: 0.2%, Schizonts: 0.7%, Trophozoites: 0.5%, Gametocytes: 0.8%, Rings: 0.6% [1] | [1] |
| Nuclei Detection (Histopathology) | 1,744 FOVs; >59,000 annotated nuclei (CSRD) | 'Tumor': 21,088, 'Lymphocyte': 13,575, 'Fibroblast': 8,639, 'Mitotic_figure': 70 instances [2] | [2] |
| Multi-class Parasite Organisms | 34,298 samples of 6 parasites and host cells | Specific ratios not provided; noted as a "diverse dataset" with inherent imbalance [3] | [3] |

Table 2: Impact of Imbalance on Model Performance

| Performance Aspect | Description of Impact |
|---|---|
| Model Bias | Models prioritize features of the majority class (e.g., uninfected RBCs), as their detection leads to higher overall accuracy scores [4] [2]. |
| Minority Class Performance | Low sensitivity for rare parasite stages (e.g., schizonts, gametocytes) or species, which are often clinically critical [1] [5]. |
| Misleading Metrics | High accuracy can mask poor performance on minority classes, making F1-score a more reliable metric for imbalanced datasets [6]. |

Frequently Asked Questions (FAQs)

Q1: My model has a 96% accuracy, but it fails to detect the most critical parasite stage. Why? This is a classic sign of data imbalance. Your model is likely biased towards the majority class (e.g., uninfected cells). Accuracy becomes a misleading metric when classes are imbalanced. A model that simply always predicts "uninfected" will achieve high accuracy if that class dominates the dataset. To get a true picture, examine class-specific metrics like precision, recall, and F1-score for the under-represented parasite stage [2] [1].
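The arithmetic behind this answer can be checked directly. The sketch below uses the class shares reported in Table 1 and scores a hypothetical "model" that always predicts the largest class; the function name is illustrative and not taken from any cited work.

```python
# Class shares (percent) as listed in Table 1 for the multi-stage malaria dataset.
class_share = {
    "RBC": 97.2, "Leukocyte": 0.2, "Schizont": 0.7,
    "Trophozoite": 0.5, "Gametocyte": 0.8, "Ring": 0.6,
}

def majority_baseline(shares):
    """Accuracy and per-class recall of a classifier that always predicts the largest class."""
    majority = max(shares, key=shares.get)
    accuracy = shares[majority] / sum(shares.values())
    # Every sample of the majority class is found; every other class is missed entirely.
    recall = {name: (1.0 if name == majority else 0.0) for name in shares}
    return majority, accuracy, recall

majority, accuracy, recall = majority_baseline(class_share)
print(f"always predicting {majority}: accuracy = {accuracy:.3f}")
print("per-class recall:", recall)
```

A do-nothing classifier already exceeds 96% accuracy on this distribution while detecting no parasite stage at all, which is why per-class recall is the number to inspect first.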

Q2: What is the fundamental difference between data-level and classifier-level solutions? Solutions to data imbalance fall into two main categories:

- Data-Level Solutions: These techniques modify the training dataset itself to create a more balanced class distribution. Examples include oversampling the minority class (e.g., using augmentation) or undersampling the majority class [2] [7].

- Classifier-Level Solutions: These techniques adjust the learning algorithm without changing the input data. This includes using cost-sensitive learning, where a higher penalty is assigned to misclassifying minority class samples, or employing loss functions specifically designed for imbalance, such as Focal Loss [2] [7].

Q3: Is data augmentation always necessary to improve predictions on imbalanced datasets? Not necessarily. While data augmentation is a widely used and powerful tool, some research suggests that adjusting the classifier's decision cutoff or using cost-sensitive learning without augmentation can sometimes yield similar results. The optimal approach depends on your specific dataset and the severity of the imbalance [8].

Troubleshooting Guides

Problem: Poor Detection of Rare Parasite Life Cycle Stages

Issue: Your deep learning model performs well on common stages (e.g., rings) but fails to identify rare stages like schizonts or gametocytes.

Solution Steps:

- Quantify the Imbalance: Begin by calculating the exact distribution of all classes in your dataset, similar to the analysis shown in Table 1. This confirms the severity of the problem [1].

- Apply Advanced Data Augmentation: For the minority stages, go beyond simple rotations and flips. Use more sophisticated techniques like the modified copy-paste method, where you carefully paste instances of rare parasites onto other background images, ensuring you do not create unrealistic overlaps [2].

- Modify the Loss Function: Implement a class-balanced or weighted loss function. This forces the model to pay more attention to the minority classes during training by assigning a higher penalty for misclassifying them [2] [7]. The Focal Loss function is particularly effective as it also reduces the loss contribution from easily classified majority class examples [7].

- Leverage Transfer Learning: Consider using a Deep Transfer Graph Convolutional Network (DTGCN). This approach transfers knowledge from a source domain and uses graph networks to establish topological correlations between classes, which can bridge the gap caused by imbalanced class distributions [1].
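To make the loss-function step above concrete, here is a minimal NumPy sketch of a multi-class focal loss with optional per-class weights. It only illustrates the arithmetic; in practice the same formula would be written in an autodiff framework such as PyTorch or TensorFlow. The function name and the default value of gamma are assumptions, not values taken from the cited studies.

```python
import numpy as np

def focal_loss(probs, labels, gamma=2.0, class_weights=None):
    """Mean focal loss: -w_c * (1 - p_t)^gamma * log(p_t).

    probs:  (n_samples, n_classes) predicted probabilities, rows sum to 1
    labels: (n_samples,) integer class indices
    class_weights: optional (n_classes,) weights, larger for rare classes
    """
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels, dtype=int)
    # Probability the model assigned to the true class of each sample.
    p_t = np.clip(probs[np.arange(len(labels)), labels], 1e-12, 1.0)
    if class_weights is None:
        weights = np.ones(len(labels))
    else:
        weights = np.asarray(class_weights, dtype=float)[labels]
    # gamma = 0 and no weights reduces to ordinary cross-entropy.
    return float(np.mean(-weights * (1.0 - p_t) ** gamma * np.log(p_t)))

easy = [[0.9, 0.1]]   # confident, correct prediction for class 0
hard = [[0.1, 0.9]]   # confident, wrong prediction for class 0
y = [0]
print("easy example, CE vs focal:", focal_loss(easy, y, gamma=0.0), focal_loss(easy, y))
print("hard example, CE vs focal:", focal_loss(hard, y, gamma=0.0), focal_loss(hard, y))
```

The easy example's loss shrinks by a factor of (1 - 0.9)^2, while the hard example keeps most of its loss, so training effort shifts toward the samples the model still gets wrong.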

Problem: Handling High-Density Images with Overlapping Instances

Issue: In thick blood smears or histopathology images, cells and parasites often overlap, and the background is complex. Standard augmentation can exacerbate foreground-background imbalance.

Solution Steps:

- Use Instance Segmentation Models: Employ models like Mask R-CNN, which are designed for instance segmentation. This allows the model to learn the precise boundaries of each object, which is crucial for counting in dense scenes [2].

- Implement a Hybrid Augmentation Strategy: Combine the modified copy-paste augmentation with a weight-balancing method in the loss function. This hybrid approach specifically addresses the dual imbalance of too few minority class instances and the potential disruption of the foreground-background ratio [2].

- Validate with Care: After augmentation, visually inspect a sample of generated images to ensure that pasted instances look natural and that the overall image quality and context are preserved.

Experimental Protocols for Imbalanced Data

Protocol 1: Modified Copy-Paste Augmentation with Weighted Loss

This hybrid methodology is designed to rectify class imbalance without compromising the detection of objects in dense images [2].

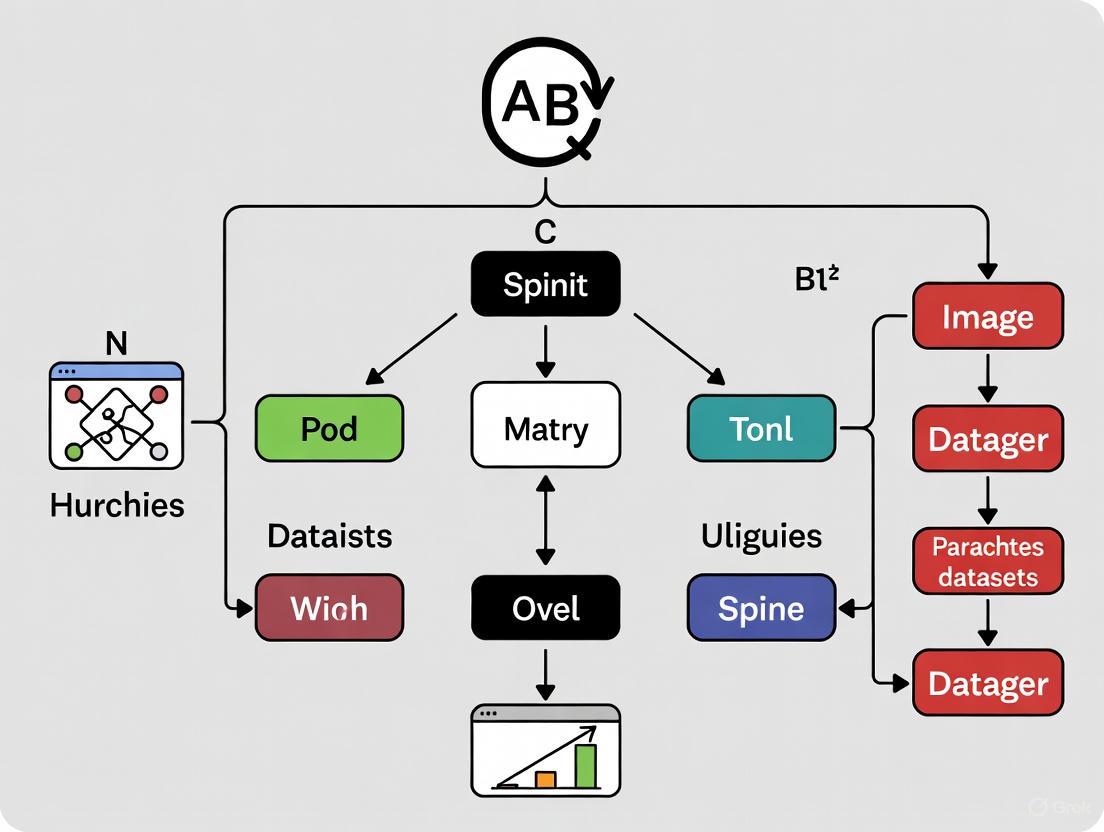

Workflow Diagram:

Step-by-Step Methodology:

- Instance Extraction: Use an instance segmentation model like Mask R-CNN (for example, with a ResNeXt101-32x8d backbone and a Feature Pyramid Network) to generate precise masks for all objects, especially those from minority classes [2].

- Background Preparation: Use images from your dataset that contain relevant background but may be lacking in minority class instances.

- Paste with Care: For each minority class instance, copy its mask and paste it onto a prepared background image. The key modification is to programmatically avoid pasting new instances in a way that creates unrealistic overlaps with existing objects [2].

- Train with Weighted Loss: Train your detection model (e.g., Mask R-CNN) on the augmented dataset. Use a class-weighted loss in the classification head (e.g., class-weighted cross-entropy, optimized with Stochastic Gradient Descent) to further penalize errors on the minority classes [2].
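The "paste with care" step can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the implementation from [2]: images are single-channel arrays, existing objects are tracked in one boolean occupancy mask, and a placement is accepted only if the pasted mask touches no occupied pixel. The function and parameter names are hypothetical.

```python
import numpy as np

def paste_instance(background, occupied, patch, patch_mask, rng, max_tries=50):
    """Paste a minority-class instance at a random location that overlaps nothing.

    background: (H, W) image; occupied: (H, W) bool mask of existing objects
    patch, patch_mask: (h, w) pixel values and bool mask of the rare instance
    Returns (new_image, new_occupied, placed).
    """
    H, W = background.shape
    h, w = patch.shape
    image, occ = background.copy(), occupied.copy()
    for _ in range(max_tries):
        top = int(rng.integers(0, H - h + 1))
        left = int(rng.integers(0, W - w + 1))
        region = occ[top:top + h, left:left + w]       # view into occ
        if np.any(region & patch_mask):
            continue                                    # would overlap an existing object
        view = image[top:top + h, left:left + w]        # view into image
        view[patch_mask] = patch[patch_mask]            # copy only the masked pixels
        region |= patch_mask                            # mark the new instance as occupied
        return image, occ, True
    return image, occ, False                            # no free location found

rng = np.random.default_rng(0)
background = np.zeros((64, 64))
occupied = np.zeros((64, 64), dtype=bool)
occupied[:, :32] = True                                 # left half is already crowded
patch = np.ones((8, 8))
patch_mask = np.ones((8, 8), dtype=bool)
image, new_occupied, placed = paste_instance(background, occupied, patch, patch_mask, rng)
print("placed:", placed, "| pixels written in crowded half:", image[:, :32].sum())
```

Returning the updated occupancy mask lets several rare instances be pasted in sequence without colliding with each other. A production version would also blend patch edges and update the annotation file.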

Protocol 2: Deep Transfer Learning with Graph Convolutional Networks

This protocol is effective for transferring knowledge from a balanced source domain to an imbalanced or unlabeled target domain, such as when you have limited images of a rare parasite [1].

Step-by-Step Methodology:

- Feature Extraction: Train or use a pre-trained Convolutional Neural Network (CNN) on your source dataset (e.g., a public dataset with good class balance) to act as a feature extractor [1].

- Graph Building: Build a source transfer graph that captures the discriminative morphological characteristics and the relationships between different parasite classes from the source domain [1].

- Knowledge Transfer: Feed the unlabeled target data (your imbalanced dataset) through the feature extractor. The Graph Convolutional Network (GCN) is then implemented to establish topological correlations between the source class groups and the unlabeled target samples. This step effectively bridges the distribution gap caused by imbalance [1].

- Parasite Recognition: The model learns to recognize multi-stage malaria parasites in the target dataset by leveraging the transferred graph feature representations, significantly improving recognition of under-represented stages [1].
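For readers unfamiliar with graph convolutions, the following NumPy sketch shows one generic propagation step (symmetric normalization with self-loops, as commonly used for GCNs). It is not the DTGCN architecture from [1]; the toy graph, with two source class prototypes and one unlabeled target sample, is an assumption made purely for illustration.

```python
import numpy as np

def gcn_layer(adjacency, features, weights):
    """One graph-convolution step: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    a_hat = adjacency + np.eye(adjacency.shape[0])          # add self-loops
    deg_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = a_hat * deg_inv_sqrt[:, None] * deg_inv_sqrt[None, :]
    return np.maximum(a_norm @ features @ weights, 0.0)     # ReLU

# Node 0: source prototype of class A, node 1: source prototype of class B,
# node 2: unlabeled target sample connected to node 0 by feature similarity.
adjacency = np.array([[0.0, 0.0, 1.0],
                      [0.0, 0.0, 0.0],
                      [1.0, 0.0, 0.0]])
features = np.array([[1.0, 0.0],
                     [0.0, 1.0],
                     [0.2, 0.1]])
weights = np.eye(2)                                          # identity, to expose the mixing

propagated = gcn_layer(adjacency, features, weights)
print(propagated)
```

After one step the target node's features move toward the class prototype it is linked to, which is the mechanism by which graph edges carry class information to unlabeled samples.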

The Scientist's Toolkit

Table 3: Essential Research Reagents & Computational Tools

| Item/Tool Name | Function/Application in Research | Example in Parasite Imaging |
|---|---|---|
| Giemsa Stain | Stains parasite chromatin purple and cytoplasm blue, enabling visualization under a microscope [4] [1]. | Standard for staining Plasmodium parasites in thin and thick blood smears for creating image datasets [1] [5]. |
| Romanowsky Stain | A group of stains (including Giemsa) preferred in tropical climates for its stability in humidity [6]. | Used for thick blood smears in automated systems for detecting Plasmodium vivax [6]. |
| Mask R-CNN | A deep learning model for instance segmentation; detects, classifies, and generates a pixel-wise mask for each object [2]. | Used for nuclei detection in histopathology and can be adapted for segmenting individual parasites in dense blood smear images [2]. |
| Graph Convolutional Network (GCN) | A neural network that operates on graph-structured data, capturing relationships between entities [1]. | Used in DTGCN models to correlate features from balanced and imbalanced datasets for multi-stage parasite recognition [1]. |
| Focal Loss | A modification of standard cross-entropy loss that down-weights the loss for well-classified examples, focusing training on hard-to-classify instances [7]. | Improves object detection performance for rare parasite stages in highly imbalanced datasets [7]. |

Frequently Asked Questions

FAQ 1: What are the primary causes of data imbalance in parasite image datasets? Data imbalance in parasite image datasets stems from two main sources: biological and logistical. Biologically, some parasite species or life stages are inherently rare or difficult to obtain in clinical samples, leading to a natural under-representation in datasets [9]. Logistically, in resource-limited settings, where the disease burden is often highest, the collection of large, balanced datasets is hampered by a scarcity of skilled personnel, limited laboratory equipment, and challenges in maintaining consistent staining quality across samples [10] [6].

FAQ 2: Beyond collecting more images, what techniques can address class imbalance? A range of data augmentation and algorithmic techniques can effectively address imbalance without solely relying on new data collection. Traditional data augmentation manipulates existing images through transformations like rotation and scaling to artificially expand the dataset [11]. For more complex challenges, deep learning-based augmentation can generate realistic synthetic image variations. Algorithmically, one-class classification (OCC) is a powerful approach that learns a model using only samples from the majority class, treating the rare class (e.g., a rare parasite) as an anomaly [9].

FAQ 3: How does one-class classification work for rare parasite detection? One-class classification (OCC) frames the problem as anomaly detection. Instead of learning to distinguish between multiple classes, the model is trained exclusively on images of the majority class (e.g., uninfected cells). It learns the "normal" feature patterns of that class. During inference, when presented with a new image, the model identifies anything that deviates significantly from this learned norm as an anomaly or outlier, which would correspond to the rare, parasitic organism [9]. The Image Complexity-based OCC (ICOCC) method further enhances this by applying perturbations to images; a model that can correctly classify the original and perturbed versions is forced to learn more robust and inherent features of the single class [9].

FAQ 4: What is an ensemble learning approach, and why is it effective? Ensemble learning combines predictions from multiple machine learning models to improve overall accuracy and robustness. Instead of relying on a single model, an ensemble leverages the strengths of diverse architectures. For example, one study combined a custom CNN with pre-trained models like VGG16, VGG19, ResNet50V2, and DenseNet201 [10]. This approach is effective because different models may learn complementary features from the data. By integrating them, the ensemble reduces variance and is less likely to be misled by the specific limitations of any one model, which is particularly beneficial for complex and variable medical images [10].

Troubleshooting Guides

Problem: Model performance is poor on rare parasite classes. Diagnosis: The model is biased towards the majority class due to severe data imbalance.

Solution Guide:

- Implement Deep Learning Data Augmentation: Use advanced techniques, such as Generative Adversarial Networks (GANs), to synthesize high-quality, artificial images of the rare parasite classes. This increases their representation in the training set without requiring new sample collection [11].

- Adopt a One-Class Classification Framework: Reframe the problem. Train a model to recognize only the majority class (e.g., uninfected cells) and flag the rare parasites as anomalies. The ICOCC method has shown state-of-the-art performance on imbalanced medical image datasets [9].

- Utilize Ensemble Learning: Combine multiple models to capture a wider range of features. A two-tiered ensemble using hard voting and adaptive weighted averaging has been shown to outperform standalone models in malaria detection [10].

Problem: Inconsistent image quality is hampering model generalization. Diagnosis: Variations in staining, lighting, and microscope settings create noise that the model learns instead of the biological features.

Solution Guide:

- Integrate an Image Quality Assessment Module: As part of your preprocessing pipeline, use a classifier to automatically assess input image quality. One study used feature extraction (e.g., histogram bins, texture analysis with GLCM) and an SVM to achieve a 95% F1-score for this task, filtering out poor-quality images before they reach the main model [6].

- Apply Standardized Pre-processing:

  - Color Space Conversion: Convert images from RGB to grayscale to reduce complexity for initial morphological feature analysis [12].

  - Segmentation: Use Otsu thresholding and watershed techniques to differentiate foreground from background and identify regions of interest (e.g., cells) [12] [6].

  - Noise Reduction: Apply smoothing filters like Gaussian or median filters to reduce noise and artifacts in the microscopic images [10].
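The grayscale conversion and Otsu thresholding steps can be sketched with NumPy alone. This is a minimal illustration, assuming float RGB images and the common BT.601 luminance weights; libraries such as OpenCV or scikit-image provide tested equivalents, and the watershed and smoothing steps are not shown.

```python
import numpy as np

def to_grayscale(rgb):
    """Luminance-weighted grayscale conversion (BT.601 weights)."""
    return rgb[..., :3] @ np.array([0.299, 0.587, 0.114])

def otsu_threshold(gray, bins=256):
    """Return the intensity that maximizes between-class variance."""
    hist, edges = np.histogram(gray, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist)                          # pixel count at or below each bin
    w1 = hist.sum() - w0                          # pixel count above each bin
    sum0 = np.cumsum(hist * centers)
    mu0 = sum0 / np.maximum(w0, 1)                # mean intensity of the lower class
    mu1 = (sum0[-1] - sum0) / np.maximum(w1, 1)   # mean intensity of the upper class
    between = w0 * w1 * (mu0 - mu1) ** 2
    return float(centers[np.argmax(between)])

# Toy image: dark background on the left, bright "cells" on the right.
rgb = np.zeros((4, 4, 3))
rgb[:, :2, :] = 0.2
rgb[:, 2:, :] = 0.8
gray = to_grayscale(rgb)
threshold = otsu_threshold(gray)
foreground = gray > threshold
print("threshold:", threshold)
print(foreground.astype(int))
```

Which side of the threshold counts as foreground depends on the stain and illumination, so the comparison direction should be checked against a few real images.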

Experimental Protocols & Data

| Technique Category | Specific Method | Key Performance Metrics | Application Context |
|---|---|---|---|
| Ensemble Learning | Adaptive ensemble of VGG16, VGG19, ResNet50V2, DenseNet201 [10] | Accuracy: 97.93%, F1-Score: 0.9793 [10] | Malaria parasite detection in red blood cell images |
| One-Class Classification | Image Complexity OCC (ICOCC) with perturbation [9] | Outperformed four state-of-the-art methods on four clinical datasets [9] | Anomaly detection in imbalanced medical images |
| Deep Learning Augmentation | Use of GANs for image synthesis and denoising [10] | Improves model robustness and generalization on scarce data [10] [11] | Generating artificial data for minority classes |
| Transfer Learning & Optimization | Fine-tuning VGG19, InceptionV3, InceptionResNetV2 with Adam/SGD optimizers [12] | Highest Accuracy: 99.96% (InceptionResNetV2 + Adam) [12] | Multi-species parasitic organism classification |

Detailed Protocol: Image Complexity-Based One-Class Classification (ICOCC)

This protocol is adapted from the method proposed to handle imbalanced medical image data [9].

Objective: To train a deep learning model to detect anomalies (rare parasites) using only samples from a single, majority class (e.g., uninfected cells).

Materials:

- A curated dataset of images from the majority class.

- A deep learning framework (e.g., TensorFlow, PyTorch).

Methodology:

- Perturbation: For each image in the training set, generate a set of perturbed versions. Effective perturbations include:

  - Displacement: Randomly translating parts of the image.

  - Rotation: Applying slight rotations.

  Combining displacement and rotation has been shown to be particularly effective [9].

- Labeling: Assign the original image a label of "1" and all its perturbed versions a label of "0".

- Model Training: Train a Convolutional Neural Network (CNN) as a binary classifier to distinguish between the original images (class 1) and the perturbed images (class 0).

- Inference: When a new test image is presented, the model will assign a high probability for "class 1" if the image's features are consistent with the learned patterns of the majority class. A low probability indicates an anomaly, i.e., a potential rare parasite.
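The perturbation and labeling steps of this protocol can be sketched as follows. This is an illustrative assumption of how the training set might be assembled, not the code from [9]: only the displacement perturbation is shown (a random patch is copied to a slightly shifted position), slight rotation is omitted because it needs an interpolation routine, and all names and default sizes are hypothetical.

```python
import numpy as np

def displace_patch(image, rng, patch=8, max_shift=4):
    """Copy a random square patch of the image to a slightly shifted location."""
    H, W = image.shape
    out = image.copy()
    top = int(rng.integers(max_shift, H - patch - max_shift + 1))
    left = int(rng.integers(max_shift, W - patch - max_shift + 1))
    dy, dx = 0, 0
    while dy == 0 and dx == 0:                     # make sure the patch actually moves
        dy, dx = (int(v) for v in rng.integers(-max_shift, max_shift + 1, size=2))
    out[top + dy:top + dy + patch, left + dx:left + dx + patch] = \
        image[top:top + patch, left:left + patch]
    return out

def build_icocc_training_set(images, rng, n_perturbed=2):
    """Originals are labeled 1, perturbed copies 0, as in the labeling step above."""
    samples, labels = [], []
    for img in images:
        samples.append(img)
        labels.append(1)
        for _ in range(n_perturbed):
            samples.append(displace_patch(img, rng))
            labels.append(0)
    return np.stack(samples), np.array(labels)

rng = np.random.default_rng(0)
majority_images = rng.random((5, 32, 32))          # stand-in for uninfected-cell images
X, y = build_icocc_training_set(majority_images, rng)
print(X.shape, y.tolist())
```

The resulting arrays can be fed to any binary CNN classifier; at inference time, the predicted probability of class 1 serves as the normality score.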

Detailed Protocol: Ensemble Learning for Robust Detection

This protocol is based on an optimized transfer learning approach for malaria diagnosis [10].

Objective: To improve diagnostic accuracy and robustness by combining predictions from multiple pre-trained models.

Materials:

- A labeled dataset of parasitized and uninfected cells.

- Pre-trained CNN architectures (e.g., VGG16, ResNet50V2, DenseNet201).

Methodology:

- Model Selection & Fine-tuning: Select multiple pre-trained models. Fine-tune each model on your specific parasite dataset.

- Prediction Generation: Each model in the ensemble processes the input image and outputs a prediction (e.g., parasitized or uninfected).

- Evidence Combination: Use a two-tiered combination strategy:

  - Hard Voting: The final classification is based on the majority vote from all models.

  - Adaptive Weighted Averaging: Dynamically assign weights to each model's prediction based on its performance on a validation set, giving more influence to stronger models [10].

- Final Decision: The combined evidence from all models is used to make the final, robust classification.
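The two combination rules can be illustrated with NumPy. This is a sketch under assumptions: each model outputs a probability of "parasitized" per image, weights are simply proportional to validation scores, and how the two tiers are chained in [10] is not reproduced here. The function names and example numbers are hypothetical.

```python
import numpy as np

def hard_vote(probabilities, threshold=0.5):
    """Majority vote over per-model binary decisions.

    probabilities: (n_models, n_samples) predicted probability of the positive class
    """
    votes = (np.asarray(probabilities) >= threshold).astype(int)
    return (votes.sum(axis=0) * 2 > votes.shape[0]).astype(int)

def weighted_average(probabilities, val_scores, threshold=0.5):
    """Average probabilities with weights proportional to validation performance."""
    w = np.asarray(val_scores, dtype=float)
    w = w / w.sum()
    combined = (w[:, None] * np.asarray(probabilities)).sum(axis=0)
    return (combined >= threshold).astype(int)

# Three models, three images. The third model scored worst on validation.
probs = [[0.90, 0.10, 0.20],
         [0.80, 0.55, 0.40],
         [0.30, 0.55, 0.90]]
val_scores = [0.95, 0.90, 0.60]

voted = hard_vote(probs)
averaged = weighted_average(probs, val_scores)
print("hard voting:       ", voted.tolist())
print("weighted averaging:", averaged.tolist())
```

On the second image two weak "yes" votes win the hard vote, while the weighted average sides with the strongest model's confident "no". Differences of this kind are what a second tier is meant to resolve.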

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Materials for Parasite Image Analysis Experiments

| Item | Function & Application |
|---|---|
| Romanowsky-Stained Thick Blood Smears | A stable staining method preferred in humid, tropical climates for visualizing malaria parasites and host cells [6]. |
| Pre-trained Deep Learning Models (VGG19, InceptionV3, ResNet50, etc.) | Provides a powerful starting point for feature extraction through transfer learning, often achieving >99% accuracy in classification tasks when fine-tuned [12]. |
| Optimizers (Adam, SGD, RMSprop) | Algorithms used to fine-tune model parameters during training; choice of optimizer can significantly impact final performance (e.g., Adam achieved 99.96% accuracy with InceptionResNetV2) [12]. |
| Otsu Thresholding & Watershed Algorithm | Image processing techniques used to segment and separate overlapping cells in smears, crucial for identifying individual regions of interest [12] [6]. |
| Convolutional Autoencoders (CAE) | A type of neural network used for one-class classification and anomaly detection by learning to reconstruct "normal" input images [9]. |

Frequently Asked Questions (FAQs) on Bias in Diagnostic AI

FAQ 1: What are the most common sources of bias in medical AI models for diagnostics? Bias can be introduced at multiple stages of the AI development pipeline. The most common sources include:

- Data Bias: This occurs when the training datasets are not representative of the target population. For instance, models trained predominantly on data from specific demographic groups (e.g., overrepresenting non-Hispanic Caucasian patients) will perform worse for underrepresented groups and tend toward algorithmic underestimation in those groups [13]. Other data issues include non-randomly missing patient data, such as more incomplete records for patients of low socioeconomic status, and the failure to capture important data like Social Determinants of Health (SDoH) [13].

- Model Development Bias: This happens when the model's design and evaluation are flawed. An overreliance on whole-cohort performance metrics (like overall accuracy) can obscure poor performance for minority subgroups. If a model is only optimized for high accuracy on a majority class, it will perform much worse on underrepresented patient groups [13].

- Annotation Bias: The labels used to train AI models may reflect the cognitive biases of the human experts who create them, thereby perpetuating and amplifying existing healthcare disparities [13].

FAQ 2: How does imbalanced data specifically lead to model failure in parasite detection? In parasite detection, imbalanced data is a fundamental challenge. Models are often trained on datasets where images of infected cells (the minority class) are vastly outnumbered by images of uninfected cells (the majority class). Most machine learning algorithms have an inherent bias toward the majority class [14]. The consequence is a model that achieves high accuracy by simply always predicting "uninfected," thereby failing completely at its primary task: identifying parasites. This leads to a high rate of false negatives, where infected cells are misclassified, potentially resulting in misdiagnosis and inadequate treatment for patients [15] [14].

FAQ 3: What performance metrics should I use to detect bias in imbalanced classification tasks? For imbalanced datasets, standard metrics like accuracy are misleading and should not be relied upon alone. Instead, you should use a combination of metrics that are sensitive to class imbalance [16] [17].

Table: Key Performance Metrics for Imbalanced Classification

| Metric | Focus | Interpretation in Parasite Detection |
|---|---|---|
| Precision | The accuracy of positive predictions. | Of all cells predicted as infected, how many were truly infected? (Low precision means many false alarms). |
| Recall (Sensitivity) | The ability to find all positive instances. | Of all truly infected cells, how many did the model correctly identify? (Low recall means many missed infections). |
| F1-Score | The harmonic mean of precision and recall. | A single metric that balances the concern between false positives and false negatives. |
| ROC-AUC | The model's ability to separate classes across all thresholds. | A threshold-independent measure of overall ranking performance. |
| Confusion Matrix | A breakdown of correct and incorrect predictions. | Provides a complete picture of true positives, false positives, true negatives, and false negatives [17]. |

A critical best practice is to optimize the decision threshold rather than rely on the default of 0.5, as this can significantly improve recall for the minority class without complex resampling [16]. Furthermore, these metrics must be evaluated not just on the whole dataset but also on key patient subgroups (e.g., by age, gender, or ethnicity) to uncover hidden biases [13].
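The metrics in the table and the threshold adjustment described above can be tried out with plain Python. The scores below are made-up validation outputs for eight uninfected and two infected cells, and the helper names are illustrative. In a real workflow the threshold would be tuned on a validation split and then reported on a separate test split.

```python
def precision_recall_f1(y_true, y_pred):
    """Precision, recall, and F1 for the positive class (label 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def predict(scores, threshold):
    """Turn probabilities into 0/1 decisions at the given threshold."""
    return [int(s >= threshold) for s in scores]

def best_f1_threshold(y_true, scores, candidates):
    """Pick the candidate threshold with the highest F1 (first one on ties)."""
    return max(candidates, key=lambda t: precision_recall_f1(y_true, predict(scores, t))[2])

y_true = [0] * 8 + [1] * 2
scores = [0.05, 0.10, 0.12, 0.15, 0.20, 0.22, 0.27, 0.47, 0.37, 0.60]
candidates = [i / 20 for i in range(1, 20)]

default_metrics = precision_recall_f1(y_true, predict(scores, 0.5))
tuned_threshold = best_f1_threshold(y_true, scores, candidates)
tuned_metrics = precision_recall_f1(y_true, predict(scores, tuned_threshold))
print("threshold 0.50 -> precision, recall, F1:", default_metrics)
print(f"threshold {tuned_threshold:.2f} -> precision, recall, F1:", tuned_metrics)
```

In this toy case lowering the threshold recovers the missed infected cell at the cost of one false alarm, a trade that is usually acceptable when false negatives are the costlier error.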

FAQ 4: When should I use data augmentation techniques like SMOTE versus using a strong classifier? The choice depends on your model and data. Recent evidence suggests a tiered approach [16]:

- First, try strong classifiers like XGBoost or CatBoost and optimize the probability threshold. These models are often robust to class imbalance and may yield good performance without any resampling.

- Use augmentation techniques like SMOTE with weaker learners, such as decision trees, support vector machines, or multilayer perceptrons, which are more susceptible to class imbalance.

- Consider simpler methods first. Random oversampling or undersampling can often yield results similar to more complex methods like SMOTE and are computationally cheaper [16] [14].
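As a baseline for the "simpler methods first" advice, random oversampling takes only a few lines of standard-library Python. This is a minimal sketch with illustrative names; the imbalanced-learn library offers a maintained equivalent. Resampling should be applied to the training split only, never to validation or test data.

```python
import random
from collections import Counter

def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen minority samples until every class matches the largest."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_samples, out_labels = list(samples), list(labels)
    for cls, n in counts.items():
        pool = [s for s, y in zip(samples, labels) if y == cls]
        extra = [rng.choice(pool) for _ in range(target - n)]   # sampled with replacement
        out_samples.extend(extra)
        out_labels.extend([cls] * len(extra))
    return out_samples, out_labels

# Hypothetical image identifiers: 8 uninfected cells, 2 schizonts.
samples = [f"img_{i:02d}" for i in range(10)]
labels = ["uninfected"] * 8 + ["schizont"] * 2

balanced_samples, balanced_labels = random_oversample(samples, labels)
print(Counter(balanced_labels))
```

For image data, pairing each duplicated sample with a random transformation at load time avoids showing the network identical copies.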

Troubleshooting Guides

Guide 1: Diagnosing and Mitigating Data Bias

Problem: Your model performs well during validation but fails dramatically when deployed in a new hospital or on a different patient population.

Diagnosis: This is a classic sign of data bias and a covariate shift, where the statistical distribution of the deployment data differs from the training data [18].

Step-by-Step Solution:

- Characterize Your Training Data: Systematically document the sociodemographics (age, gender, race, ethnicity) and technical sources (scanner type, imaging protocol) of your training set. If certain groups are underrepresented, your model is at high risk for bias [13].

- Perform Subgroup Analysis: Do not just evaluate your model on the entire test set. Slice your performance metrics (recall, F1-score) by different demographic and clinical subgroups to identify for whom the model fails [13] [17].

- Cultivate Diverse Datasets: Proactively collect data from multiple sites and diverse patient populations. International data-sharing initiatives can help create more representative datasets [13].

- Apply Debiasing Techniques: If diverse data collection is not immediately possible, employ statistical debiasing methods. For image data, this can include advanced augmentation techniques tailored to the minority class. For tabular data, consider using the imbalanced-learn library for methods like SMOTE, though with the caveats noted in the FAQs [14].
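The subgroup analysis step in this guide amounts to slicing a metric by a metadata column. A minimal sketch with standard-library Python follows; the site labels and predictions are invented for illustration.

```python
from collections import defaultdict

def recall_by_subgroup(y_true, y_pred, groups):
    """Recall of the positive class (label 1), computed separately per subgroup."""
    tp, fn = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            if p == 1:
                tp[g] += 1
            else:
                fn[g] += 1
    return {g: tp[g] / (tp[g] + fn[g]) for g in set(tp) | set(fn)}

# Hypothetical test set drawn from two collection sites.
y_true = [1, 1, 1, 1, 0, 0,  1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0,  1, 0, 0, 0, 0, 0]
groups = ["site_A"] * 6 + ["site_B"] * 6

overall = sum(p for t, p in zip(y_true, y_pred) if t == 1) / sum(y_true)
per_site = recall_by_subgroup(y_true, y_pred, groups)
print("overall recall:", overall)
print("recall per site:", per_site)
```

The pooled recall looks moderate, yet it hides near-total failure at one site, which is the pattern that signals covariate shift between acquisition settings.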

Guide 2: Addressing Poor Performance on a Specific Parasite Life Stage

Problem: Your parasite detection model accurately identifies late-stage trophozoites but consistently misses early ring stages.

Diagnosis: This is likely due to a combination of data imbalance (fewer ring-stage examples) and feature complexity (ring stages are smaller and have less distinct visual features) [15] [19].

Step-by-Step Solution:

- Augment Ring-Stage Data: Use data augmentation techniques specifically for the ring-stage class. This can include standard image transformations (rotation, scaling, elastic deformations) and, if feasible, more advanced methods like using generative models to create synthetic ring-stage images.

- Implement Strategic Oversampling: Apply SMOTE or similar variants in your feature space to generate more examples for the ring-stage class. In a chemistry context, Borderline-SMOTE has been used to interpolate along the boundaries of minority samples to improve model decision boundaries [14].

- Leverage Transfer Learning: Fine-tune a pre-trained deep learning model (e.g., a CNN) on a dataset enriched with ring-stage images. Pre-trained models can learn robust features even from limited data.

- Utilize Specialized Architectures: Employ a neural network specifically designed for segmentation and classification, such as Cellpose, which can be re-trained with a few annotated examples to segment challenging parasite stages accurately [19].
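The feature-space oversampling step can be illustrated with a simplified SMOTE-style interpolation in NumPy. This is a sketch of the core idea only: each synthetic point lies on the segment between a minority sample and one of its nearest minority neighbors. It is not the imbalanced-learn implementation and does not include the Borderline variant. It assumes the inputs are feature vectors (for example CNN embeddings of ring-stage crops), not raw pixels.

```python
import numpy as np

def smote_like(minority, n_new, k=3, seed=0):
    """Generate n_new synthetic feature vectors by neighbor interpolation."""
    rng = np.random.default_rng(seed)
    minority = np.asarray(minority, dtype=float)
    # Pairwise Euclidean distances between minority samples.
    dists = np.linalg.norm(minority[:, None, :] - minority[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)                  # a sample is not its own neighbor
    k = min(k, len(minority) - 1)
    neighbors = np.argsort(dists, axis=1)[:, :k]     # indices of the k nearest neighbors
    synthetic = []
    for _ in range(n_new):
        i = int(rng.integers(len(minority)))
        j = int(rng.choice(neighbors[i]))
        lam = rng.random()                           # position along the segment, in [0, 1)
        synthetic.append(minority[i] + lam * (minority[j] - minority[i]))
    return np.array(synthetic)

# Hypothetical 2-D embeddings of five ring-stage samples.
ring_features = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]])
new_points = smote_like(ring_features, n_new=10)
print(new_points.round(2))
```

Because every synthetic point is a convex combination of two real samples, it stays inside the region already covered by the minority class; this is also why interpolating raw pixels tends to produce blurry, unrealistic images.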

Experimental Protocols for Bias Mitigation

Protocol 1: A Workflow for Continuous Single-Cell Imaging and Analysis

This protocol, adapted from research on Plasmodium falciparum, enables high-resolution tracking of dynamic processes, which is crucial for generating high-quality, balanced datasets for model training [19].

Objective: To continuously monitor live parasites throughout the intraerythrocytic life cycle to capture rare events and stages for a balanced dataset.

Materials:

- Airyscan Microscope: For label-free, high-resolution 3D imaging with low phototoxicity.

- CellBrite Red Stain: A membrane dye for facilitating accurate cell boundary annotation (used for training data only).

- Cellpose: A pre-trained, deep-learning-based cell segmentation algorithm.

- Ilastik / Imaris Software: For image annotation and segmentation validation.

Methodology:

- Image Acquisition: Culture P. falciparum-infected erythrocytes. Acquire 3D image stacks of single cells over 48 hours using an Airyscan microscope, alternating between Differential Interference Contrast (DIC) and fluorescence modes.

- Data Annotation (Training Set):

  - Use the fluorescence images (CellBrite Red channel) to create ground truth annotations.

  - For uninfected erythrocytes, use the carving workflow in Ilastik for volume segmentation.

  - For infected erythrocytes, manually annotate each parasite compartment using the surface rendering mode in Imaris.

- Model Training: Re-train the Cellpose neural network on the annotated datasets. It is recommended to train separate models for different parasite stages (e.g., a "ring stage model" and a "late stage model") to improve segmentation accuracy for shapes with low convexity.

- Automated Analysis and Tracking: Apply the trained Cellpose model to segment new DIC image stacks automatically. This allows for the extraction of spatial and temporal information from individual parasites across the entire life cycle.

Diagram Title: Single-Cell Imaging and Analysis Workflow

Protocol 2: Physics-Informed Data Augmentation for Imbalanced Data

This protocol outlines a data augmentation strategy that integrates physical principles to generate realistic synthetic data for rare events, such as extreme parasite loads or unusual morphological presentations [20].

Objective: To enrich an imbalanced dataset by generating physically plausible samples of minority classes.

Materials:

- Historical Data: Time-series or feature data from experimental observations.

- Clustering Algorithm: (e.g., K-means) for pattern identification.

- Physics-Based Constraints: Domain knowledge (e.g., mass conservation, biological growth constraints) to guide data generation.

- Physics-Informed Neural Network (PINN): A deep learning model that incorporates physical laws into its loss function.

Methodology:

- Pattern Identification: Perform clustering analysis (e.g., K-means) on historical data from the minority class to identify characteristic patterns or temporal signatures (e.g., specific patterns of parasite development).

- Physics-Guided Augmentation: Generate new synthetic samples for the minority class by interpolating between identified patterns while constraining the generation process with known physical or biological laws. This ensures the new data is realistic and adheres to domain knowledge.

- Model Training with Augmented Data: Integrate the augmented dataset with a Physics-Informed Neural Network (PINN). The PINN uses a loss function that includes both data error and a penalty for violating the physical constraints (e.g., Total Loss = Mean Squared Error + λ * Physics Violation).

- Validation: Rigorously validate the model on a hold-out test set containing real, rare events to ensure the augmented data has improved performance on the minority class without compromising overall model integrity.
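The composite loss in the model-training step can be illustrated with a small NumPy function. This is only a sketch: the two constraints (non-negative parasite counts and a capped growth factor between consecutive time points), the function name, and the default values of `lam` and `max_growth` are assumptions chosen for illustration, not constraints taken from [20].

```python
import numpy as np

def physics_informed_loss(y_pred, y_true, lam=0.1, max_growth=2.0):
    """Total loss = MSE + lam * physics violation (illustrative constraints)."""
    y_pred = np.asarray(y_pred, dtype=float)
    y_true = np.asarray(y_true, dtype=float)
    mse = np.mean((y_pred - y_true) ** 2)

    # Hypothetical constraint 1: predicted parasite counts cannot be negative.
    negativity = np.mean(np.maximum(-y_pred, 0.0) ** 2)

    # Hypothetical constraint 2: growth between consecutive time points
    # cannot exceed a factor of `max_growth`.
    excess = y_pred[..., 1:] - max_growth * np.maximum(y_pred[..., :-1], 0.0)
    growth = np.mean(np.maximum(excess, 0.0) ** 2)

    return mse + lam * (negativity + growth)

# A prediction that matches the data and respects both constraints costs 0.
print(physics_informed_loss([1, 2, 4, 8], [1, 2, 4, 8]))
```

Raising `lam` makes implausible trajectories more expensive relative to the data error, so it is typically tuned on the validation set.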

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential Tools for AI-Based Parasite Diagnostics Research

| Item | Function | Application Note |

|---|---|---|

| Airyscan Microscope | Enables high-resolution, continuous 3D live-cell imaging with low photodamage. | Critical for capturing dynamic parasite processes and generating high-quality training data [19]. |

| Cellpose | A pre-trained, deep-learning-based tool for 2D and 3D cell segmentation. | Can be fine-tuned with a small number of annotated images for specific segmentation tasks in parasite-infected cells [19]. |

| Imbalanced-Learn Library | A Python library offering a suite of resampling techniques (e.g., SMOTE, ADASYN, undersampling). | Use for tackling class imbalance in tabular and feature data; start with simple random oversampling before moving to complex methods [16]. |

| Physics-Informed Neural Network (PINN) | A type of neural network that embeds physical laws into its loss function. | Ideal for generating physically plausible synthetic data or making predictions when labeled data for rare events is scarce [20]. |

| Ilastik / Imaris Software | Interactive image analysis and visualization software for annotation and segmentation. | Used to create accurate ground truth labels, which are the foundation for training unbiased models [19]. |

Frequently Asked Questions (FAQs) and Troubleshooting Guides

FAQ: Data and Model Performance

FAQ 1: What are the most effective deep learning architectures for detecting malaria parasites in blood smear images, and how do their accuracies compare?

Based on recent studies, several architectures have been validated for malaria detection. The table below summarizes the performance of key models.

Table 1: Performance Comparison of Deep Learning Models for Malaria Detection

| Model Name | Reported Accuracy | Key Strengths | Use Case |

|---|---|---|---|

| ConvNeXt V2 Tiny (Remod) | 98.1% [21] | Combines convolutional efficiency with advanced feature extraction; suitable for resource-limited settings. | Thin blood smear image classification. |

| InceptionResNetV2 (with Adam optimizer) | 99.96% [3] | High accuracy on a multi-parasite dataset; hybrid model leveraging Inception and ResNet benefits. | Classification of various parasitic organisms. |

| YOLOv8 | 95% (parasites), 98% (leukocytes) [22] | Enables simultaneous detection and counting of parasites and leukocytes for parasitemia calculation. | Object detection in thick blood smear images. |

| Hybrid CapNet | Up to 100% (on specific datasets) [23] | Lightweight (1.35M parameters); excellent for parasite stage classification and spatial localization. | Multiclass classification and mobile diagnostics. |

| ResNet-50 | 81.4% [21] | A well-established baseline model; performance can be boosted with transfer learning. | General image classification for parasitized cells. |

Troubleshooting Guide: If your model's accuracy is lower than expected, consider the following:

- Problem: Model performance has plateaued.

- Solution: Implement or increase the intensity of data augmentation techniques. A study on malaria detection successfully expanded a dataset from 27,558 to over 606,000 images through augmentation, significantly boosting model robustness [21].

- Problem: Model is large, slow, and unsuitable for deployment in low-resource settings.

FAQ 2: How can I address severe class imbalance in my parasite image dataset?

Class imbalance is a common challenge. The primary solution is the use of data augmentation and algorithmic techniques.

- Experimental Protocol: Standard Data Augmentation for Parasite Images. A typical pipeline involves applying a series of geometric and photometric transformations to the minority class images to increase dataset diversity and size. The following workflow is commonly used [21] [3]:

Transformations to Apply:

Advanced Technique - Patch Stitching: For a more advanced approach, particularly in histopathology, Patch Stitching image Synthesis (PaSS) can be used. This method creates new synthetic images by stitching together random regions from different original images onto a blank canvas. This technique helps the model learn more generalized features and is highly effective for imbalanced datasets [25].

- Protocol (PaSSRec variant):

- Create a blank canvas `Z`.

- Randomly select `P` images from the minority class, `{x^c_i | i = 1, ..., P}`.

- Divide each selected image into `P` non-overlapping rectangular regions.

- Use the regions from one randomly selected image to define a corresponding grid on the canvas `Z`.

- Paste each region from the `P` different images into the corresponding grid cell on `Z`, creating a new, composite training sample [25].
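The stitching steps can be sketched in NumPy as follows. This is a simplified illustration rather than the implementation from [25]: it assumes all images share the same shape and uses a uniform `rows x cols` grid (so `P = rows * cols`) instead of randomly sized rectangles. The function name `patch_stitch` and its parameters are hypothetical.

```python
import numpy as np

def patch_stitch(images, rows, cols, rng=None):
    """Stitch one composite image from rows*cols source images of equal shape."""
    rng = np.random.default_rng() if rng is None else rng
    p = rows * cols
    if len(images) < p:
        raise ValueError("need at least rows*cols minority-class images")
    # Randomly select P distinct images from the minority class.
    chosen = rng.choice(len(images), size=p, replace=False)
    h, w = images[0].shape[:2]
    canvas = np.zeros_like(images[0])          # blank canvas Z
    # Grid boundaries (uniform grid: a simplifying assumption).
    ys = np.linspace(0, h, rows + 1).astype(int)
    xs = np.linspace(0, w, cols + 1).astype(int)
    for cell, idx in enumerate(chosen):
        r, c = divmod(cell, cols)
        y0, y1, x0, x1 = ys[r], ys[r + 1], xs[c], xs[c + 1]
        # Paste the matching region of the selected image into this grid cell.
        canvas[y0:y1, x0:x1] = images[idx][y0:y1, x0:x1]
    return canvas
```

Because every cell comes from a different source image, the composite keeps the minority-class label while presenting the model with a combination of regions it has not seen together before.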

FAQ: Experimental Implementation

FAQ 3: What is a standard experimental workflow for developing a deep learning-based parasite detection system?

A robust workflow integrates data preparation, model training, and validation. The following diagram outlines a generalizable protocol.

- Experimental Workflow for Parasite Detection

Troubleshooting Guide:

- Problem: The model converges slowly or performs poorly even after augmentation.

- Problem: Clinicians do not trust the model's predictions.

- Solution: Integrate Explainable AI (XAI) techniques into your workflow. Use tools like Grad-CAM or LIME to generate visual heatmaps that highlight the regions of the image the model used for its decision. This builds trust and verifies that the model is focusing on biologically relevant features (e.g., the parasite itself and not an artifact) [26] [23].

FAQ 4: What key reagents and computational tools are essential for these experiments?

Table 2: Research Reagent Solutions for AI-Based Parasite Detection

| Item Name | Type | Function/Explanation |

|---|---|---|

| Giemsa-stained Blood Smears | Biological Sample | The standard for preparing blood films for microscopic analysis of malaria and Leishmania parasites [21] [26]. |

| Formalin-Ethyl Acetate Centrifugation Technique (FECT) | Chemical Reagent | A concentration technique used as a gold standard for enriching and detecting intestinal parasites in stool samples [27]. |

| Merthiolate-Iodine-Formalin (MIF) | Staining Reagent | A fixation and staining solution for stool specimens, preserving and highlighting cysts and helminth eggs for microscopy [27]. |

| Pre-trained Models (ImageNet) | Computational Tool | Models like ConvNeXt, ResNet, and DINOv2, pre-trained on millions of images, provide a powerful starting point for feature extraction via transfer learning [21] [27]. |

| YOLO (You Only Look Once) | Computational Tool | An object detection algorithm (e.g., YOLOv8) ideal for locating and identifying multiple parasites and cells within a single image [22] [28]. |

| Grad-CAM | Computational Tool | An explainable AI technique that produces visual explanations for decisions from CNN-based models, crucial for clinical validation [26] [23]. |

FAQ: Cross-Domain Validation

FAQ 5: How have deep learning models been successfully applied for stool parasite examination?

Studies have demonstrated high performance in automating the detection of intestinal parasites, showing strong agreement with human experts.

Table 3: Model Performance in Stool Parasite Identification

| Model | Accuracy | Precision | Sensitivity (Recall) | Specificity | F1-Score |

|---|---|---|---|---|---|

| DINOv2-large [27] | 98.93% | 84.52% | 78.00% | 99.57% | 81.13% |

| YOLOv8-m [27] | 97.59% | 62.02% | 46.78% | 99.13% | 53.33% |

| YOLOv4-tiny [27] | High agreement with experts (Cohen's Kappa >0.90) | - | - | - | - |

Troubleshooting Guide:

- Problem: Your model performs well on helminth eggs but poorly on protozoan cysts.

- Solution: This is expected, as protozoans are smaller and have less distinct morphological features. The performance metrics in Table 3 show this trend. To mitigate this, ensure your training dataset has a sufficient number of accurately labeled protozoan examples. Focused augmentation on these classes and using models with high-resolution input capabilities can also help.

FAQ 6: Can you provide a case study on Leishmania detection?

A 2024 study introduced LeishFuNet, a deep learning framework for detecting Leishmania amastigotes in microscopic images [26].

- Methodology: The researchers employed a feature fusion transfer learning approach. They first trained four models (VGG19, ResNet50, MobileNetV2, DenseNet169) on a dataset from another infectious disease (COVID-19). These models were then used as new pre-trained models and fine-tuned on a dataset of 292 self-collected high-resolution microscopic images of skin scraping samples [26].

- Results: The fused model achieved an accuracy of 98.95%, a specificity of 98%, and a sensitivity of 100%. The use of Grad-CAM provided visual interpretations of the model's focus, aligning with clinicians' observations [26].

- Key Takeaway: This case study highlights the effectiveness of same-domain transfer learning (from another infectious disease) and model fusion when dealing with very small medical datasets.

A Technical Toolkit: From Basic Transformations to Generative AI for Parasite Images

Frequently Asked Questions

Q1: Why should I use classical image augmentation for my parasite image dataset?

Classical image augmentation is a fundamental regularization tool used to combat overfitting, a common problem where models memorize training examples but fail to generalize to new, unseen images [29]. This is especially critical when working with high-dimensional image inputs and large, over-parameterized deep networks typical in computer vision [29]. For parasite image datasets, which often suffer from class imbalance and limited data, augmentation artificially enlarges and diversifies your training set. This "fills out" the underlying data distribution, refines your model's decision boundaries, and significantly improves its ability to generalize [29].

Q2: What is the core difference between online and offline augmentation, and which should I use?

The core difference lies in when the transformations are applied and whether the augmented images are stored.

- Offline Augmentation: You create a completely new dataset that includes all original and transformed images, saving them to disk before training begins. This is not generally recommended unless you need to verify augmented image quality or control the exact images seen during training, as it drastically increases disk storage requirements [29].

- Online Augmentation: This is the most common method. Transformations are randomly applied to images in each epoch or batch as they are loaded for training. The model sees a different variation of an image every epoch, and nothing is saved to disk. This is efficient and provides nearly infinite data diversity [29].

For most parasite image experiments, online augmentation is the preferred and more efficient strategy.
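To make the online strategy concrete, here is a minimal NumPy sketch of a batch generator that applies a random transform every time an image is loaded. The names `online_batches` and `random_hflip` are illustrative and not part of any library; in practice the same idea is usually expressed through a framework's data-loading pipeline.

```python
import numpy as np

def random_hflip(image, rng, p=0.5):
    """Example transform: flip left-right with probability p."""
    return image[:, ::-1] if rng.random() < p else image

def online_batches(images, labels, transform, batch_size, epochs, seed=0):
    """Yield freshly augmented batches each epoch; nothing is saved to disk."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    for _ in range(epochs):
        order = rng.permutation(len(images))   # reshuffle every epoch
        for start in range(0, len(order), batch_size):
            idx = order[start:start + batch_size]
            # The transform is re-drawn for each image on each pass.
            batch = np.stack([transform(images[i], rng) for i in idx])
            yield batch, labels[idx]
```

The offline equivalent would run the same transform once over the whole dataset and write the results to disk, which fixes the set of variations the model can ever see.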

Q3: How do I know if my chosen augmentations are appropriate for parasite images?

Choosing appropriate transformations requires a blend of domain knowledge and experimentation [29]. Ask yourself:

- Is this transformation realistic? Does it generate images that could plausibly be encountered in a real-world diagnostic scenario? For instance, a vertically flipped (upside-down) parasite might not be realistic, whereas a slight rotation or brightness change certainly is [29].

- Does it preserve label semantics? Does the transformation change the fundamental nature of the parasite? For example, a 180-degree rotation might turn a '6' into a '9' in digit classification, but a slight rotation of a parasite does not change its species.

- Experiment and Validate: Use your validation set performance as the ultimate guide. Systematically test different augmentation combinations and select the one that maximizes performance on the validation set [29].

Q4: My model is struggling to learn after implementing augmentation. What could be wrong?

This is a common troubleshooting point. Several pitfalls could be at play:

- Excessively Strong Transformations: If your color jitter is too intense or rotations are too extreme, you may be distorting the images beyond recognition, destroying critical features needed to identify parasites [30].

- Violation of Label Integrity: You might be using a transformation that inadvertently changes the label. For example, if your dataset includes location-specific features, a flip might create an unrealistic image that confuses the model [29].

- Implementation Bugs: Double-check your code to ensure transformations are being applied correctly. A common mistake is misconfiguring the pipeline.

Start with mild transformations (e.g., small angle rotations, slight brightness adjustments) and gradually increase their strength while monitoring validation performance.

Troubleshooting Guides

Issue 1: Poor Model Generalization to Images with Different Microscopy Settings

Problem: Your model performs well on your clean training data but fails on new images taken under different microscopes, lighting conditions, or staining intensities.

Solution: Implement a robust Color Space Transformation pipeline. This simulates the lighting and color variations your model will encounter in production, forcing it to learn features that are invariant to these changes [30].

Experimental Protocol:

- Identify Key Parameters: Focus on brightness, contrast, and color jitter (hue/saturation).

- Define Parameter Ranges: Start with conservative, biologically plausible values.

- Brightness: Adjust with a relative factor between 0.8 and 1.2 [30].

- Contrast: Adjust with a similar factor of 0.8 to 1.2.

- Color Jitter: Apply very slight, random changes to hue and saturation (e.g., 0.1 on a scale of 0-1).

- Integrate Online: Apply these transformations randomly and online during training.

- Validate: Monitor performance on a validation set containing images from various sources to find the optimal augmentation strength.
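The brightness and contrast ranges above can be sketched as a single transform. This example assumes float images scaled to [0, 1] and deliberately omits hue and saturation jitter, which needs a color-space conversion. The function name `color_jitter` is illustrative.

```python
import numpy as np

def color_jitter(image, rng, brightness=(0.8, 1.2), contrast=(0.8, 1.2)):
    """Randomly rescale brightness and contrast of a float image in [0, 1]."""
    b = rng.uniform(*brightness)               # relative brightness factor
    c = rng.uniform(*contrast)                 # relative contrast factor
    out = image * b                            # brightness: scale intensities
    mean = out.mean()
    out = (out - mean) * c + mean              # contrast: stretch around the mean
    return np.clip(out, 0.0, 1.0)              # keep values in the valid range

rng = np.random.default_rng(0)
smear = rng.random((64, 64, 3))                # stand-in for a stained image
augmented = color_jitter(smear, rng)
```

Clipping is what causes detail loss at wide ranges, which is one reason to start with the conservative 0.8 to 1.2 interval.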

Issue 2: Model Overfitting to Specific Orientations in Images

Problem: The model becomes biased towards parasites appearing in a specific orientation, a common issue if the original dataset lacks rotational diversity.

Solution: Apply Geometric Transformations, specifically rotation and flipping, to build rotation-invariance into your model [29] [30].

Experimental Protocol:

- Assess Dataset: Check if your parasite images have a natural, consistent orientation. If not, rotation is highly applicable.

- Choose Technique:

- Rotation: Use 90-degree increments (90°, 180°, 270°) to avoid interpolation artifacts. For finer control, use small, arbitrary angles (e.g., ±15°) [30].

- Flipping: Horizontal flipping is generally safe and effective. Use vertical flipping with caution, as it may not be realistic for your dataset [30].

- Implement: Apply these transformations randomly during training. For every batch, each image has a random chance of being rotated or flipped.
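A sketch of the 90-degree rotation and horizontal flip described above, using only NumPy. It assumes square image patches so that rotated images keep the same shape within a batch. The function name `random_rot_flip` and the default flip probability are illustrative.

```python
import numpy as np

def random_rot_flip(image, rng, p_flip=0.5):
    """Rotate by a random multiple of 90 degrees, then maybe flip horizontally."""
    k = int(rng.integers(0, 4))                # 0, 1, 2 or 3 quarter turns
    out = np.rot90(image, k, axes=(0, 1))      # exact: no interpolation needed
    if rng.random() < p_flip:
        out = out[:, ::-1]                     # horizontal flip
    return np.ascontiguousarray(out)
```

Quarter-turn rotations only rearrange pixels, so no intensity values are altered. Arbitrary small angles (e.g., ±15°) require an interpolating routine and a decision about how to fill the corners.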

Quantitative Data for Augmentation Techniques

Table 1: Summary of Classical Image Augmentation Techniques for Parasite Datasets

| Technique Category | Specific Method | Key Parameters | Primary Benefit | Considerations for Parasite Imaging |

|---|---|---|---|---|

| Geometric Transformations | Rotation [29] [30] | Angle (e.g., 90°, ±15°) | Builds orientation invariance | Avoid if orientation is diagnostically relevant. |

| Geometric Transformations | Flipping (Horizontal) [29] [30] | Probability of flip (e.g., 0.5) | Builds orientation invariance | Ensure the flipped parasite is biologically plausible. |

| Geometric Transformations | Scaling [29] | Zoom ratio (e.g., 0.9-1.1) | Improves scale invariance | Avoid excessive zoom that crops out key structures. |

| Color Space Transformations | Brightness Adjustment [30] | Relative factor (e.g., 0.8-1.2) | Robustness to lighting changes | Use a narrow range to avoid clipping details. |

| Color Space Transformations | Contrast Modification [30] | Contrast factor (e.g., 0.8-1.2) | Enhances feature visibility | Can help highlight subtle staining variations. |

| Color Space Transformations | Color Jittering [31] [30] | Hue/Saturation shifts | Robustness to stain variations | Apply minimal jitter to avoid unrealistic colors. |

| Advanced / Regularization | Cutout / Random Erasing [29] | Patch size, number | Forces model to use multiple features | Can help the model learn from partial views. |

Workflow Diagram for Implementing Classical Augmentation

Title: Online Image Augmentation Workflow for Model Training

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Software Tools for Implementing Image Augmentation

| Tool Name | Type | Primary Function | Application Note |

|---|---|---|---|

| PyTorch Torchvision | Library | Provides a wide array of composable image transformations for online augmentation [29]. | Ideal for building integrated, high-performance training pipelines. |

| TensorFlow tf.image | Library | Offers similar functions to Torchvision for applying transformations to tensors [29]. | Seamlessly integrates with the TensorFlow and Keras ecosystem. |

| imgaug | Python Library | A dedicated library offering a vast collection of augmentation techniques, including complex ones [29]. | Excellent for prototyping complex sequences of augmentations. |

| Encord Active | Data Analysis Tool | Helps explore your dataset, visualize image attribute distributions, and assess data quality [29]. | Use before augmentation to identify dataset gaps and biases. |

Synthetic Data Generation with SMOTE and Advanced Variants

FAQs: Addressing Common Research Challenges

Q1: What is SMOTE and why is it a preferred technique for handling class imbalance in medical image datasets like parasite detection?

SMOTE (Synthetic Minority Over-sampling Technique) is an algorithm that addresses class imbalance by generating synthetic instances of the minority class rather than simply duplicating existing samples [32]. It operates by selecting a minority class instance and finding its k-nearest neighbors within the same class. It then creates new synthetic data points through interpolation between the selected instance and its randomly chosen neighbors [33] [32]. This technique is particularly valuable for parasite image datasets because it generates more diverse synthetic samples compared to random oversampling, which helps improve model generalization and reduces overfitting—a critical concern when working with limited medical imaging data [33] [32].
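The interpolation step can be sketched directly in NumPy. This is a minimal illustration of the idea, not the imbalanced-learn implementation. It operates on feature vectors (not raw pixels), needs at least two minority samples, and the function name `smote` and its parameters are chosen here for illustration.

```python
import numpy as np

def smote(X_min, n_new, k=5, rng=None):
    """Generate n_new synthetic minority samples by neighbor interpolation."""
    rng = np.random.default_rng() if rng is None else rng
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    k = min(k, n - 1)
    # Pairwise Euclidean distances within the minority class.
    d = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)                # a point is not its own neighbor
    neighbors = np.argsort(d, axis=1)[:, :k]   # indices of the k nearest neighbors
    base = rng.integers(0, n, size=n_new)      # instance each sample starts from
    nb = neighbors[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))               # interpolation factor in [0, 1)
    # Each synthetic point lies on the segment between an instance and a neighbor.
    return X_min[base] + gap * (X_min[nb] - X_min[base])
```

Because every synthetic point is a convex combination of two real minority samples, the new data never leaves the region spanned by the existing class, which is both the method's strength and the source of the density issues discussed in the next question.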

Q2: My SMOTE-enhanced model for parasite recognition is overfitting. What advanced SMOTE variants can help mitigate this?

Standard SMOTE can indeed cause overfitting, particularly by generating excessive synthetic samples in high-density regions of the minority class [33]. Several advanced variants have been specifically developed to address this issue:

- ISMOTE (Improved SMOTE): Expands the sample generation space beyond simple linear interpolation between two points. It generates a base sample and then uses random quantities to create new samples around the original samples, effectively alleviating density distortion and reducing overfitting risk [33].

- Dirichlet ExtSMOTE: Uses the Dirichlet distribution to create synthetic samples as weighted averages of neighboring instances, which helps mitigate the influence of outliers and abnormal instances often present in real-world medical datasets [34].

- Borderline-SMOTE: Identifies and selectively oversamples minority instances that are on the decision boundary (considered "harder to learn"), which helps prevent overfitting in low-density regions [33].

Q3: How do I handle abnormal instances or outliers in my minority class when using SMOTE for parasite image data?

Abnormal minority instances (outliers) significantly degrade standard SMOTE performance by propagating synthetic samples in non-representative regions [34]. Specialized SMOTE extensions directly address this challenge:

- Distance ExtSMOTE: Uses inverse distances to assign lower weights to distant neighbors during synthetic sample generation, reducing the influence of outliers [34].

- BGMM SMOTE: Employs Bayesian Gaussian Mixture Models to identify and account for the underlying data distribution structure before generating synthetic samples, making it robust to abnormal instances [34].

- FCRP SMOTE: Utilizes a Bayesian non-parametric approach to model feature correlations and generate higher quality synthetic samples in the presence of outliers [34].

Experimental results demonstrate that these methods, particularly Dirichlet ExtSMOTE, achieve substantial improvements in F1 score, MCC, and PR-AUC compared to standard SMOTE on datasets containing abnormal instances [34].

Q4: What are the practical steps for implementing SMOTE in a parasite image classification pipeline?

A basic implementation protocol involves these key steps [32]:

- Data Preparation: Load and preprocess your parasite image dataset. Ensure images are normalized and formatted consistently.

- Feature Extraction: For traditional machine learning models, extract relevant features from the images (e.g., using convolutional neural networks as feature extractors).

- Apply SMOTE: Use the `imblearn` library in Python to apply SMOTE to the extracted feature set. Specify the desired sampling strategy (e.g., `sampling_strategy='auto'` to balance the classes).

- Model Training: Train your classifier (e.g., Random Forest, SVM) on the balanced dataset.

- Validation: Evaluate model performance using cross-validation and metrics appropriate for imbalanced data (e.g., F1-score, G-mean, AUC).

For advanced implementations, consider integrating SMOTE directly within deep learning frameworks using specialized libraries or custom data generators that apply the oversampling during batch generation.

Troubleshooting Guides

Problem: Poor Model Generalization After Applying SMOTE

Potential Causes and Solutions:

Cause 1: Overamplification of Noise. Standard SMOTE can generate noisy samples if interpolating between distant or borderline minority instances [33].

Solution: Implement Borderline-SMOTE or one of the abnormal-instance-resistant variants (Distance ExtSMOTE, Dirichlet ExtSMOTE) that include mechanisms to identify and downweight problematic instances during sample generation [33] [34].

Cause 2: Ignoring Data Distribution. The linear interpolation of vanilla SMOTE may not respect the underlying data manifold [33].

Solution: Use methods that incorporate local density and distribution characteristics. ISMOTE adaptively expands the synthetic sample generation space to better preserve original data distribution patterns [33]. Alternatively, cluster-based approaches like K-Means SMOTE can first identify dense regions before oversampling [33].

Cause 3: Inappropriate Evaluation Metrics. Using accuracy alone on imbalanced test sets can mask poor minority class performance.

Solution: Always employ comprehensive evaluation metrics. Research shows that advanced SMOTE variants can improve F1-score by up to 13.07%, G-mean by 16.55%, and AUC by 7.94% compared to standard approaches [33]. Track these metrics rigorously during validation.
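A small worked example shows why accuracy alone is misleading. The confusion-matrix counts below are hypothetical and chosen only to illustrate the effect. The function name `imbalance_metrics` is likewise illustrative.

```python
import math

def imbalance_metrics(tp, fn, fp, tn):
    """Return accuracy, F1 and G-mean for the positive (minority) class."""
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    g_mean = math.sqrt(recall * specificity)   # balances both class recalls
    return accuracy, f1, g_mean

# Hypothetical test set: 950 uninfected cells, 50 infected cells,
# and a model that finds only 10 of the infected ones.
acc, f1, g = imbalance_metrics(tp=10, fn=40, fp=5, tn=945)
print(f"accuracy={acc:.3f}  F1={f1:.3f}  G-mean={g:.3f}")
```

In this scenario accuracy is 0.955 even though the model misses 80% of infected cells, while F1 (about 0.31) and G-mean (about 0.45) expose the problem.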

Problem: High Computational Cost and Processing Time

Potential Causes and Solutions:

Cause 1: Large Dataset Size. SMOTE operations on high-dimensional data (like image features) can become computationally expensive [32].

Solution: For very large datasets, consider using hybrid approaches that combine selective SMOTE application with random undersampling of the majority class. This maintains balance while reducing overall dataset size [33].

Cause 2: Complex SMOTE Variant Algorithm. Some advanced variants (BGMM SMOTE, FCRP SMOTE) involve additional modeling steps that increase computational overhead [34].

Solution: For initial experiments, begin with simpler variants like Distance ExtSMOTE or Dirichlet ExtSMOTE, which offer good performance improvements with moderate computational increases compared to more complex Bayesian approaches [34].

Experimental Protocols and Performance Data

Comparative Performance of SMOTE Variants

Table 1: Classifier Performance Improvement with Advanced SMOTE Techniques [33]

| Evaluation Metric | Average Relative Improvement | Significance Level |

|---|---|---|

| F1-Score | 13.07% | p < 0.01 |

| G-Mean | 16.55% | p < 0.01 |

| AUC | 7.94% | p < 0.05 |

Table 2: Protocol for Comparing SMOTE Variants on Parasite Image Datasets

| Experimental Step | Protocol Details | Key Parameters |

|---|---|---|

| Dataset Preparation | Use public parasite image datasets (e.g., NIH Malaria dataset). Define train/test splits with original imbalance. | Imbalance Ratio (IR), Number of folds for cross-validation |

| Baseline Establishment | Train classifiers (RF, XGBoost, CNN) on original imbalanced data without SMOTE. | F1-Score, G-mean, AUC on test set |

| SMOTE Application | Apply standard SMOTE and selected variants (ISMOTE, Dirichlet ExtSMOTE, etc.) to training data only. | k-nearest neighbors, sampling strategy |

| Model Training & Evaluation | Train identical classifiers on each SMOTE-enhanced training set. Evaluate on original (unmodified) test set. | Use statistical tests (e.g., paired t-test) to confirm significance of performance differences |

Methodology for Dirichlet ExtSMOTE Implementation

Dirichlet ExtSMOTE enhances SMOTE by generating synthetic samples as weighted averages of multiple neighboring instances, using weights drawn from a Dirichlet distribution. This approach creates more diverse samples and reduces outlier influence [34].

Step-by-Step Protocol:

- Input: Minority class instances \( P \), number of nearest neighbors \( k \), oversampling amount \( N \)

- For each instance \( x_i \) in \( P \):

- Find the \( k \)-nearest neighbors of \( x_i \) from \( P \)

- For \( j = 1 \) to \( N \):

- Sample a weight vector \( \alpha \) from a Dirichlet distribution

- Select a random neighbor \( x_{z_i} \) from the \( k \)-nearest neighbors

- Compute synthetic sample: \( s_j = \alpha_1 x_i + \alpha_2 x_{z_i} \) (with \( \alpha_1 + \alpha_2 = 1 \))

- End For

- End For

- Output: Synthetic minority class samples \( S \)
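The protocol above translates almost line by line into NumPy. This sketch follows the two-weight form given in the steps and is not the reference implementation from [34]. The `concentration` parameter of the Dirichlet distribution is an assumption, since the protocol does not specify it, and the function name is illustrative.

```python
import numpy as np

def dirichlet_ext_smote(P, k=5, N=1, concentration=(1.0, 1.0), rng=None):
    """Generate N synthetic samples per minority instance with Dirichlet weights."""
    rng = np.random.default_rng() if rng is None else rng
    P = np.asarray(P, dtype=float)
    k = min(k, len(P) - 1)
    # k-nearest neighbors of every instance within the minority class.
    d = np.linalg.norm(P[:, None, :] - P[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)
    neighbors = np.argsort(d, axis=1)[:, :k]
    S = []
    for i, x_i in enumerate(P):
        for _ in range(N):
            alpha = rng.dirichlet(concentration)    # alpha_1 + alpha_2 = 1
            x_z = P[rng.choice(neighbors[i])]       # random neighbor of x_i
            S.append(alpha[0] * x_i + alpha[1] * x_z)
    return np.array(S)
```

With `concentration=(1.0, 1.0)` the weights are uniform on the segment between the two points. Larger, equal values concentrate samples near the midpoint, and a longer concentration vector would be needed to average over more than one neighbor.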

Methodology for ISMOTE Implementation

ISMOTE modifies the spatial constraints for synthetic sample generation to expand the feasible solution space and better preserve local data distribution [33].

Step-by-Step Protocol:

- Input: Minority class instances \( X \), number of nearest neighbors \( k \)

- For each minority instance \( x_i \) in \( X \):

- Find the \( k \)-nearest neighbors \( NN(x_i) \)

- For \( j = 1 \) to the number of synthetic samples to generate per instance:

- Randomly select a neighbor \( x_z \) from \( NN(x_i) \)

- Generate a base sample \( x_b \) between \( x_i \) and \( x_z \)

- Compute the Euclidean distance \( d \) between \( x_i \) and \( x_z \)

- Multiply \( d \) by a random number \( r \) between 0 and 1: \( d' = d \times r \)

- Generate synthetic sample \( x_{new} \) by adding or subtracting \( d' \) based on the position of \( x_b \)

- End For

- End For

- Output: Enhanced minority class dataset

SMOTE Algorithm Selection Workflow

The Scientist's Toolkit: Essential Research Reagents

Table 3: Key Computational Tools for SMOTE Research on Parasite Images

| Tool/Resource | Function | Application Context |

|---|---|---|

| imbalanced-learn (imblearn) | Python library providing SMOTE and multiple variants | Primary implementation framework for traditional machine learning models |

| Dirichlet ExtSMOTE | Advanced SMOTE variant resistant to outliers | Handling parasite datasets with potential labeling errors or abnormal cells |

| ISMOTE | Density-aware SMOTE variant expanding generation space | Preventing overfitting in high-density regions of parasite image features |

| Public Parasite Datasets | Standardized image collections (e.g., NIH Malaria dataset) | Benchmarking and comparative evaluation of different SMOTE approaches |

| F1-Score & G-Mean | Performance metrics for imbalanced classification | Objective evaluation beyond accuracy, focusing on minority class recognition |

| Statistical Testing Framework | Paired t-tests or Wilcoxon signed-rank tests | Validating significance of performance differences between SMOTE variants |

Generative Adversarial Networks (GANs) and CycleGANs for Realistic Synthetic Parasites

Core Concepts FAQ

What are GANs and CycleGANs, and why are they suitable for parasite image augmentation?

Generative Adversarial Networks (GANs) are a class of deep learning frameworks where two neural networks, a generator (G) and a discriminator (D), are trained in competition. The generator creates synthetic images, while the discriminator evaluates them against real images. This adversarial process forces the generator to produce increasingly realistic outputs [35]. CycleGAN is a specialized variant that enables unpaired image-to-image translation. It uses a cycle-consistency loss to learn a mapping between two image domains (e.g., stained and unstained parasites) without requiring perfectly matched image pairs for training [36] [35]. This is particularly suitable for parasite research because it can generate diverse synthetic parasite images from limited data, effectively addressing class imbalance in datasets.

How can CycleGANs help with the problem of imbalanced parasite datasets?

Imbalanced datasets, where certain parasite species or life stages are underrepresented, can severely bias diagnostic models. CycleGANs mitigate this by [36]:

- Generating High-Fidelity Synthetic Samples: They can produce realistic images of the underrepresented parasite classes.

- Creating Stylistic Variations: They can translate parasites from one staining technique to another (e.g., H&E to Giemsa) or simulate different imaging conditions, thereby increasing the stylistic diversity of the training set without altering the core parasitic morphology.

- Enabling Unpaired Translation: Since collecting paired images of the same parasite under different stains is practically impossible, CycleGAN's ability to learn from unpaired sets is a significant advantage.

Troubleshooting Guide

Issue 1: Unrealistic or Blurry Synthetic Parasites

Problem: The generated parasite images lack clear morphological details (e.g., fuzzy cell walls, indistinct nuclei) or appear artificially blurred.

| Potential Cause | Solution |

|---|---|

| Insufficient or Low-Quality Training Data | Curate a higher-quality dataset. Ensure original images are high-resolution and artifacts are minimized. A small, clean dataset is better than a large, noisy one. |

| Inappropriate Loss Function | Supplement the standard adversarial and cycle-consistency losses. Incorporate a Feature Matching Loss or VGG Loss (perceptual loss) to ensure the generated images match the real ones in feature space, preserving textural details [35]. |

| Generator Architecture Limitations | Consider modifying the generator network. Replacing a standard ResNet with a U-Net architecture, which uses skip connections to share low-level information (like edges) between the input and output, can help preserve fine structural details [36] [35]. |

Issue 2: Training Instability and Mode Collapse

Problem: The model fails to converge, or the generator produces a limited variety of parasites (e.g., only one species).

Solutions:

- Adjust Loss Weights: The cycle-consistency loss (`lambda_cyc`) and identity loss (`lambda_id`) are critical hyperparameters. For tasks requiring high color and structural fidelity (like distinguishing between parasite species), appropriately increasing `lambda_id` can help maintain color consistency in the generated images [37].

- Use Advanced Normalization: Replace Batch Normalization layers with Instance Normalization in the generator. This is less dependent on batch statistics and improves training stability for image translation tasks [37].

- Implement a Replay Buffer: Cache previously generated images and occasionally use them to train the discriminator. This prevents the discriminator from overfitting to the generator's most recent outputs and stabilizes the training dynamics [37].
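
The replay buffer idea can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation from [37]: the class name `ReplayBuffer`, the method `push_and_pop`, the pool size of 50, and the 50% swap probability are all assumptions chosen for the example, and the "images" here can be any Python objects (in practice they would be tensors).

```python
import random


class ReplayBuffer:
    """Fixed-size pool of previously generated images for discriminator training."""

    def __init__(self, max_size=50, seed=None):
        if max_size <= 0:
            raise ValueError("max_size must be positive")
        self.max_size = max_size  # assumed pool size, tune for your dataset
        self.items = []
        self.rng = random.Random(seed)

    def push_and_pop(self, batch):
        """Return a batch of the same length, mixing new and cached images."""
        out = []
        for image in batch:
            if len(self.items) < self.max_size:
                # Buffer not full yet: cache the image and pass it through.
                self.items.append(image)
                out.append(image)
            elif self.rng.random() < 0.5:
                # Half the time, hand back an older image and cache the new one.
                idx = self.rng.randrange(self.max_size)
                out.append(self.items[idx])
                self.items[idx] = image
            else:
                # Otherwise use the fresh image and leave the buffer unchanged.
                out.append(image)
        return out


# Toy usage with strings standing in for generated images.
buffer = ReplayBuffer(max_size=3, seed=0)
first_batch = buffer.push_and_pop(["a", "b", "c"])
second_batch = buffer.push_and_pop(["d", "e", "f", "g"])
```

In a training loop, the discriminator would be fed `buffer.push_and_pop(fake_images)` instead of the raw generator output, so it sees a mix of current and older synthetic parasites.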

Issue 3: Color and Stain Inconsistencies

Problem: The synthetic parasite images have incorrect or unstable color distributions, making them unreliable for stain-dependent diagnostic tasks.

Solutions:

- Leverage Identity Loss: This loss forces the generator to leave an image unchanged if it already belongs to the target domain. For example, when translating to a Giemsa-stained domain, feeding a real Giemsa image into the generator should yield the same image. This strongly encourages color consistency [37].

- Standardize Data Preprocessing: Ensure all input images undergo the same normalization process (e.g., resizing with BICUBIC interpolation, normalizing pixel values to `[-1, 1]`) to create a consistent data distribution [37].

- Adopt an Interactive Framework: For highly complex translations, consider advanced architectures like the Cycle-Interactive GAN (CIGAN). This framework allows the enhancement and degradation generators to interact during training, using a Low-light Guided Transform (LGT) to better transfer color and illumination distributions from the target domain, which can be adapted for stain transfer [38].
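
To make the identity loss concrete, the sketch below computes it as a mean absolute (L1) difference on a toy image stored as a flat list of pixel values. The two "generators" are hypothetical stand-ins written for this example, not trained networks, and the pixel values are assumed to be already scaled to `[-1, 1]`.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized flat pixel lists."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def identity_loss(generator, target_domain_image):
    """L1 penalty for changing an image that is already in the target domain."""
    return l1_distance(generator(target_domain_image), target_domain_image)


# Hypothetical generators that map into the Giemsa domain.
def faithful_generator(image):
    return list(image)  # leaves a real Giemsa image untouched


def color_shifting_generator(image):
    return [min(1.0, p + 0.2) for p in image]  # brightens every pixel


giemsa_pixels = [-0.5, 0.0, 0.25, 0.5]  # toy image, values in [-1, 1]
loss_faithful = identity_loss(faithful_generator, giemsa_pixels)
loss_shifted = identity_loss(color_shifting_generator, giemsa_pixels)
```

The faithful generator incurs no penalty, while the color-shifting one is penalized in proportion to the shift, which is why a larger identity weight discourages stain drift.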

Experimental Protocols for Parasite Image Augmentation

Protocol 1: Basic Data Augmentation with CycleGAN

This protocol outlines the steps to generate synthetic parasite images to balance a dataset.

- Data Curation: Collect two unpaired sets of images: `Domain A` (e.g., under-represented parasite species) and `Domain B` (e.g., well-represented species or background tissue).

- Preprocessing: Resize all images to a uniform resolution (e.g., 256x256 pixels). Apply normalization, scaling pixel values to the range `[-1, 1]` [37].

- Model Configuration:

- Training: Train the model until the loss of the discriminator plateaus and visual inspection confirms the quality of generated images.

- Synthesis and Validation: Generate synthetic `Domain A` parasites. A parasitology expert must blindly validate these images against real images before they are added to the training set.
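
The pixel scaling in the preprocessing step can be sketched as follows. This is a minimal example that assumes 8-bit input images and works on flat lists of intensities; the function names `to_model_range` and `to_uint8` are illustrative, and a real pipeline would apply the same arithmetic to image arrays after resizing.

```python
def to_model_range(pixels):
    """Map 8-bit intensities in [0, 255] to floats in [-1, 1]."""
    return [p / 127.5 - 1.0 for p in pixels]


def to_uint8(values):
    """Map generator outputs in [-1, 1] back to 8-bit intensities."""
    return [max(0, min(255, round((v + 1.0) * 127.5))) for v in values]


raw_pixels = [0, 64, 128, 255]
scaled = to_model_range(raw_pixels)
restored = to_uint8(scaled)
```

The inverse mapping is what you would apply to generator outputs before handing the synthetic images to an expert for review.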

Protocol 2: Cross-Stain Augmentation for Improved Generalization

This protocol uses CycleGAN to translate images between different staining techniques, making models more robust to laboratory variations.

- Data Curation: Collect unpaired images from `Domain X` (e.g., Giemsa-stained blood smears) and `Domain Y` (e.g., H&E-stained tissue sections).

- Preprocessing: Same as Protocol 1.

- Model Configuration:

- Enhanced Loss Function: Use a combination of adversarial loss, cycle-consistency loss, identity loss, and a VGG-based perceptual loss to preserve cellular structure during stain transfer [35].

- Training and Evaluation:

- Train the model to learn the mapping between `X` and `Y`.

- Evaluate the model's performance by training a classifier on a mix of real and synthetic cross-stain images and testing it on a held-out set of real images from a different laboratory.

Workflow Visualization

Diagram 1: Standard CycleGAN Architecture for Parasite Augmentation

Diagram 2: Parasite Image Augmentation Workflow

Performance Metrics and Quantitative Results

The following table summarizes key quantitative findings from relevant studies on using GANs for data augmentation in medical and optical imaging.

Table 1: Impact of GAN-based Augmentation on Model Performance

| Application Domain | Model Used | Key Metric | Baseline Performance | Performance with GAN Augmentation | Notes |

|---|---|---|---|---|---|

| Alzheimer's Disease Diagnosis (MRI) [36] | CNN (ResNet-50) | F1 Score | 89% | 95% | CycleGAN was used to generate synthetic MRI scans, raising the classifier's F1 score by 6 percentage points. |

| Abdominal Organ Segmentation (CT) [36] | Not Specified | Generalizability | Poor on non-contrast CT | Improved | CycleGAN created synthetic non-contrast CT from contrast-enhanced scans, improving model robustness. |

| Nighttime Vehicle Detection [36] | YOLOv5 | Detection Accuracy | Low (night images) | Increased | An improved CycleGAN (with U-Net) translated night to day, simplifying feature extraction for the detector. |

| Turbid Water Image Enhancement [36] | Improved CycleGAN | Image Clarity & Interpretability | Low | Effectively Enhanced | A new generator (BSDKNet) and loss function (MLF) improved enhancement precision and efficiency. |

| Unsupervised Low-Light Enhancement (CIGAN) [38] | CIGAN | PSNR/SSIM | Lower on paired methods | Superior to other unpaired methods | The model simultaneously addressed illumination, contrast, and noise in a robust, unpaired manner. |

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Components for a CycleGAN-based Parasite Augmentation Pipeline

| Component | Function in the Experiment | Key Considerations for Parasite Imaging |

|---|---|---|

| CycleGAN Framework | The core engine for unpaired image-to-image translation. | Choose an implementation (e.g., PyTorch-GAN) that allows easy modification of generators and loss functions [37]. |

| U-Net Generator | A type of generator network that uses skip connections. | Crucial for preserving the fine, detailed morphological structures of parasites (e.g., nuclei, flagella) during translation [36] [35]. |

| Multi-Scale Discriminator | A discriminator that judges images at multiple resolutions. | Helps ensure that both the overall structure and local textures of the generated parasite images are realistic [35]. |

| Instance Normalization | A normalization layer used in the generator. | Preferred over Batch Normalization for style transfer tasks as it leads to more stable training and better results [37]. |

| Adversarial Loss | The core GAN loss that drives the competition between generator and discriminator. | Ensures the overall realism of the generated images. |

| Cycle-Consistency Loss | Enforces that translating an image to another domain and back should yield the original image. | Preserves the structural content (the parasite's shape) during translation [35]. |

| Identity Loss | Encourages the generator to be an identity mapping if the input is already from the target domain. | Critical for maintaining color and stain fidelity in the generated parasite images [37]. |

| VGG/Perceptual Loss | A loss based on a pre-trained network (e.g., VGG) that compares high-level feature representations. | Helps in preserving the perceptually important features of the parasite, leading to more natural-looking images [35]. |
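
For reference, the way the adversarial, cycle-consistency, and identity terms in Table 2 combine can be written in the standard CycleGAN form. The notation is an assumption introduced here, not taken from the cited sources: $G: X \to Y$ and $F: Y \to X$ are the two generators, $D_X$ and $D_Y$ the discriminators, and $\lambda_{cyc}$, $\lambda_{id}$ the loss weights. A perceptual (VGG) term, when used, would be added as a further weighted term.

```latex
\begin{aligned}
\mathcal{L}_{cyc}(G, F) &= \mathbb{E}_{x \sim X}\big[\lVert F(G(x)) - x \rVert_1\big]
                         + \mathbb{E}_{y \sim Y}\big[\lVert G(F(y)) - y \rVert_1\big] \\
\mathcal{L}_{id}(G, F)  &= \mathbb{E}_{y \sim Y}\big[\lVert G(y) - y \rVert_1\big]
                         + \mathbb{E}_{x \sim X}\big[\lVert F(x) - x \rVert_1\big] \\
\mathcal{L}_{total}     &= \mathcal{L}_{GAN}(G, D_Y) + \mathcal{L}_{GAN}(F, D_X)
                         + \lambda_{cyc}\,\mathcal{L}_{cyc}(G, F)
                         + \lambda_{id}\,\mathcal{L}_{id}(G, F)
\end{aligned}
```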

Frequently Asked Questions (FAQs)

Q1: My model is performing well on my primary dataset but fails on external validation data. How can I improve its generalizability?

A: This is a common sign of overfitting to the specifics of your initial dataset. To improve generalizability:

- Leverage Advanced Data Augmentation: Implement sophisticated techniques like the modified copy-paste augmentation [2]. This method strategically duplicates and pastes instances of minority class objects (e.g., rare parasites) into training images, directly addressing class imbalance and forcing the model to learn more robust features rather than dataset-specific noise.

- Incorporate Robust Regularization: Utilize label smoothing and optimizers like AdamW, which were key to the strong generalizability demonstrated by a ConvNeXt model for malaria detection [21]. These techniques prevent the model from becoming overconfident on the training data.

- Adopt a Modern Architecture: Consider using a multi-branch ConvNeXt [39] or similar architecture. These models extract and integrate diverse features (e.g., via global average, max, and attention-weighted pooling), which enhances their ability to identify subtle pathological features across different data sources.
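
Label smoothing, mentioned above as a regularizer, replaces the one-hot training target with a slightly softened distribution. The sketch below uses the common formulation in which a fraction `epsilon` of the probability mass is spread uniformly over all classes; the function name and the value `epsilon=0.1` are assumptions for illustration, not settings reported in [21].

```python
def smooth_labels(true_class, num_classes, epsilon=0.1):
    """Return a smoothed target distribution for one training sample."""
    if not 0 <= true_class < num_classes:
        raise ValueError("true_class must be a valid class index")
    if not 0.0 <= epsilon < 1.0:
        raise ValueError("epsilon must be in [0, 1)")
    # Every class receives an equal share of the smoothing mass.
    target = [epsilon / num_classes] * num_classes
    # The true class keeps the remaining probability on top of its share.
    target[true_class] += 1.0 - epsilon
    return target


# Four hypothetical classes; the sample belongs to class index 1.
smoothed = smooth_labels(true_class=1, num_classes=4, epsilon=0.1)
```

Because the target never reaches exactly 1.0, the model is not rewarded for pushing its logits to extremes on the training data.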

Q2: For a new project on parasite detection with a highly imbalanced dataset, should I choose ResNet or ConvNeXt as my backbone?

A: The choice depends on your specific priorities, as both have proven effective in medical imaging. The following table summarizes a comparative analysis to guide your decision:

| Feature | ResNet | ConvNeXt |

|---|---|---|

| Core Innovation | Skip connections to solve vanishing gradient [40] [41] | Modernized CNN using design principles from Vision Transformers [42] |

| Key Strength | Proven, reliable feature extraction; excellent for transfer learning [40] [43] | State-of-the-art accuracy on various benchmarks, including medical tasks [39] [21] [42] |

| Computational Efficiency | High and well-optimized [42] | High, retains CNN efficiency while matching ViT performance [21] [42] |

| Sample Performance (Malaria Detection) | ResNet50: 81.4% accuracy [21] | ConvNeXt V2 Tiny: 98.1% accuracy [21] |

| Recommended Use Case | A robust starting point with extensive community support and pre-trained models. | Projects aiming for top-tier accuracy and willing to use a more modern architecture. |

Q3: I have a very small dataset for my specific parasite species. Can transfer learning still work?

A: Yes, this is a primary strength of transfer learning. The methodology is effectively outlined in the experimental workflow below:

The process involves taking a model pre-trained on a massive dataset like ImageNet and repurposing it for your task. As demonstrated in a malaria detection study, you can either:

- Use the pre-trained model as a fixed feature extractor, and train a new classifier on top using your data.

- Fine-tune the pre-trained model by continuing training on your small dataset, which allows the features to adapt specifically to parasites [21].

Q4: How can I understand why my model made a specific prediction to build trust in its diagnostics?

A: Implementing eXplainable AI (XAI) techniques is crucial for building trust and verifying that your model is learning biologically relevant features.

- Grad-CAM Visualizations: A Hybrid Capsule Network for malaria diagnosis used Grad-CAM to generate heatmaps that visually confirm the model focuses on clinically significant parasite regions within the blood smear images, not irrelevant background artifacts [44].

- LLM and LIME Integration: Research into ConvNeXt for malaria detection also highlights the use of tools like LIME and LLaMA to provide both visual and textual interpretations of the model's decision-making process, enhancing transparency for clinicians [21].

Troubleshooting Guides

Problem: Model Performance is Biased Towards Majority Classes

Symptoms: High overall accuracy, but poor recall for classes with fewer image samples (e.g., rare parasite species or specific life-cycle stages).

Solution Steps:

- Diagnose the Imbalance: Quantify the class distribution in your dataset. The core of this issue is often a significant disparity in instance counts [2].

- Apply Hybrid Data-Level Solutions:

- Use Modified Copy-Paste Augmentation: This technique directly addresses instance imbalance by creating new training samples for minority classes. It copies annotations of rare objects and pastes them onto other training images, effectively oversampling the minority class without exacerbating foreground-background imbalance [2].

- Combine with Data Augmentation: Augment your minority class images with standard geometric and color transformations (rotation, flipping, brightness changes) to further increase diversity [21].

- Implement Classifier-Level Adjustments:

- Weight-Balancing Loss Function: Modify your loss function (e.g., weighted cross-entropy) to assign a higher cost for misclassifying instances from the minority classes. This tells the model to pay more attention to these under-represented examples [2].
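
The diagnosis and loss-weighting steps above can be sketched together. This is a minimal example under stated assumptions: weights follow the inverse-frequency rule `n_samples / (n_classes * class_count)`, the class names and the 90/10 split are hypothetical, and predictions are plain dictionaries of class probabilities rather than model outputs.

```python
import math
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}


def weighted_cross_entropy(probs, labels, weights):
    """Weighted mean of -log p(true class), normalized by the total weight."""
    total, weight_sum = 0.0, 0.0
    for p, y in zip(probs, labels):
        w = weights[y]
        total += -w * math.log(max(p[y], 1e-12))  # clamp to avoid log(0)
        weight_sum += w
    return total / weight_sum


# Hypothetical imbalanced dataset: 90 majority samples, 10 minority samples.
labels = ["uninfected"] * 90 + ["gametocyte"] * 10
weights = inverse_frequency_weights(labels)

# A biased model that assigns 90% probability to "uninfected" for every image.
probs = [{"uninfected": 0.9, "gametocyte": 0.1}] * len(labels)
uniform = {cls: 1.0 for cls in weights}
loss_unweighted = weighted_cross_entropy(probs, labels, uniform)
loss_weighted = weighted_cross_entropy(probs, labels, weights)
```

The weighted loss is much larger than the unweighted one for this biased model, which is the signal that pushes training toward the minority class.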

Problem: Training is Unstable or Validation Loss Does Not Converge

Symptoms: Loss values fluctuate wildly, or the model fails to show improvement on the validation set over time.

Solution Steps:

- Review Learning Rate and Optimizer:

- A study on ConvNeXt found that the combination of label smoothing and the AdamW optimizer was critical for achieving stable and robust training [21]. Consider switching from standard Adam or SGD to AdamW.

- Implement a learning rate scheduler. A common strategy is to reduce the learning rate after a fixed number of epochs (e.g., reduce by a factor of 10 after every 80 epochs) to allow the model to fine-tune its weights as it converges [41].

- Inspect Data Preprocessing: Ensure your data preprocessing pipeline is consistent between training and validation. Mismatches in normalization or resizing can cause instability.

- Verify Model Architecture Configuration: For ResNet models, ensure the correct order of operations (batch normalization, activation, convolution) in your residual blocks, as defined in your code [41].
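
The step-based learning rate schedule described above reduces to a one-line formula. The sketch below is framework-independent; the function name and the base learning rate of `1e-3` are assumptions, while the step size of 80 epochs and the factor of 10 follow the example in the text. Deep learning frameworks offer equivalent built-in schedulers (for instance, `StepLR` in PyTorch).

```python
def step_decay_lr(base_lr, epoch, step_size=80, gamma=0.1):
    """Learning rate at `epoch`, multiplied by `gamma` every `step_size` epochs."""
    if epoch < 0 or step_size <= 0:
        raise ValueError("epoch must be >= 0 and step_size must be > 0")
    return base_lr * gamma ** (epoch // step_size)


# Learning rate at a few checkpoints of a hypothetical 200-epoch run.
schedule = {epoch: step_decay_lr(1e-3, epoch) for epoch in (0, 79, 80, 165)}
```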

Experimental Protocols & Reagents